Recreating Star Trek’s holodeck using ChatGPT

In the realm of science fiction, Star Trek: The Next Generation introduced viewers to the holodeck, a pioneering concept where Captain Picard and his crew could simulate diverse environments ranging from lush jungles to Victorian London.

This technology was not just for recreation but served as a vital training tool aboard the U.S.S. Enterprise. Today, the idea has a real-world counterpart in robotics through a process known as ‘Sim2Real’, in which robots are trained in simulated environments before being deployed in the real world.

Yue Yang, a doctoral student supervised by Mark Yatskar and Chris Callison-Burch, Assistant and Associate Professors in Computer and Information Science (CIS), respectively, highlighted a significant challenge in developing virtual environments. “Artists manually create these environments. Those artists could spend a week building a single environment,” Yang explained. This meticulous process involves numerous decisions about layout, object placement, and colour schemes.

The scarcity of virtual environments is a bottleneck in training robots to navigate our complex real world. Chris Callison-Burch elaborated on the requirements for training today's artificial intelligence (AI) systems: “Generative AI systems like ChatGPT are trained on trillions of words, and image generators like Midjourney and DALL-E are trained on billions of images. We only have a fraction of that amount of 3D environments for training so-called ‘embodied AI.’ If we want to use generative AI techniques to develop robots that can safely navigate in real-world environments, then we will need to create millions or billions of simulated environments.”

In response to this need, a collaborative effort between researchers at Stanford, the University of Washington, and the Allen Institute for Artificial Intelligence (AI2), along with Callison-Burch, Yatskar, Yang, and Lingjie Liu (Aravind K. Joshi Assistant Professor in CIS), has given rise to Holodeck. This system, inspired by its ‘Star Trek’ namesake, can generate a virtually limitless array of interactive 3D environments. Yang shared: “We can use language to control it. You can easily describe whatever environments you want and train the embodied AI agents.”

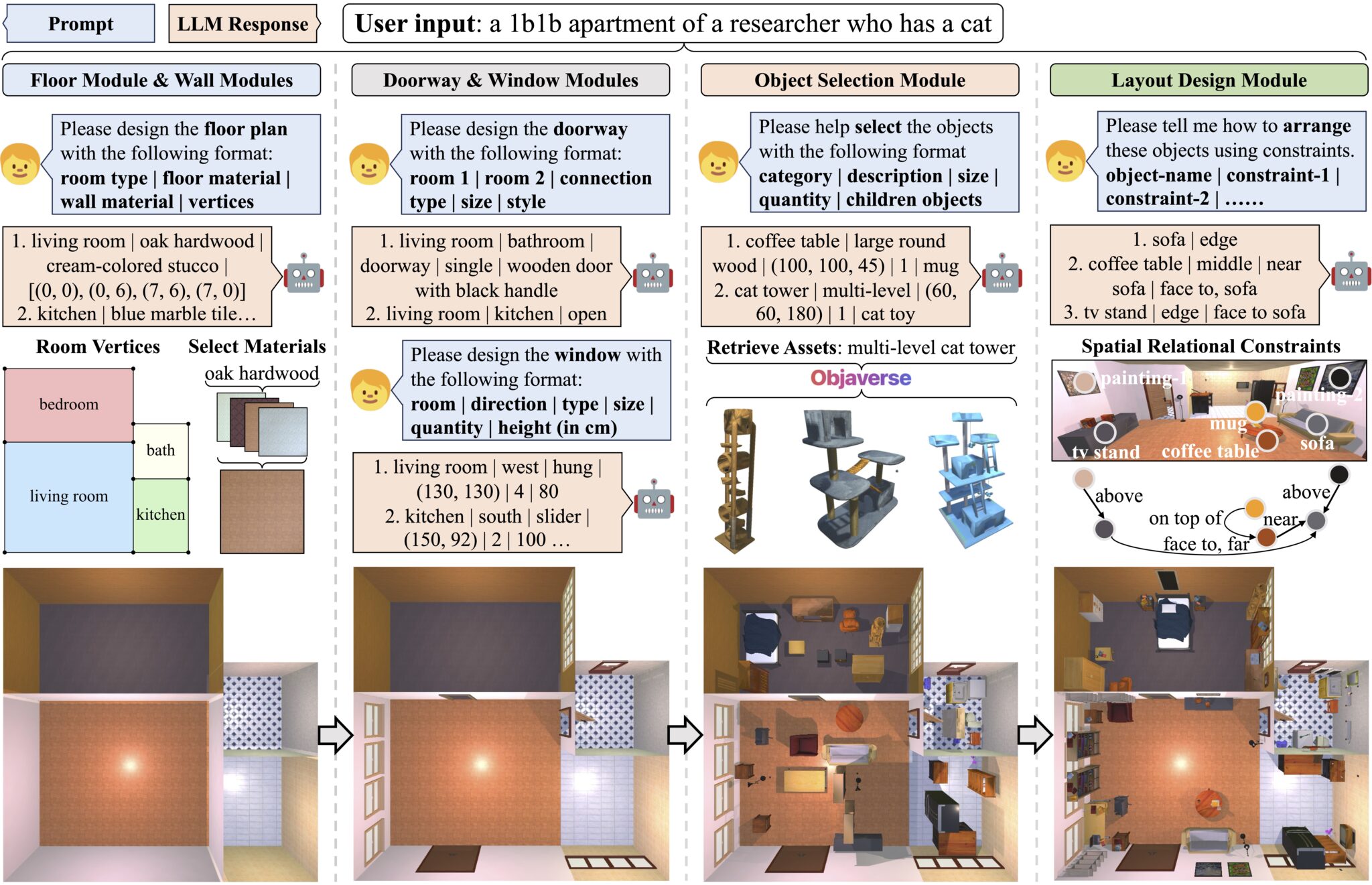

Holodeck employs large language models (LLMs) to process user requests into specific environment parameters through a series of structured queries. This enables it to simulate various settings, from apartments to public spaces, with precision. The system further utilises Objaverse, a comprehensive library of digital objects, and a specially designed layout module to ensure realistic placement of items within the scene.
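To make the three stages described above more concrete (a language model turning a request into parameters, asset retrieval from a library, and a layout step), here is a minimal Python sketch. It is not Holodeck's actual code: the `mock_llm` function, the tiny `ASSET_LIBRARY` dictionary standing in for Objaverse, the grid-based room, and the greedy placement rule are all assumptions made purely for illustration.

```python
from __future__ import annotations

import json
from dataclasses import dataclass

# Hypothetical stand-in for an asset library such as Objaverse:
# object name -> footprint (width, depth) in grid cells.
ASSET_LIBRARY = {"piano": (3, 2), "stool": (1, 1), "bookshelf": (2, 1), "sofa": (3, 1)}


@dataclass
class Placement:
    name: str
    x: int  # left edge, in grid cells
    y: int  # near edge, in grid cells
    w: int  # width of the footprint
    d: int  # depth of the footprint


def mock_llm(prompt: str) -> str:
    """Stand-in for a structured LLM query; returns a canned JSON scene spec."""
    return json.dumps({
        "room": {"width": 6, "depth": 5},
        "objects": ["piano", "stool", "bookshelf", "harp"],
    })


def overlaps(a: Placement, b: Placement) -> bool:
    """True if two axis-aligned footprints share any floor area."""
    return a.x < b.x + b.w and b.x < a.x + a.w and a.y < b.y + b.d and b.y < a.y + a.d


def layout(spec: dict) -> list[Placement]:
    """Greedily place each known object at the first free spot, scanning row by row."""
    room_w, room_d = spec["room"]["width"], spec["room"]["depth"]
    placed: list[Placement] = []
    for name in spec["objects"]:
        if name not in ASSET_LIBRARY:
            continue  # no matching asset in the (toy) library, so skip it
        w, d = ASSET_LIBRARY[name]
        candidates = (
            Placement(name, x, y, w, d)
            for y in range(room_d - d + 1)
            for x in range(room_w - w + 1)
        )
        spot = next((c for c in candidates if not any(overlaps(c, p) for p in placed)), None)
        if spot is not None:
            placed.append(spot)
    return placed


def generate_scene(prompt: str) -> list[Placement]:
    """Text prompt -> scene spec (via the mock LLM) -> non-overlapping placements."""
    spec = json.loads(mock_llm(prompt))
    return layout(spec)


if __name__ == "__main__":
    for item in generate_scene("a music room with a piano"):
        print(item)
```

A real layout module would reason about far richer constraints than non-overlap (for example, a stool facing a piano, or shelves against a wall); the greedy scan here is only the simplest rule that keeps the example short.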

Researchers assessed the effectiveness of Holodeck against ProcTHOR, an earlier tool, by generating 120 scenes with each and conducting surveys among Penn Engineering students. The feedback was overwhelmingly in favour of Holodeck, citing better asset selection, layout coherence, and general preference.

Moreover, Holodeck's potential was tested by fine-tuning an embodied AI agent on scenes it generated. This fine-tuning significantly improved the agent's performance in unfamiliar environments. For example, the agent's success rate in locating a piano in a music room jumped from 6% to over 30%, a more than fivefold increase, when it was trained with scenes from Holodeck.

Yue Yang reflected on the broader implications of this technology: “This field has been stuck doing research in residential spaces for a long time. But there are so many diverse environments out there – efficiently generating a lot of environments to train robots has always been a big challenge, but Holodeck provides this functionality.”

In June, the team will present their findings and the capabilities of Holodeck at the 2024 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR) in Seattle, Washington. This development not only paves the way for more advanced robotic training but also brings us one step closer to the futuristic visions portrayed in Star Trek.