Software defined power for data centres

Building more data centres or expanding the capacity of existing ones is all very well, but with it comes widespread concern over the energy consumed by IT behemoths, warns Mark Adams, senior VP, CUI

The IT industry continues to face a growing demand for data centre processing and storage capacity as businesses and consumers come to appreciate the benefits the Cloud can offer. Businesses can avoid the capital cost and overhead of running their own IT operations, while having access to information from any location and the potential for enhanced data analysis services. Consumers are living their lives online - not just through social media and photo-sharing applications. They entrust their finances, bills and other information to web-based services, relieved of the worry of losing data due to hard-drive failure or the ravages of theft, fire or flood.

With building more data centres or expanding the capacity of existing ones comes a widespread concern over the energy consumed. While technology advances make it possible to pack more processing power or memory into a given space, this simply increases system power density when it is often the total power capacity that is the most constrained resource. Keeping all the equipment racks cool poses an additional energy demand. What is needed is a way to unlock the power capacity that is often dedicated to providing redundancy for mission-critical operations or held in reserve for peak demand. The answer is Software Defined Power.

Availability without redundancy

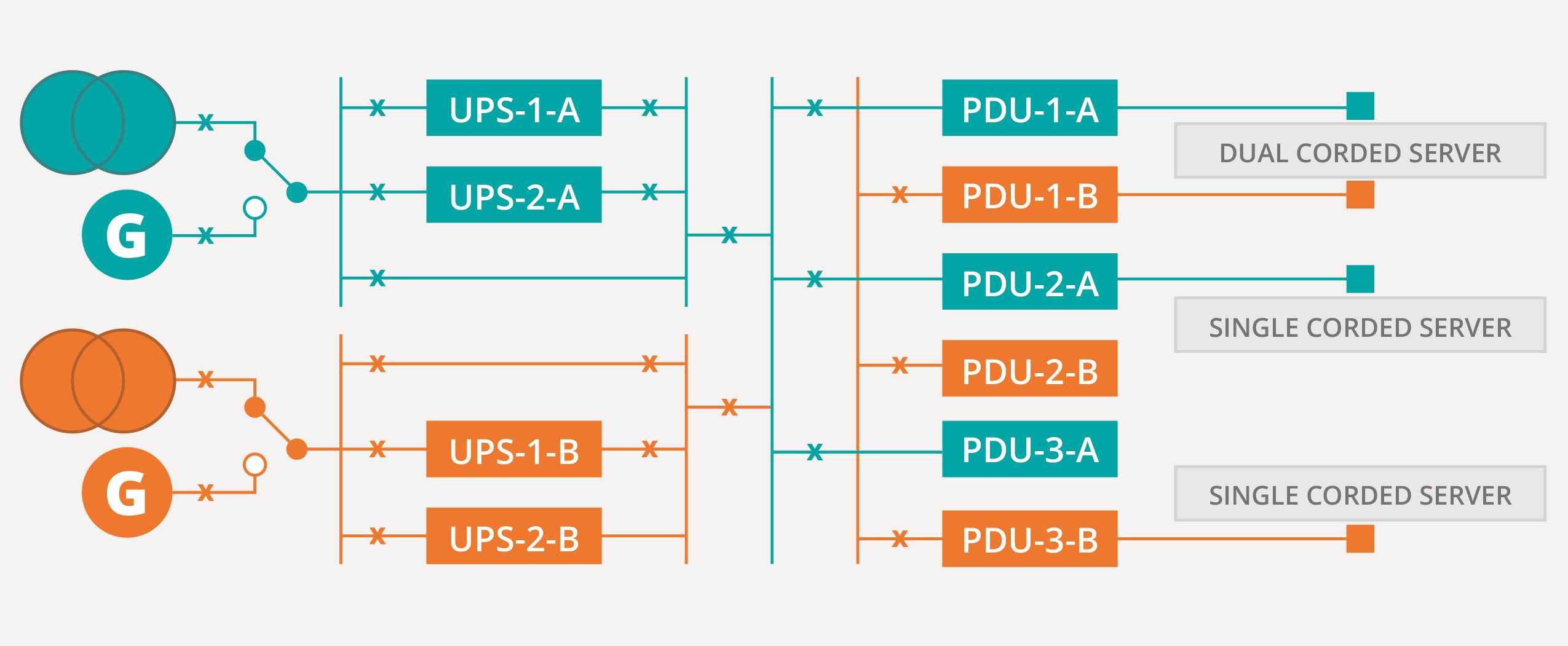

To ensure high availability for processing mission-critical tasks, the power supply architecture employed by a tier-3 or tier-4 data centre requires 100% redundancy. This is shown in Figure 1, where each of the power supply elements, from utility source or generator input, through UPS back-up, and on to the distribution units that power the individual servers, is duplicated. For dual-corded servers, this provides the necessary redundancy, but for single-corded servers handling less critical workloads, such as test or development tasks, half their power provision is not required.

Figure 1: Tier-3 and tier-4 data centres require a 100% power redundancy architecture

Even if just 30% of a data centre’s workload were non-critical, the redundant half of the power provisioned for it would not be needed, so 15% of total power capacity could be freed to power additional servers. Clearly, the answer here is to manage power at a higher level, using software controls that know which servers are running critical tasks and which are not, and connect power accordingly, ensuring redundancy only where it is truly required.
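The arithmetic behind this figure can be sketched in a few lines of Python. The function name is hypothetical, and the sketch assumes a fully duplicated supply chain as in Figure 1, so that exactly half of the provisioned capacity is the redundant copy.

```python
def freeable_capacity_fraction(non_critical_fraction: float) -> float:
    """Estimate the share of total power capacity that could be released.

    Assumes a fully duplicated architecture: half of all provisioned
    capacity is the redundant copy, and non-critical (single-corded)
    workloads do not need their redundant half.
    """
    if not 0.0 <= non_critical_fraction <= 1.0:
        raise ValueError("non_critical_fraction must be between 0 and 1")
    redundant_share = 0.5  # assumption: 100% redundancy, i.e. two full supply paths
    return non_critical_fraction * redundant_share


# 30% non-critical workload frees roughly 15% of total capacity
print(f"{freeable_capacity_fraction(0.30):.0%}")
```

This is only a first-order estimate: it ignores cooling overheads and assumes power demand is spread evenly across critical and non-critical workloads.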

Peak service without peak power

Modern processors incorporate a raft of power saving features. The power consumed when a CPU is idle can be significantly less than when it is operating at 100% utilisation. This difference is magnified through the server and rack level, making power capacity planning for an entire data centre challenging, especially when the nature of a server’s workload can exacerbate the variability in power consumption. Google, for example, has reported that the average-to-peak power ratio for webmail is around 90%, whereas the ratio for servers performing web search tasks is much lower at 73%.

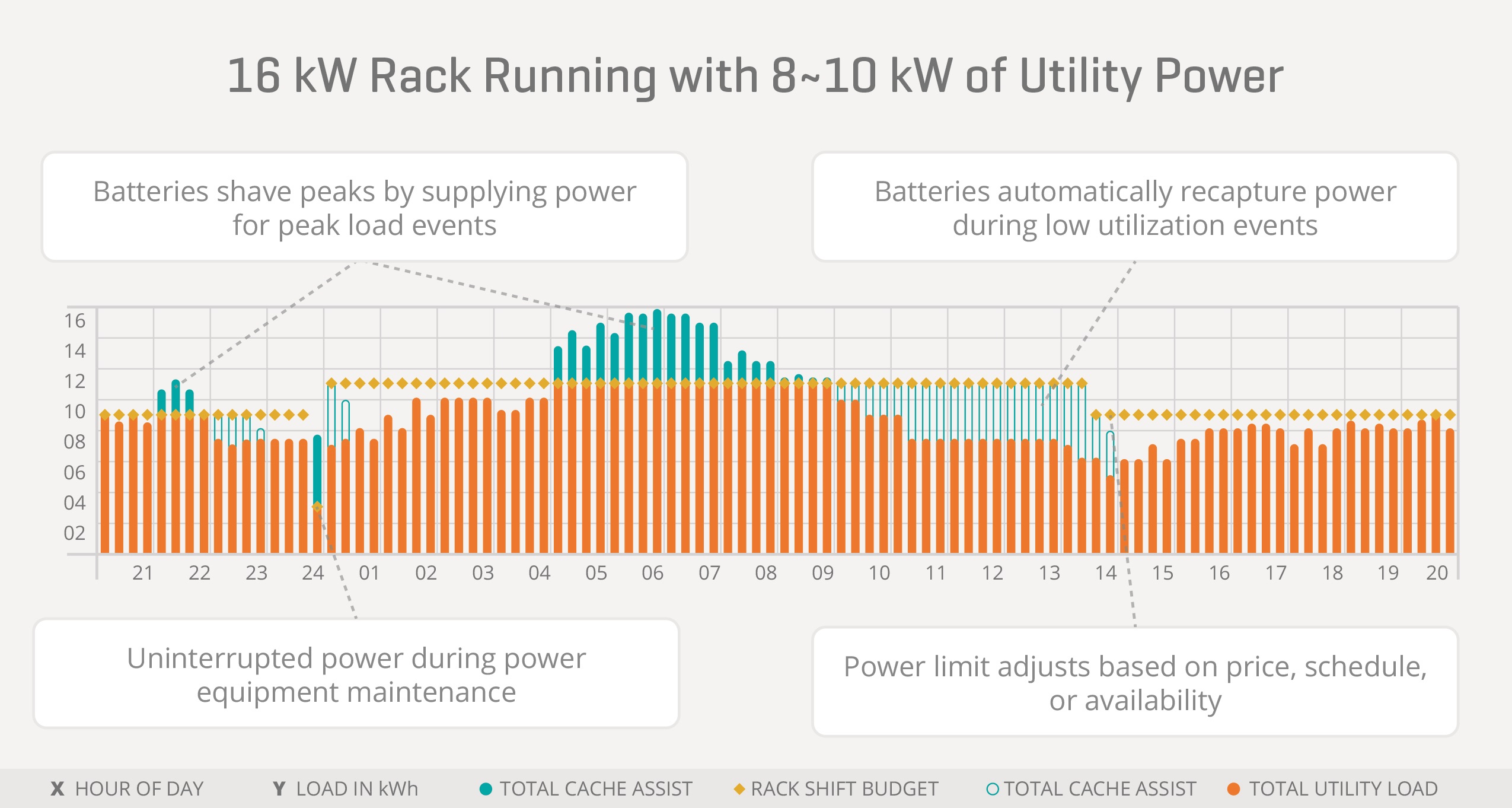

Figure 2: Overcoming load fluctuations with battery storage lowers peak utility power demand


Consequently, even provisioning data centre power capacity based on the highest average-to-peak ratio can result in considerable under-utilisation. This is made worse when planners include a safety buffer in the power provisioning to cover the possibility that actual peak power demands may exceed what they have modelled. This, in part, is why the average power utilisation in data centres worldwide today is still below 40%, even before allowing for redundancy provisions.

Overcoming this tendency to over-provision “just in case” requires a greater understanding of dynamic power usage, together with the ability to redistribute loads, not just between servers but also potentially in time, i.e. scheduling less time-critical tasks for quieter periods during the day. Figure 2 illustrates the problem and shows how a technique known as peak shaving can even out demand.

Figure 3: CUI’s rack-mount ICE hardware for intelligent power switching and battery storage

Battery storage provides a more responsive solution than supplementing utility power with local generating sets. The latter is only really suitable for situations where demand peaks have a relatively long duration and occur at predictable times. Again though, intelligent software is needed to switch loads to battery supplies as demand ramps up and to recharge the batteries during periods of lower utilisation.
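The switching logic described above can be illustrated with a minimal simulation. This is a sketch under stated assumptions, not a description of any vendor's algorithm: the `Battery` class, the `peak_shave` function, the fixed utility cap and all the numbers are hypothetical, and charging and discharging losses are ignored.

```python
from dataclasses import dataclass


@dataclass
class Battery:
    capacity_kwh: float   # total energy the battery can hold
    charge_kwh: float     # energy currently stored
    max_power_kw: float   # limit on charge and discharge rate


def peak_shave(load_kw, utility_cap_kw, battery, step_hours=1.0):
    """Return the utility draw (kW) for each interval of the load profile.

    Above the cap, the battery discharges to cover as much of the excess
    as it can. Below the cap, spare utility headroom recharges the battery.
    """
    utility_kw = []
    for load in load_kw:
        if load > utility_cap_kw:
            excess = load - utility_cap_kw
            available = min(battery.max_power_kw, battery.charge_kwh / step_hours)
            discharge = min(excess, available)
            battery.charge_kwh -= discharge * step_hours
            utility_kw.append(load - discharge)
        else:
            headroom = utility_cap_kw - load
            room = (battery.capacity_kwh - battery.charge_kwh) / step_hours
            charge = min(headroom, battery.max_power_kw, room)
            battery.charge_kwh += charge * step_hours
            utility_kw.append(load + charge)
    return utility_kw


# Hypothetical hourly load profile (kW) with a two-hour peak above a 100 kW cap
load = [60.0, 70.0, 110.0, 120.0, 80.0, 60.0]
battery = Battery(capacity_kwh=40.0, charge_kwh=40.0, max_power_kw=25.0)
utility = peak_shave(load, utility_cap_kw=100.0, battery=battery)
print(max(load), max(utility))
```

In this example the utility draw never exceeds the 100 kW cap even though the load peaks at 120 kW, and the battery is fully recharged by the end of the profile. If the battery were too small for the peak, the draw would simply exceed the cap for the remainder, which is why sizing storage against the expected peak duration matters.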

Software flexibility

The key to successfully managing the provision of data centre power is understanding server workloads. This means knowing which are mission- and/or time-critical and require redundant supplies, including generating sets and UPS back-ups, and which are less critical and can be rescheduled to quieter times or restarted if interrupted by a supply failure. With appropriate power system management, data centre operators should be able to overcome their constant fear of “running out of power”. Better still, intelligent control allows redundant or under-utilised power capacity to be unlocked, enabling data processing and storage capacity to be increased without having to install extra power capacity.

The deployment of Software Defined Power (SDP) makes this possible. SDP builds on the use of digital power, where the normal feedback loop that regulates the output of a power supply is digitally controlled. Data centre power architectures typically convert power from AC to DC and then distribute it at progressively lower voltages from server rack to server and finally to the CPUs and other circuits. Digital control allows these intermediate and final load voltages to be adjusted to optimise the efficiency of each of the supply stages. SDP takes this a step further with techniques that can monitor and control the loading of all the power supplies.

SDP and intelligent control

In partnership with Virtual Power Systems, CUI has implemented the peak shaving concept in a novel SDP solution for IT systems. Its Intelligent Control of Energy (ICE) system combines hardware and software elements to maximise capacity utilisation and optimise performance. Various hardware modules, such as rack-mount battery storage and switching units, can be placed at strategic power control locations in the data centre to facilitate software decisions on power sourcing. The ICE operating system collects telemetry data from ICE and other infrastructure hardware to support real-time control, using power optimisation algorithms to release redundant capacity and smooth supply loading.

A trial with a leading data centre operator revealed the potential to unlock 16MW of power from an installed capacity of 80MW using ICE hardware. This upgrade took a fraction of the time that installing an additional 16MW of supply capacity would have required, at a quarter of the capital expenditure, to say nothing of the benefit of reduced ongoing operating expenditure.