AI and the road to full autonomy in autonomous vehicles

The road to fully autonomous vehicles is, by necessity, a long and winding one. Systems that implement new technologies to raise a vehicle's level of driving automation (the levels are discussed further below) must be rigorously tested for safety and longevity before they reach vehicles bound for public streets.

The network of power supplies, sensors, and electronics used for Advanced Driver Assistance Systems (ADAS) – features of which include emergency braking, adaptive cruise control, and self-parking – is extensive. The effectiveness of ADAS is determined by the accuracy of the sensing equipment, coupled with the accuracy and speed of analysis of the on-board autonomous controller.

The on-board analysis is where artificial intelligence comes into play, and it is crucial to the proper functioning of autonomous vehicles. In IDTechEx’s recent report on AI hardware at the edge of the network, ‘AI Chips for Edge Applications 2024 – 2034: Artificial Intelligence at the Edge’, AI chips (semiconductor circuitry capable of efficiently handling machine learning workloads) are projected to generate revenue of more than $22 billion by 2034. The industry vertical set to see the highest growth over the next ten-year period is automotive, with a compound annual growth rate (CAGR) of 13%.
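To give a feel for what a 13% CAGR implies over a ten-year forecast, the short Python sketch below applies the standard compound-growth formula. The starting value of 1.0 is a placeholder rather than a figure from the report, and the function names are purely illustrative.

```python
def project(start_value: float, rate: float, years: int) -> float:
    """Value after compounding `rate` (0.13 means 13%) for `years` years."""
    return start_value * (1 + rate) ** years


def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate implied by a start and end value."""
    if start_value <= 0 or years <= 0:
        raise ValueError("start_value and years must be positive")
    return (end_value / start_value) ** (1 / years) - 1


# Placeholder starting size of 1.0 (arbitrary units), NOT a report figure.
growth_multiple = project(1.0, 0.13, 10)
print(f"Growth multiple after 10 years at 13%: {growth_multiple:.2f}x")

# Round trip: recover the rate from the start and end values.
print(f"Implied CAGR: {cagr(1.0, growth_multiple, 10):.1%}")
```

Under these assumptions, a market growing at 13% a year ends the decade at roughly 3.4 times its starting size.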

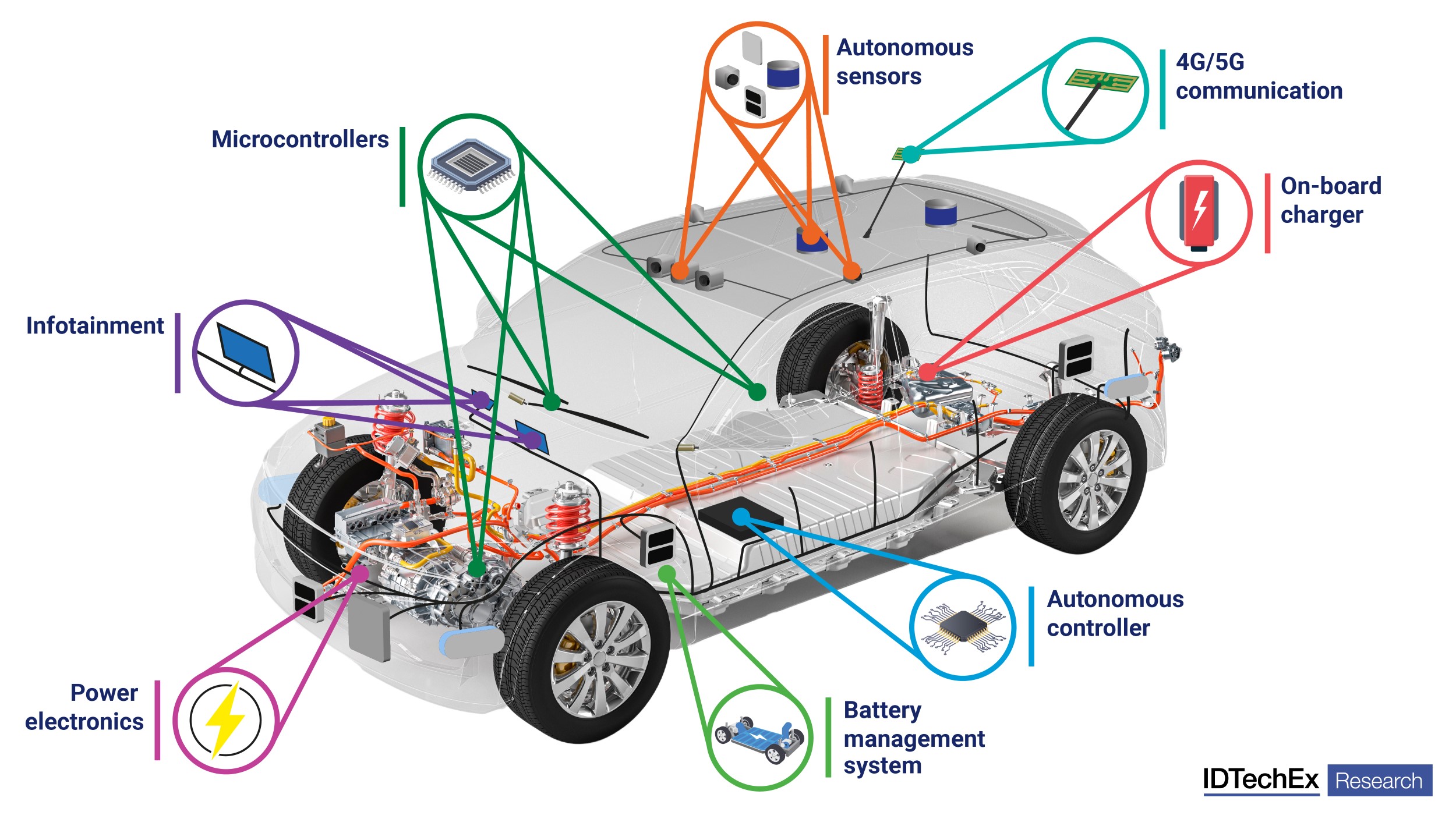

Circuitry and electrical components within a car, many of which work together to comprise ADAS. Source: IDTechEx


The part that AI plays

The AI chips used in vehicles are found in centrally located microcontrollers (MCUs), which are, in turn, connected to peripherals such as sensors and antennae to form a functioning ADAS. On-board AI compute can be used for several purposes, such as driver monitoring (where controls are adjusted for specific drivers, head and body positions are monitored in an attempt to detect drowsiness, and the seating position is changed in the event of an accident), driver assistance (where AI is responsible for object detection and appropriate corrections to steering and braking), and in-vehicle entertainment (where on-board virtual assistants act in much the same way as on smartphones or in smart appliances). The most important of these is driver assistance, as the robustness and effectiveness of the AI system determines the vehicle's autonomous driving level.

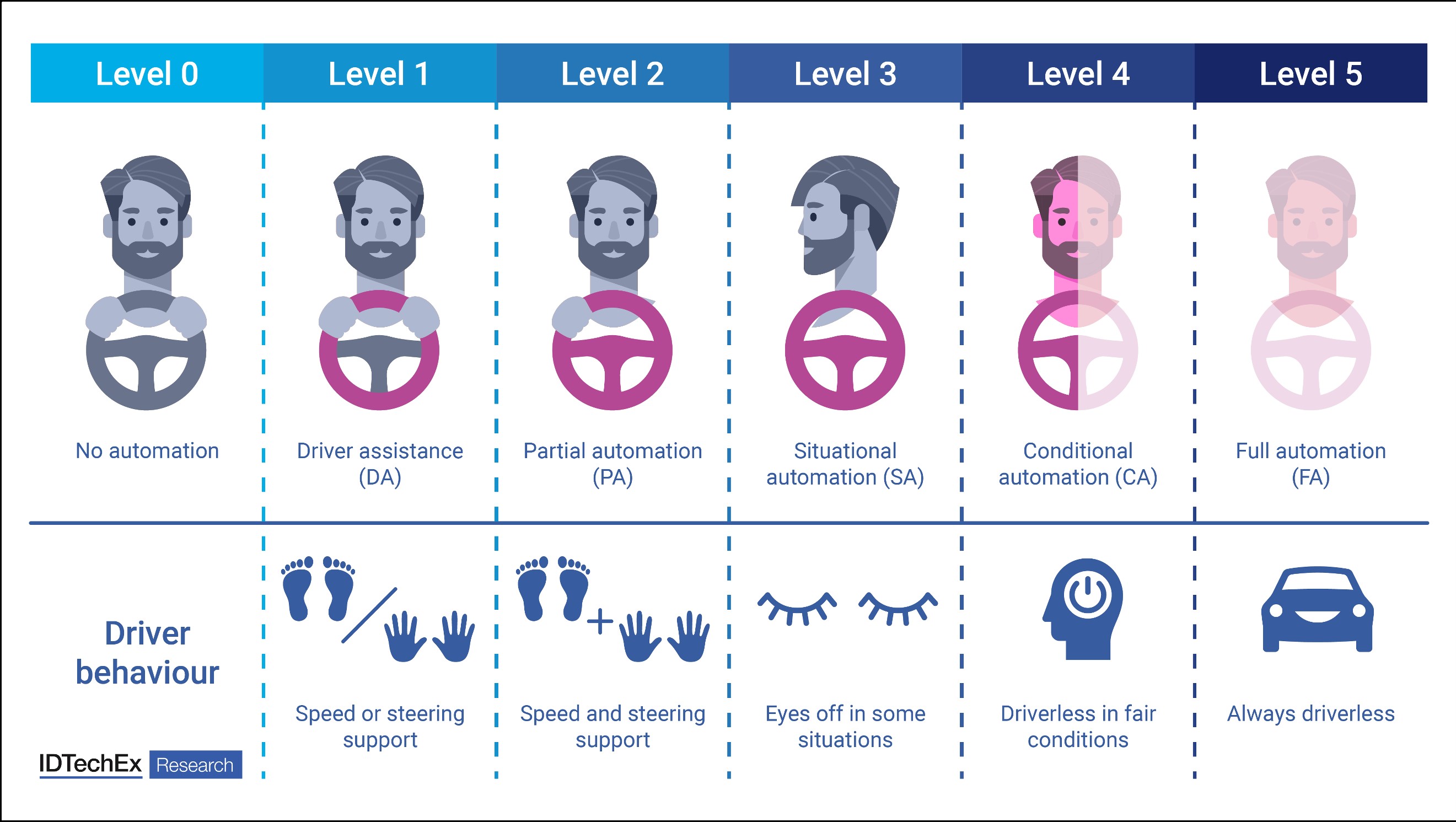

Since their launch in 2014, the SAE Levels of Driving Automation (pictured) have been the most-cited reference for driving automation in the automotive industry. They define six levels of driving automation, ranging from Level 0 (no driving automation) to Level 5 (full driving automation). The current highest state of autonomy in the private automotive industry (incorporating vehicles for private use, such as passenger cars) is SAE Level 2. The jump from Level 2 to Level 3 is significant, given the relative advancement in technology required to achieve situational automation.

The SAE levels of driving automation. Source: IDTechEx
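For readers who think in code, the six levels can be sketched as a simple ordered enumeration. The names for Levels 1 to 4 follow common summaries of the SAE J3016 taxonomy rather than anything stated in this article, and the `driver_must_supervise` helper is a hypothetical simplification of where responsibility shifts between Level 2 and Level 3.

```python
from enum import IntEnum


class SAELevel(IntEnum):
    """SAE driving automation levels, 0 (none) to 5 (full)."""
    NO_AUTOMATION = 0
    DRIVER_ASSISTANCE = 1       # name assumed from SAE J3016 summaries
    PARTIAL_AUTOMATION = 2      # name assumed from SAE J3016 summaries
    CONDITIONAL_AUTOMATION = 3  # name assumed from SAE J3016 summaries
    HIGH_AUTOMATION = 4         # name assumed from SAE J3016 summaries
    FULL_AUTOMATION = 5


def driver_must_supervise(level: SAELevel) -> bool:
    """Simplified rule: up to Level 2 the human must supervise at all times."""
    return level <= SAELevel.PARTIAL_AUTOMATION


for level in SAELevel:
    print(level.value, level.name, driver_must_supervise(level))
```

The jump the article describes sits exactly where this helper flips from `True` to `False`: from Level 3 upwards, the system rather than the driver monitors the driving environment while engaged.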


A range of sensors installed in the car, including LiDAR (Light Detection and Ranging) and vision sensors, among others, relays important information to the main processing unit in the vehicle. The compute unit is then responsible for analysing this data and making the appropriate adjustments to steering and braking.

For processing to be effective, the machine learning algorithms that the AI chips employ must be extensively trained prior to deployment. This training exposes the algorithms to a great quantity of ADAS sensor data so that, by the end of the training period, they can accurately detect objects, identify them, and differentiate them from one another (as well as from their background, thus determining depth).

Passive ADAS is where the compute unit alerts the driver to necessary action via sounds, flashing lights, or physical feedback. This is the case in reverse parking assistance, for example, where proximity sensors alert the driver to where the car is in relation to obstacles. Active ADAS, however, is where the compute unit makes adjustments for the driver. As these adjustments occur in real time and need to account for varying vehicle speeds and weather conditions, it is of great importance that the chips that comprise the compute unit are able to make calculations quickly and effectively.
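The distinction between passive and active ADAS can be illustrated with a minimal Python sketch based on time-to-collision. This is not how any particular vehicle implements it: the `Detection` fields, the `decide` function, and the 3.0 s and 1.5 s thresholds are all hypothetical values chosen for illustration.

```python
from dataclasses import dataclass
from enum import Enum


class Action(Enum):
    NONE = "none"
    WARN_DRIVER = "warn_driver"  # passive ADAS: alert only
    BRAKE = "brake"              # active ADAS: system intervenes


@dataclass
class Detection:
    distance_m: float         # gap to the detected object, in metres
    closing_speed_mps: float  # positive when the gap is shrinking


def time_to_collision(d: Detection) -> float:
    """Seconds until contact at the current closing speed."""
    if d.closing_speed_mps <= 0:
        return float("inf")  # not closing, so no collision predicted
    return d.distance_m / d.closing_speed_mps


def decide(d: Detection, active_enabled: bool,
           warn_ttc_s: float = 3.0, brake_ttc_s: float = 1.5) -> Action:
    """Choose a response; thresholds are illustrative assumptions."""
    ttc = time_to_collision(d)
    if active_enabled and ttc <= brake_ttc_s:
        return Action.BRAKE
    if ttc <= warn_ttc_s:
        return Action.WARN_DRIVER
    return Action.NONE


print(decide(Detection(30.0, 5.0), active_enabled=True))   # far away
print(decide(Detection(10.0, 5.0), active_enabled=False))  # passive alert
print(decide(Detection(5.0, 5.0), active_enabled=True))    # active braking
```

In a real system the decision would run on every sensor frame, which is why the latency of the compute unit matters as much as its accuracy.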

A scalable roadmap

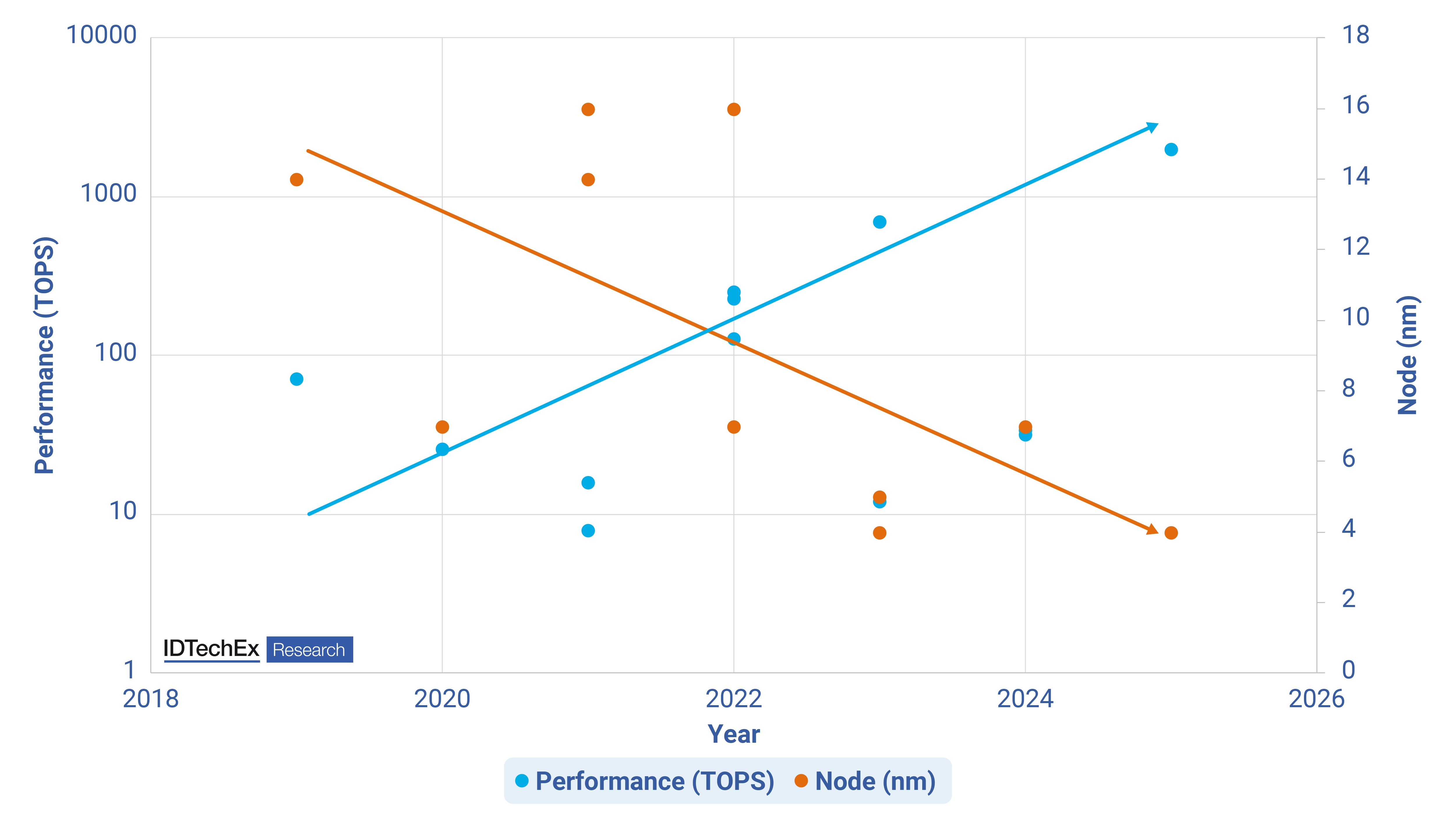

The trend for automotive SoCs is for performance to increase each year while process nodes move toward the leading edge. Source: IDTechEx


SoCs for vehicular autonomy have only been around for a relatively short time, yet there is a clear trend towards smaller node processes, which aid in delivering higher performance. This makes sense, as higher levels of autonomy will necessarily require a greater degree of computation (the human computational input is effectively outsourced to semiconductor circuitry). The above graph collates the data of 11 automotive SoCs, one of which was released in 2019, while others are scheduled for automotive manufacturers’ 2024 and 2025 production lines. Among the most powerful of the SoCs considered are the Nvidia DRIVE Thor, expected in 2025, for which Nvidia claims a performance of 2,000 trillion operations per second (TOPS), and the Qualcomm Snapdragon Ride Flex, which has a performance of 700 TOPS and is expected in 2024.

Moving to smaller node sizes requires more expensive semiconductor manufacturing equipment (particularly at the leading edge, as Deep Ultraviolet and Extreme Ultraviolet lithography machines are used) and more time-consuming manufacturing processes. As such, the capital required for foundries to move to more advanced node processes proves a significant barrier to entry for all but a few semiconductor manufacturers. This is one reason that several integrated device manufacturers (IDMs) are now outsourcing high-performance chip manufacture to those foundries already capable of such fabrication.

To keep future costs down, it is also important for chip designers to consider the scalability of their systems. Because autonomous driving levels are being adopted stepwise, designers that do not consider scalability at this juncture run the risk of spending more on designs at ever more advanced nodes. Given that 4nm and 3nm chip design (at least for the AI accelerator portion of the SoC) likely offers sufficient performance headroom up to SAE Level 5, it behooves designers to consider hardware that is able to adapt to handling increasingly advanced AI algorithms.

It will be some years until we see cars on the road capable of the most advanced automation levels proposed above, but the technology to get there is already gaining traction. The next couple of years, especially, will be important ones for the automotive industry.

Report coverage

IDTechEx forecasts that the global AI chips market for Edge devices will grow to $22.0 billion by 2034, with AI chips for automotive accounting for more than 10% of this figure. IDTechEx’s report gives analysis pertaining to the key drivers for revenue growth in edge AI chips over the forecast period, with deployment within the key industry verticals – consumer electronics, industrial automation, and automotive – reviewed. Case studies of automotive players’ leading system-on-chips (SoCs) for ADAS are given, as are key trends relating to performance and power consumption for automotive controllers.

More generally, the report covers the global AI chips market across eight industry verticals, with 10-year granular forecasts in six different categories (such as by geography, by chip architecture, and by application). IDTechEx’s report ‘AI Chips for Edge Applications 2024 – 2034: Artificial Intelligence at the Edge’ addresses the major questions, challenges, and opportunities facing the edge AI chip value chain. For further understanding of the markets, players, technologies, opportunities, and challenges, please refer to the report.

For more information on this report, please visit www.IDTechEx.com/Research/AI.