Cameras will be able to capture pictures around corners

Researchers at the Morgridge Institute for Research and the University of Wisconsin-Madison are working to optimise a camera capable of a slick optical trick: snapping pictures around corners. The imaging project, supported by a new $4.4 million grant from the U.S. Defense Department’s Advanced Research Projects Agency (DARPA), will spend four years exploring the limitations and potential applications of scattered-light technology that can recreate scenes outside the human line of sight.

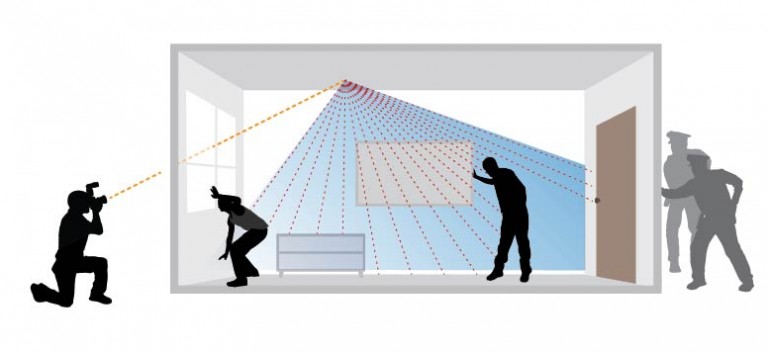

The technology, pioneered by Morgridge imaging specialist Andreas Velten, sends out pulses of light whose photons scatter and bounce through a scene before being recaptured by finely tuned sensors connected to the camera. Information from this scattered light helps the researchers digitally rebuild a 3D environment that is either hidden or obstructed from view.

Although still in its infancy, the technology has generated excitement about potential applications in medical imaging, disaster relief, navigation, robotic surgery and national security. Some applications, if possible at all, may be far in the future. UW-Madison is one of eight university teams receiving 2016 DARPA grants to probe different forms of non-line-of-sight imaging.

Velten, also a scientist with the UW-Madison Laboratory for Optical and Computational Instrumentation (LOCI), first demonstrated non-line-of-sight imaging in 2012 with researchers at the Massachusetts Institute of Technology. The technique was able to recreate human figures and other shapes positioned around corners.

He is now partnering with Mohit Gupta, UW-Madison assistant professor of computer sciences, to see how far they can push the quality and complexity of these pictures, a fundamental step before devices can become reality.

Questions include: Can they recapture movement? Can they determine what material an object is made of? Can they differentiate between very similar shapes? Can they push this method further to see around two, three, or more corners?

Velten and Gupta have developed a theoretical framework to determine how complex a scene they can recapture. They are creating models where they bounce light a half-dozen or more times through a space to capture objects that are either hidden or outside the field of view.

“The more times you can bounce this light within a scene, the more possible data you can collect,” Velten says. “Since the first light is the strongest, and each succeeding bounce gets weaker and weaker, the sensor has to be sensitive enough to capture even a few photons of light.”
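To put rough numbers on why each bounce matters, here is a toy calculation, not the team's model. It assumes, purely for illustration, that every bounce passes on a fixed fraction of the light (the hypothetical `reflectance` parameter). Real scenes lose far more, because diffuse surfaces scatter light in all directions and intensity also falls off with distance.

```python
# Toy model (ASSUMPTION): each bounce keeps a fixed fraction `reflectance`
# of the photons from the previous bounce. Geometric spreading and
# distance falloff are ignored, so real losses would be much steeper.
def photons_after_bounces(initial_photons: float, reflectance: float, bounces: int) -> list[float]:
    """Return the expected photon count remaining after each successive bounce."""
    counts = []
    current = initial_photons
    for _ in range(bounces):
        current *= reflectance  # each bounce returns only part of the light
        counts.append(current)
    return counts


if __name__ == "__main__":
    print(photons_after_bounces(1024, 0.5, 6))
```

Even with a generous assumed reflectance of 0.5, a pulse of 1,024 photons is down to 16 after six bounces, which is why the sensor must register just a handful of photons.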

Gupta brings expertise in computer vision to the project and will develop algorithms that make better sense of the limited data these techniques return, decoding it to help recreate scenes.

“The information we will get is going to be noisy and the shapes will be blob-like, not much to the naked eye, so the visualisation part of this will be huge,” Gupta says. “Because this problem is so new, we don’t even know what’s possible.”

The first two years of the grant will be devoted to pushing the limits of this technique. The final two years will focus on hardware development to make field applications possible.

‘Seeing around corners’ technology could help emergency personnel identify people in danger during fires or natural disasters.

Some of the possibilities are tantalising. For example, Gupta says it could be used for safety tests of jet engines, examining performance while the engines are running. It could also be used to probe impossible-to-see spaces in shipwrecks such as the Titanic. Velten currently has a NASA project examining whether the technology can be used to probe the dimensions of moon caves.

Another application could provide law enforcement with better threat assessment tools in hostile situations.

Interestingly, the light measurements captured in this project would be of little use for standard photography. Regular cameras rely on the first burst of light reflected from the subject, while this project focuses on the indirect light that arrives later, after scattering and bouncing through the scene.

“We are interested in capturing exactly what a conventional camera doesn’t capture,” he says.
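The distinction between early and late light comes down to distance: light travels at a fixed speed, so a photon that arrives later has covered a longer path. The sketch below is an illustrative toy, not the project's processing pipeline. It assumes the length of the direct path is already known, and the function names and the 1 cm `tolerance_m` are hypothetical choices.

```python
# Speed of light in a vacuum, in metres per second (exact by definition).
SPEED_OF_LIGHT_M_PER_S = 299_792_458.0


def path_length_m(arrival_time_s: float) -> float:
    """Total distance travelled by a photon detected arrival_time_s after the pulse left."""
    return SPEED_OF_LIGHT_M_PER_S * arrival_time_s


def split_direct_indirect(
    arrival_times_s: list[float], direct_path_m: float, tolerance_m: float = 0.01
) -> tuple[list[float], list[float]]:
    """Separate detections into direct light and later, indirect light.

    ASSUMPTION: direct_path_m (the one-bounce path length) is known in advance.
    Photons whose path exceeds it by more than tolerance_m are treated as
    indirect light that bounced through the scene; everything else counts
    as direct.
    """
    direct, indirect = [], []
    for t in arrival_times_s:
        extra_m = path_length_m(t) - direct_path_m
        if extra_m > tolerance_m:
            indirect.append(t)  # arrived late: took a longer, multi-bounce path
        else:
            direct.append(t)  # arrived on time: the light a conventional camera records
    return direct, indirect
```

A one-nanosecond delay corresponds to roughly 30 cm of extra travel, so telling these paths apart requires timing on the order of picoseconds.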

Other partners on the project include Universidad de Zaragoza in Spain, Columbia University, Politecnico di Milano in Italy, and the French-German Research Institute in St. Louis, France.