NVIDIA GTC 2024 AI and Cloud key product announcements

NVIDIA is all the rage as GTC 2024 kicks off, with generative AI and Cloud computing dominating the announcements. Here we explore the key NVIDIA GTC 2024 AI and Cloud announcements so you can keep on top of all the exciting developments.

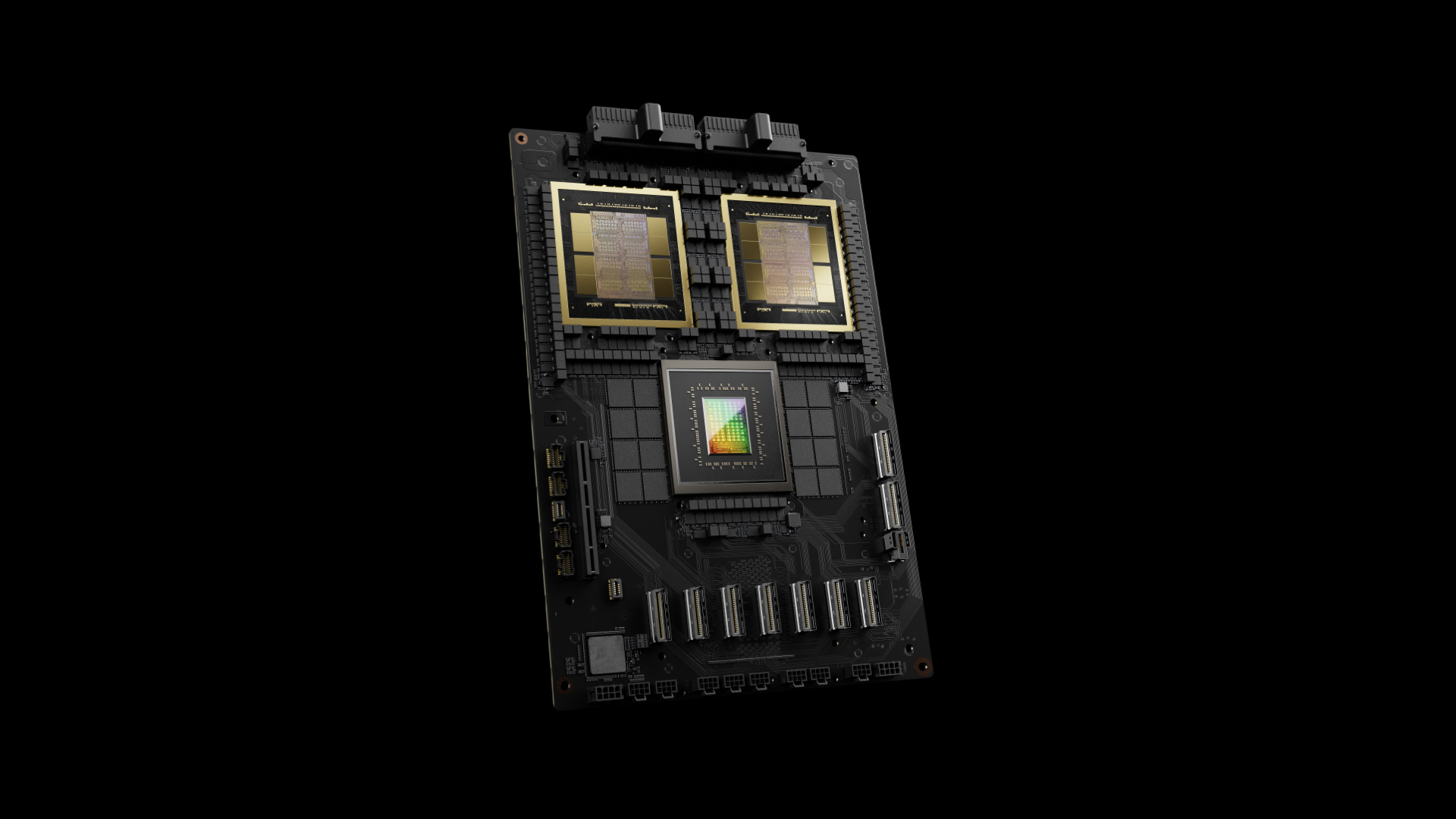

The arrival of the NVIDIA Blackwell Platform

The arrival of the NVIDIA Blackwell Platform comes as no surprise to those following the tech giant, with the platform poised to power the ‘new era of computing,’ as touted by Jensen Huang, Founder and CEO of NVIDIA.

Here’s what you need to know:

- The new Blackwell GPU, supported by NVLink and resilience technologies, enables trillion-parameter-scale AI models.

- New Tensor Cores and the TensorRT-LLM compiler reduce LLM inference operating costs and energy use by up to a staggering 25x.

- Breakthroughs in data processing, engineering simulations, electronic design automation, computer-aided drug design, and quantum computing are being enabled by these new accelerators.

- The platform is already seeing widespread adoption among almost all major Cloud providers, server makers, and AI companies, including Microsoft, Oracle, AWS, and Google Cloud.

Read more on the announcement, here.

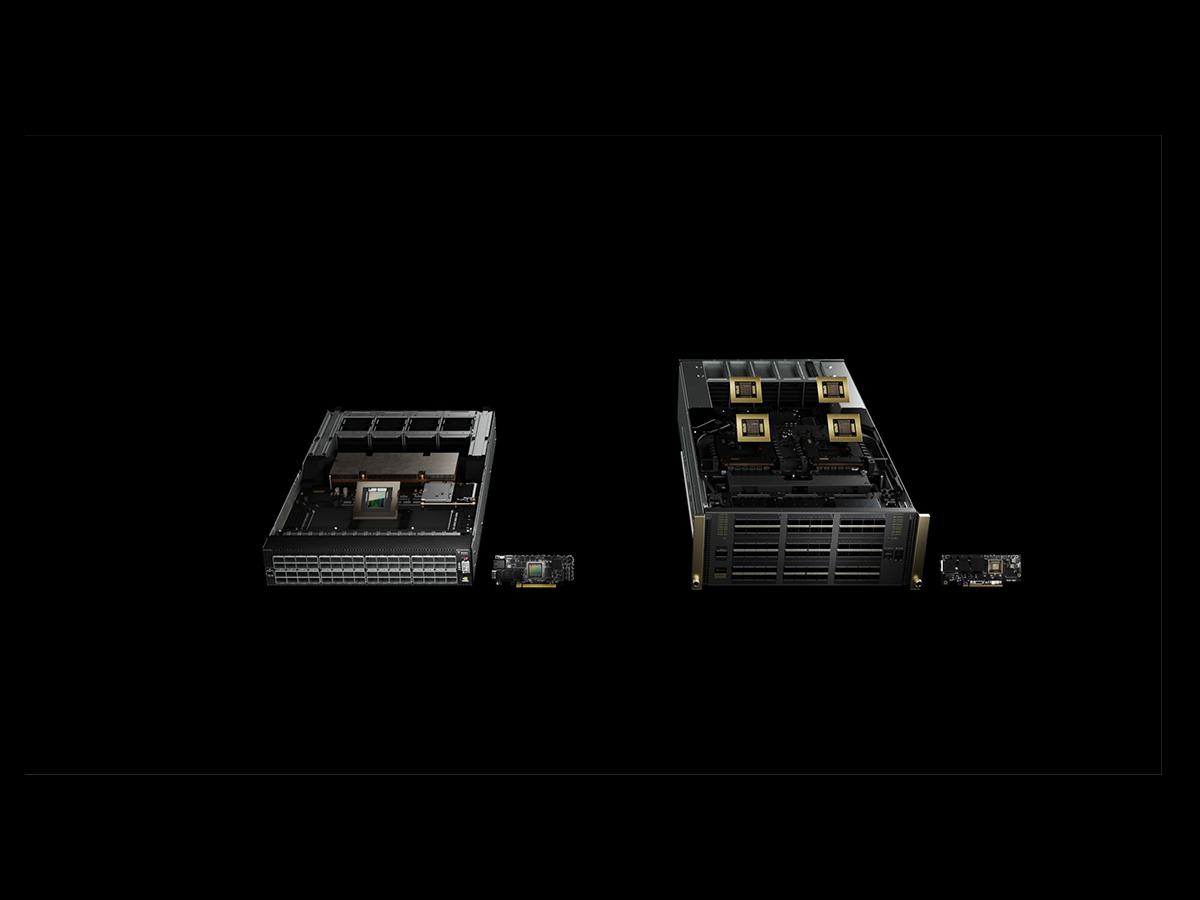

NVIDIA launches the DGX SuperPOD AI supercomputer

NVIDIA announced the next-generation AI supercomputer, the NVIDIA DGX SuperPOD, powered by NVIDIA GB200 Grace Blackwell Superchips, designed for processing trillion-parameter models with constant uptime for superscale generative AI training and inference workloads.

Here’s what you need to know:

- The DGX SuperPOD features a new liquid-cooled rack-scale architecture, built with NVIDIA DGX GB200 systems, providing 11.5 exaflops of AI supercomputing at FP4 precision and 240 terabytes of fast memory, scalable with additional racks.

- Each DGX GB200 system contains 36 NVIDIA GB200 Superchips, comprising 36 NVIDIA Grace CPUs and 72 NVIDIA Blackwell GPUs, connected via fifth-generation NVIDIA NVLink. This delivers up to a 30x performance increase over the NVIDIA H100 Tensor Core GPU for LLM inference workloads.

- The Grace Blackwell-powered DGX SuperPOD can scale to tens of thousands of GB200 Superchips connected via NVIDIA Quantum InfiniBand, enabling a massive shared memory space for next-generation AI models.

- The new rack-scale DGX SuperPOD architecture includes fifth-generation NVIDIA NVLink, NVIDIA BlueField-3 DPUs, and support for NVIDIA Quantum-X800 InfiniBand networking, offering up to 1,800 gigabytes per second of bandwidth to each GPU.

- Fourth-generation NVIDIA Scalable Hierarchical Aggregation and Reduction Protocol (SHARP) technology in the DGX SuperPOD architecture provides 14.4 teraflops of In-Network Computing, a 4x increase over the prior generation.

- NVIDIA also unveiled the NVIDIA DGX B200 system, an air-cooled, traditional rack-mounted AI supercomputing platform for AI model training, fine-tuning, and inference. It features eight NVIDIA Blackwell GPUs and two 5th Gen Intel Xeon processors, and provides up to 144 petaflops of AI performance.
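To put the figures above in proportion, the following is a minimal back-of-the-envelope sketch in Python. It uses only the numbers quoted in the bullets; the constant and function names are our own, and the derived values are simple ratios of "up to" figures rather than anything NVIDIA has published in this form.

```python
# Figures quoted in the bullets above (vendor-stated, not independently measured).
SUPERCHIPS_PER_DGX_GB200 = 36
GRACE_CPUS_PER_DGX_GB200 = 36
BLACKWELL_GPUS_PER_DGX_GB200 = 72
BANDWIDTH_GB_PER_S_PER_GPU = 1_800
SHARP_TERAFLOPS = 14.4
SHARP_GAIN_OVER_PRIOR = 4
DGX_B200_GPUS = 8
DGX_B200_PETAFLOPS = 144


def derived_figures() -> dict[str, float]:
    """Return simple ratios implied by the quoted numbers (illustrative only)."""
    return {
        # Each GB200 Superchip pairs one Grace CPU with two Blackwell GPUs.
        "gpus_per_superchip": BLACKWELL_GPUS_PER_DGX_GB200 / SUPERCHIPS_PER_DGX_GB200,
        "cpus_per_superchip": GRACE_CPUS_PER_DGX_GB200 / SUPERCHIPS_PER_DGX_GB200,
        # Sum of the per-GPU bandwidth across one DGX GB200 system, in TB/s.
        "summed_gpu_bandwidth_tb_per_s": (
            BLACKWELL_GPUS_PER_DGX_GB200 * BANDWIDTH_GB_PER_S_PER_GPU / 1_000
        ),
        # A 4x increase to 14.4 teraflops implies this prior-generation figure.
        "implied_prior_sharp_teraflops": SHARP_TERAFLOPS / SHARP_GAIN_OVER_PRIOR,
        # Peak AI performance of the DGX B200 divided evenly across its GPUs.
        "dgx_b200_petaflops_per_gpu": DGX_B200_PETAFLOPS / DGX_B200_GPUS,
    }


if __name__ == "__main__":
    for name, value in derived_figures().items():
        print(f"{name}: {value:g}")
```

These are peak, best-case numbers, so treat the per-GPU values as a rough guide to scale rather than as expected real-world throughput.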

Read more on the announcement, here.

NVIDIA announces new switches for trillion-parameter GPU computing and AI infrastructure

To support this expanded portfolio, NVIDIA also announced new networking switches designed to handle the increased load.

Here’s what you need to know:

- NVIDIA announced the new Quantum-X800 InfiniBand for the highest-performance requirements in AI-dedicated infrastructures, capable of end-to-end 800Gb/s throughput and utilising the NVIDIA Quantum Q3400 switch and the NVIDIA ConnectX-8 SuperNIC.

- It also announced the Spectrum-X800 Ethernet for more widespread AI-optimised networking within data centres, reaching 800Gb/s throughput by utilising the Spectrum SN5600 800Gb/s switch and the NVIDIA BlueField-3 SuperNIC.

- NVIDIA also released expanded software support for these new systems to boost AI, data processing, HPC and Cloud workloads.
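Network throughput is quoted in gigabits per second (Gb/s), while memory and storage sizes are usually given in gigabytes, so the 800Gb/s figure is easy to misread. The short Python sketch below converts between the two and estimates an idealised transfer time. The function names and the 1,000 GB payload are hypothetical, chosen purely for illustration, and the estimate ignores protocol overhead and congestion.

```python
def gbit_to_gbyte_per_s(gbit_per_s: float) -> float:
    """Convert a link rate from gigabits per second to gigabytes per second."""
    return gbit_per_s / 8  # 8 bits per byte


def ideal_transfer_seconds(payload_gb: float, link_gbit_per_s: float = 800) -> float:
    """Best-case time to move `payload_gb` gigabytes over a single link.

    Assumes the link runs at its full quoted rate with no overhead,
    which real networks will not achieve.
    """
    return payload_gb / gbit_to_gbyte_per_s(link_gbit_per_s)


if __name__ == "__main__":
    rate = gbit_to_gbyte_per_s(800)
    print(f"800 Gb/s is {rate:g} GB/s")
    # Hypothetical 1,000 GB payload, for illustration only.
    print(f"1,000 GB takes at least {ideal_transfer_seconds(1_000):g} s")
```

In other words, an 800Gb/s link moves at most 100 gigabytes per second, so a hypothetical 1,000 GB payload would need at least ten seconds on one link.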

Read more on the announcement, here.

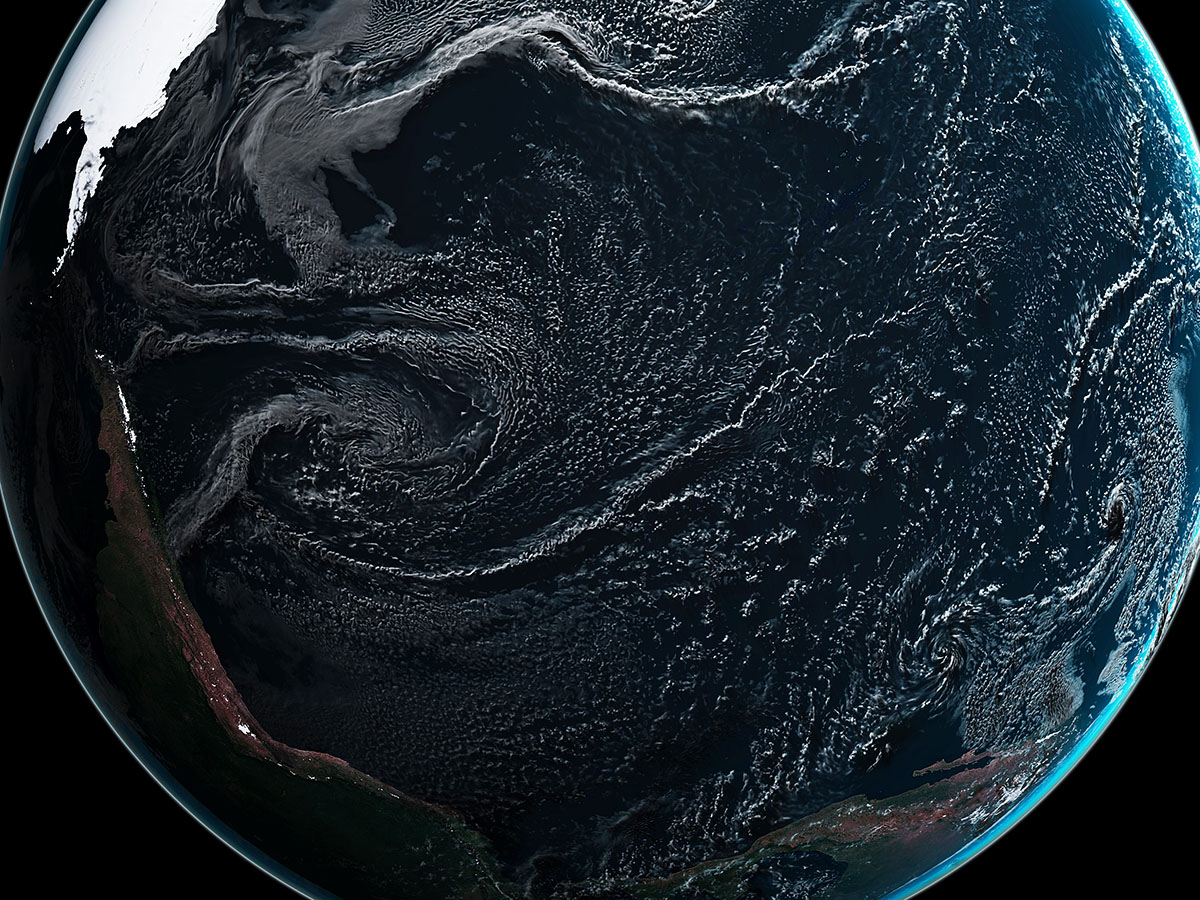

NVIDIA’s new Earth-2 digital twin for climate simulations

NVIDIA announced its Earth-2 climate digital twin cloud platform, designed to simulate and visualise weather and climate at an unprecedented scale.

Here’s what you need to know:

- Earth-2 is offered as part of the NVIDIA CUDA-X microservices, providing new cloud APIs on NVIDIA DGX Cloud. These services enable the creation of AI-powered, high-resolution simulations of weather, from the global atmosphere to local phenomena like typhoons.

- These APIs can drastically speed up the delivery of interactive simulations, reducing the time from minutes or hours to seconds.

- Earth-2 leverages a new NVIDIA generative AI model, CorrDiff, which uses diffusion modelling to generate images at 12.5x higher resolution than current models, 1,000x faster and 3,000x more energy efficiently.

Read more on the announcement, here.