DeepMind AI reduces data centre cooling energy use by 40%

Machine learning is useful in our everyday lives, in applications from smartphone assistants to image recognition and translation, but it could also help deal with some of the world’s most challenging physical problems such as energy consumption. While much has been done to stem the growth of energy use, large commercial and industrial systems like data centres consume a lot of energy and there is much more that can be done, especially given the world’s increasing need for computing power.

In a blog post, Rich Evans, Research Engineer, DeepMind, and Jim Gao, Data Center Engineer, Google, said: "Reducing energy usage has been a major focus for us over the past 10 years: we have built our own super-efficient servers at Google, invented more efficient ways to cool our data centres and invested heavily in green energy sources, with the goal of being powered 100% by renewable energy. Compared to five years ago, we now get around 3.5 times the computing power out of the same amount of energy, and we continue to make many improvements each year.

"We are excited to share that by applying DeepMind’s machine learning to our own Google data centres, we’ve managed to reduce the amount of energy we use for cooling by up to 40%."

Several months ago, Google’s data centre team and DeepMind researchers started working together to apply machine learning to their data centres. They used a system of neural networks trained on different operating scenarios and parameters, creating a more efficient and adaptive framework to understand data centre dynamics and optimise efficiency. This was done primarily by training an ensemble of deep neural networks using historical data that had already been collected by thousands of sensors within the data centre (data such as temperatures, power, pump speeds, setpoints, etc.).
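To give a feel for what "training an ensemble" on historical sensor data means, here is a minimal sketch. It is not the DeepMind system: a straight-line fit stands in for each deep neural network, bootstrap resampling stands in for whatever gives the real ensemble members their diversity, and the sensor readings, PUE values and function names are all made up for illustration.

```python
import random

def fit_line(xs, ys):
    """Least-squares fit of y = slope * x + intercept (stand-in for one network)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    var_x = sum((x - mean_x) ** 2 for x in xs)
    if var_x == 0:
        # Degenerate resample (all x identical): fall back to a constant model.
        return 0.0, mean_y
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / var_x
    return slope, mean_y - slope * mean_x

def train_ensemble(xs, ys, n_models=5, seed=0):
    """Fit each member on a bootstrap resample of the historical data."""
    rng = random.Random(seed)
    n = len(xs)
    models = []
    for _ in range(n_models):
        idx = [rng.randrange(n) for _ in range(n)]
        models.append(fit_line([xs[i] for i in idx], [ys[i] for i in idx]))
    return models

def predict(models, x):
    """Ensemble prediction: the average of the members' predictions."""
    preds = [slope * x + intercept for slope, intercept in models]
    return sum(preds) / len(preds)

# Hypothetical history: one sensor reading (x) against observed PUE (y).
sensor_readings = [10.0, 15.0, 20.0, 25.0, 30.0, 35.0]
observed_pue = [1.10, 1.11, 1.13, 1.14, 1.16, 1.18]

models = train_ensemble(sensor_readings, observed_pue)
print(predict(models, 22.0))
```

Averaging several models trained on slightly different views of the data tends to give steadier predictions than any single model, which is the general appeal of an ensemble.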

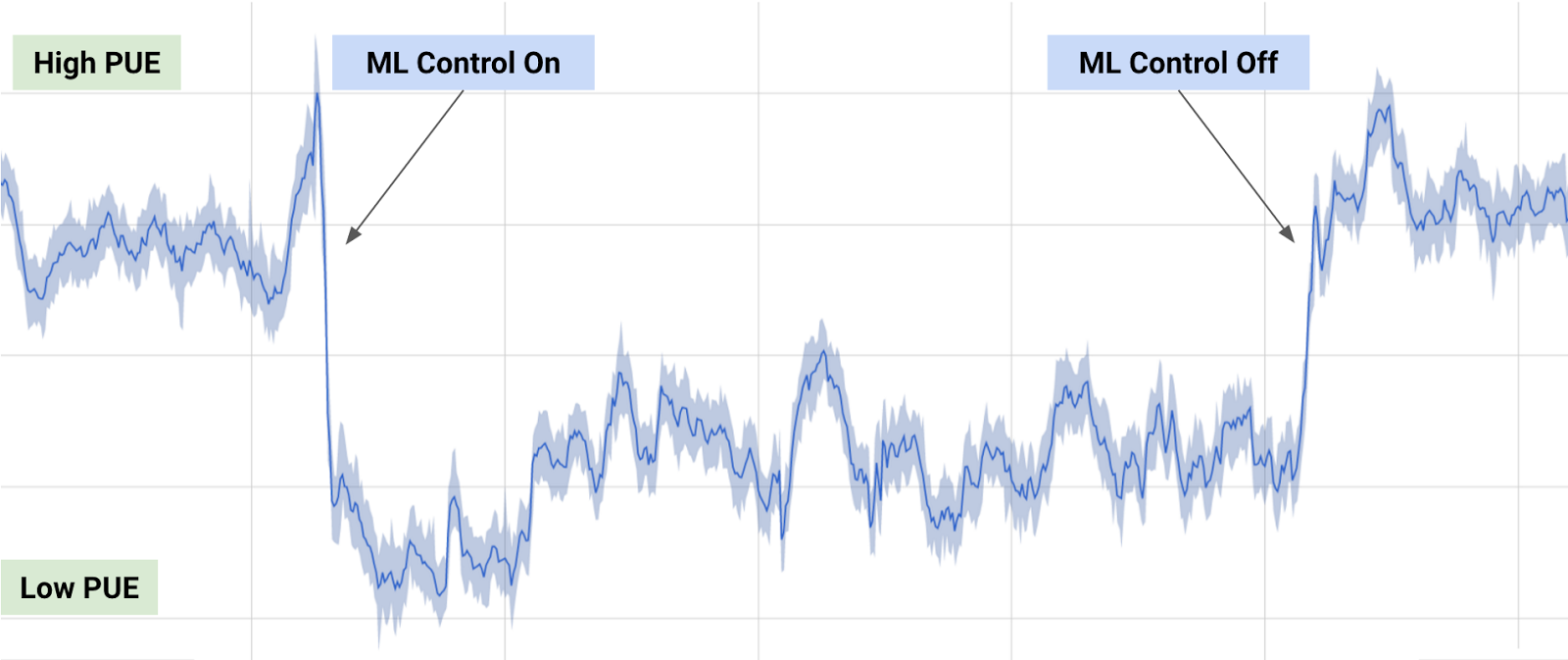

"Since our objective was to improve data centre energy efficiency, we trained the neural networks on the average future PUE (Power Usage Effectiveness), which is defined as the ratio of the total building energy usage to the IT energy usage," the blog post continued. "We then trained two additional ensembles of deep neural networks to predict the future temperature and pressure of the data centre over the next hour. The purpose of these predictions is to simulate the recommended actions from the PUE model, to ensure that we do not go beyond any operating constraints."

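The PUE definition and the role of the temperature and pressure predictions can be sketched in a few lines. This is only an illustration of the idea described in the blog post: the `Candidate` class, the `pick_action` function, the limits and all numbers are hypothetical, and the predictions are simply typed in rather than produced by trained models.

```python
from dataclasses import dataclass
from typing import List, Optional

def pue(total_building_energy_kwh: float, it_energy_kwh: float) -> float:
    """Power Usage Effectiveness: total building energy divided by IT energy."""
    if it_energy_kwh <= 0:
        raise ValueError("IT energy must be positive")
    return total_building_energy_kwh / it_energy_kwh

@dataclass
class Candidate:
    """One candidate set of cooling actions with its model predictions (hypothetical)."""
    name: str
    predicted_pue: float
    predicted_temp_c: float
    predicted_pressure_kpa: float

def pick_action(candidates: List[Candidate],
                max_temp_c: float,
                max_pressure_kpa: float) -> Optional[Candidate]:
    """Discard candidates predicted to break a constraint, then take the lowest PUE."""
    safe = [c for c in candidates
            if c.predicted_temp_c <= max_temp_c
            and c.predicted_pressure_kpa <= max_pressure_kpa]
    if not safe:
        return None  # nothing is predicted to stay within limits
    return min(safe, key=lambda c: c.predicted_pue)

# Made-up example values.
print(pue(1200.0, 1000.0))
options = [
    Candidate("aggressive", 1.09, 29.5, 140.0),  # lowest PUE, but predicted too hot
    Candidate("moderate", 1.11, 26.0, 150.0),
    Candidate("current", 1.14, 24.0, 155.0),
]
best = pick_action(options, max_temp_c=27.0, max_pressure_kpa=160.0)
print(best.name if best else "no safe option")
```

The point of the second step is that the most efficient option on paper is rejected if the temperature or pressure models predict it would push the site past its operating limits.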
Google DeepMind graph showing results of machine learning test on power usage effectiveness in Google data centres

"We tested our model by deploying on a live data centre. The graph shows a typical day of testing, including when we turned the machine learning recommendations on, and when we turned them off. Our machine learning system was able to consistently achieve a 40% reduction in the amount of energy used for cooling, which equates to a 15% reduction in overall PUE overhead after accounting for electrical losses and other non-cooling inefficiencies. It also produced the lowest PUE the site had ever seen," said Evans and Gao.

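The relationship between the two percentages can be worked through with a little arithmetic. PUE overhead is the part of PUE above 1.0, that is, the energy spent on everything other than IT equipment, expressed relative to IT energy. The sketch below assumes that only cooling energy changes and everything else in the overhead stays the same, which is a simplification rather than something the blog post states. The baseline PUE of 1.20 is a made-up figure used purely for illustration.

```python
def implied_cooling_share(cooling_reduction: float, overhead_reduction: float) -> float:
    """Share of PUE overhead attributable to cooling, assuming only cooling changed."""
    if not 0 < cooling_reduction <= 1:
        raise ValueError("cooling_reduction must be in (0, 1]")
    return overhead_reduction / cooling_reduction

def pue_after(baseline_pue: float, overhead_reduction: float) -> float:
    """New PUE after cutting the overhead (PUE - 1) by the given fraction."""
    overhead = baseline_pue - 1.0
    return 1.0 + overhead * (1.0 - overhead_reduction)

# Figures from the article: 40% less cooling energy, 15% less PUE overhead.
share = implied_cooling_share(0.40, 0.15)
print(f"Implied cooling share of overhead: {share:.1%}")

# Hypothetical baseline PUE, for illustration only.
print(f"PUE after the change: {pue_after(1.20, 0.15):.2f}")
```

Under these assumptions, cooling would account for a little over a third of the overhead, with the rest going to the electrical losses and other non-cooling inefficiencies mentioned in the quote.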
Google is planning to roll out this system more broadly and will share more details for the benefit of other data centre and industrial system operators, and ultimately the environment.