Impending technological innovations of AI in medical diagnostics

Image recognition AI has the potential to revolutionise medical diagnostics. In addition to enabling early disease detection and even the possibility of prevention, it can enhance radiologists' workflow by reducing reading time and automatically prioritising urgent cases. However, as IDTechEx has reported previously in its article 'AI in Medical Diagnostics: Key Strategies for Faster Clinical Uptake', the current value proposition of image recognition AI remains below the expectations of most radiologists.

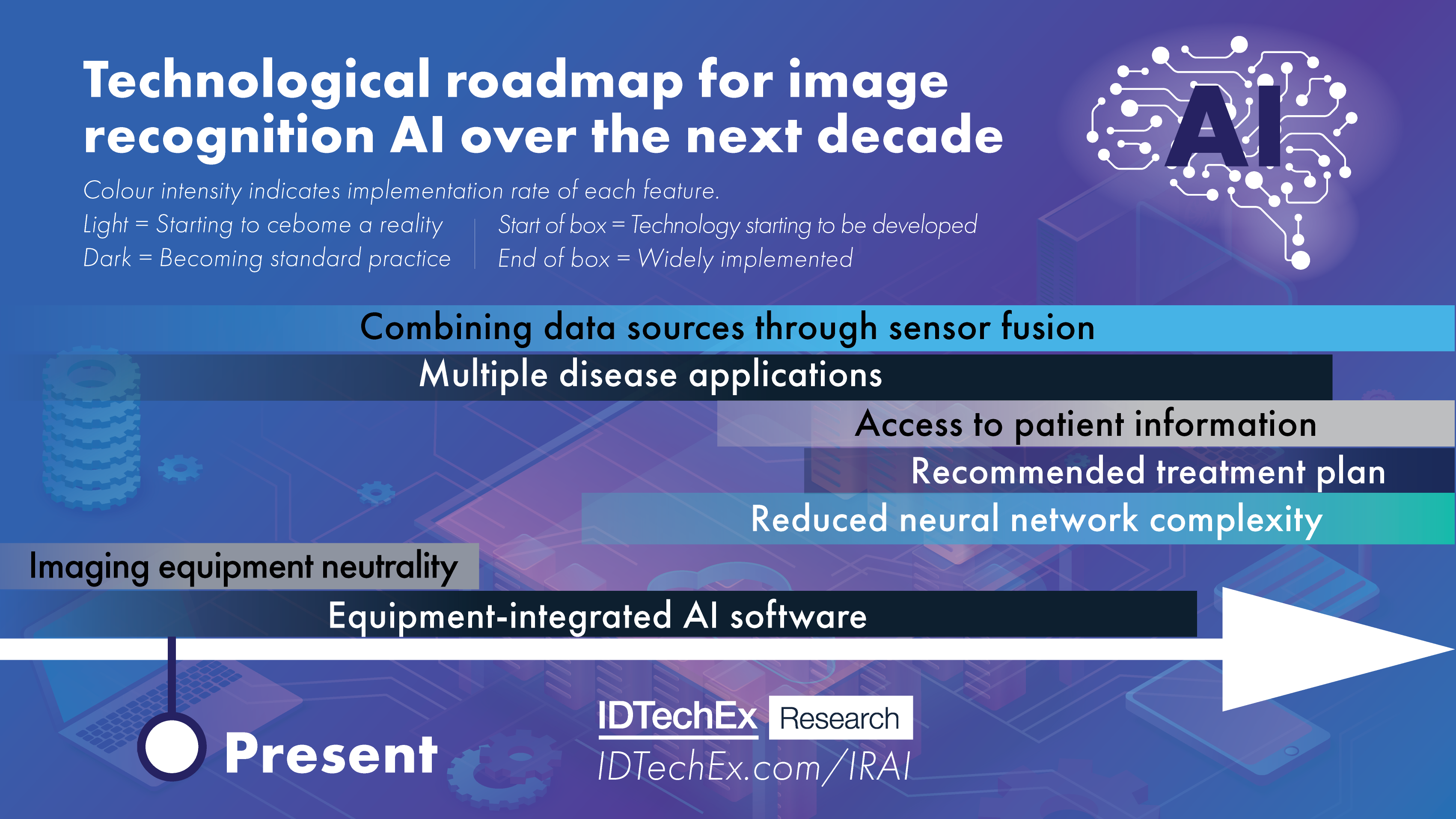

Over the next decade, AI image recognition companies serving the medical diagnostics space will need to test and implement a multitude of features to increase the value of their technology to stakeholders across the healthcare setting. This article discusses key technological issues that AI image diagnostics companies face and provides a roadmap describing when and how these issues are likely to be overcome.

This article is based on research conducted by IDTechEx in the report 'AI in Medical Diagnostics 2020-2030: Image Recognition, Players, Clinical Applications, Forecasts'. Please refer to the report for more information.

Source: 'AI in Medical Diagnostics 2020-2030: Image Recognition, Players, Clinical Applications, Forecasts'

Combining data sources through sensor fusion

Radiologists have a range of imaging methods at their disposal and may need to use more than one to detect signs of disease. For example, X-ray and CT scanning are both used to detect respiratory diseases. X-rays are cheaper and quicker, but CT scanning provides more detail about lesion pathology due to its ability to form 3D images of the chest. A chest X-ray sometimes needs to be followed up with a CT scan to investigate a suspicious lesion further, but most AI-driven analysis software can process only one or the other.

To enable efficient analysis of patient scans, image recognition AI software should be able to combine and interpret data from different imaging sources to build a more complete picture of the patient's pathology. This could generate deeper insights into disease severity and progression, giving radiologists a clearer understanding of each patient's condition.

Some AI companies are already attempting to train algorithms that combine data from different imaging methods into one comprehensive analysis, but this remains a challenge for most. Recognising signs of disease in images from multiple modalities requires a level of training far beyond the already colossal training process for single-modality image recognition AI.
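One simple way to combine modalities, sketched below under stated assumptions, is late fusion: each single-modality model scores the patient separately and the scores are merged afterwards. This is not the joint multi-modality training described above, and it is not any particular company's method; the modality names, per-modality probabilities and trust weights are hypothetical. The sketch averages the scores in log-odds space and skips any modality that was not acquired.

```python
import math
from typing import Mapping, Optional


def logit(p: float) -> float:
    """Convert a probability to log-odds, clamped to avoid infinities."""
    p = min(max(p, 1e-6), 1 - 1e-6)
    return math.log(p / (1 - p))


def sigmoid(z: float) -> float:
    """Map log-odds back to a probability."""
    return 1.0 / (1.0 + math.exp(-z))


def late_fusion(scores: Mapping[str, Optional[float]],
                weights: Mapping[str, float]) -> float:
    """Fuse per-modality lesion probabilities into a single score.

    scores:  probability from each (hypothetical) single-modality model,
             or None if that scan was not acquired for this patient.
    weights: hypothetical trust weight per modality, e.g. CT weighted above
             X-ray because it carries more 3D detail.
    Returns the weighted mean in log-odds space, mapped back to [0, 1].
    """
    num = 0.0
    den = 0.0
    for modality, p in scores.items():
        if p is None:
            continue  # modality missing: fuse whatever is available
        w = weights.get(modality, 0.0)
        num += w * logit(p)
        den += w
    if den == 0:
        raise ValueError("no usable modality scores")
    return sigmoid(num / den)
```

For example, `late_fusion({"xray": 0.6, "ct": 0.9}, {"xray": 1.0, "ct": 2.0})` returns a score between the two inputs, weighted towards the CT result; with the CT entry set to `None`, it falls back to the X-ray score alone. Late fusion is cheap because each model is trained on its own, but it cannot learn cross-modality patterns that a jointly trained model might pick up.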

From a business perspective, exploring this is currently simply not worth it for most radiology AI companies, given the sheer quantity of data sets, time and manpower required. Sensor fusion is therefore likely to remain an open challenge throughout the coming decade.

Multiple disease applications

Another important innovation will be to apply image recognition AI algorithms to multiple diseases. Currently, many AI-driven analysis tools can only detect a restricted range of pathologies. Their value in radiology practices is hence limited as the algorithms may overlook or misconstrue signs of disease that they are not trained on, which could lead to misdiagnosis. Such issues could lead to a mistrust of AI tools by radiologists, which may in turn reduce their rate of implementation in medical settings.

In the future, AI algorithms will recognise not just one but several conditions from a single image or data set (e.g. multiple retinal diseases from a single fundus image). For numerous radiology AI companies, this is already a reality. For example, DeepMind's and Pr3vent's solutions are designed to detect over 50 ocular diseases from a single retinal image, while VUNO's algorithms can detect a total of 12.
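Detecting several conditions in one image is usually framed as multi-label classification: the model produces one independent score per condition, passed through a sigmoid rather than a softmax, so that any number of conditions can be flagged at once. The sketch below assumes a hypothetical four-condition label set and hypothetical raw model outputs (logits); real products such as those mentioned above define their own label sets and per-condition thresholds. It also shows how an expert's annotations for one image become a multi-hot training target.

```python
import math
from typing import Dict, List, Sequence

# Hypothetical label set; a real product defines its own (e.g. 12 or 50+ conditions).
CONDITIONS = ["diabetic_retinopathy", "glaucoma_suspect", "amd", "vein_occlusion"]


def multi_hot(annotated: Sequence[str],
              labels: Sequence[str] = CONDITIONS) -> List[int]:
    """Turn an expert's per-image annotations into a multi-hot training target."""
    found = set(annotated)
    unknown = found - set(labels)
    if unknown:
        raise ValueError(f"unknown labels: {sorted(unknown)}")
    return [1 if label in found else 0 for label in labels]


def predict_conditions(logits: Sequence[float],
                       threshold: float = 0.5,
                       labels: Sequence[str] = CONDITIONS) -> Dict[str, float]:
    """Apply an independent sigmoid per condition and keep those above threshold.

    Because each condition is scored separately (no softmax), several
    conditions can be reported for the same image.
    """
    if len(logits) != len(labels):
        raise ValueError("one logit per condition is required")
    probs = {label: 1.0 / (1.0 + math.exp(-z)) for label, z in zip(labels, logits)}
    return {label: p for label, p in probs.items() if p >= threshold}
```

With a sigmoid per condition, a fundus image can be reported as showing, say, both diabetic retinopathy and AMD; a softmax would force the conditions to compete and favour a single answer.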

Detecting multiple pathologies from the same images requires expert radiologists to annotate every possible abnormality in each image in detail, and to repeat this process thousands or even millions of times, which is highly time-consuming and thus expensive. As a result, some companies prefer to focus on a single disease. In the long run, however, allocating resources to multiple-disease detection will be worth it for AI companies. Software capable of detecting multiple pathologies offers much greater value than software built to detect a single pathology, as it is more reliable and has wider applicability. Companies offering single-disease software will soon be forced to extend their products' application range to stay afloat in this competitive market.

All-encompassing training data

A key technical and business advantage lies in demonstrating that the software performs well across a wide range of patient demographics, as this widens its applicability. AI software must work equally well for men and women, for people of different ethnicities, and so on.
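Whether software "works equally well" across demographics can be checked by computing sensitivity (true positives divided by all diseased cases) and specificity (true negatives divided by all healthy cases) separately for each patient subgroup, rather than only over the whole test set. The sketch below assumes each test case has already been reduced to a hypothetical (subgroup, true label, predicted label) record; the group names and counts used with it are illustrative, not real results.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple

# Hypothetical record format: (subgroup, true label 0/1, predicted label 0/1)
Record = Tuple[str, int, int]


def subgroup_metrics(records: Iterable[Record]) -> Dict[str, Dict[str, float]]:
    """Compute sensitivity and specificity separately for each subgroup."""
    counts = defaultdict(lambda: {"tp": 0, "fn": 0, "tn": 0, "fp": 0})
    for group, y_true, y_pred in records:
        c = counts[group]
        if y_true == 1:
            c["tp" if y_pred == 1 else "fn"] += 1
        else:
            c["tn" if y_pred == 0 else "fp"] += 1

    result: Dict[str, Dict[str, float]] = {}
    for group, c in counts.items():
        positives = c["tp"] + c["fn"]
        negatives = c["tn"] + c["fp"]
        result[group] = {
            # NaN when a subgroup has no cases of that class in the test set
            "sensitivity": c["tp"] / positives if positives else float("nan"),
            "specificity": c["tn"] / negatives if negatives else float("nan"),
            "n": positives + negatives,
        }
    return result
```

A large gap between subgroups, such as a drop in sensitivity for one group, is the kind of signal that the training data under-represents that group. Small subgroups give noisy estimates, which is why the case count `n` is kept alongside each metric.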

When training deep learning (DL) algorithms to detect a specific disease, the training data should encompass the many types of abnormality associated with that disease. This way, the algorithm can recognise signs of the disease across a multitude of demographics, tissue types, etc. and achieve the sensitivity and specificity required by radiologists. For instance, breast cancer detection algorithms need to recognise lesions in all breast types (e.g. different breast densities). Skin cancer is another example. Historically, skin cancer detection algorithms have struggled to identify suspicious moles on darker skin tones, where changes in a mole's appearance are more difficult to detect.

These algorithms must be able to examine moles on all skin types and colours. From an image of a suspicious mole, the software should also be able to recognise the stage of disease progression based on the mole's shape, colour and diameter. If an algorithm encounters a type of abnormality that does not match any of the conditions it recognises, it may classify it as 'not dangerous' simply because it does not associate it with any condition that it knows. A diverse data set also helps to prevent bias (systematically poorer performance for patient groups that are under-represented in the training data).
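One common mitigation for the "not dangerous by default" failure described above, not taken from the article and sketched here under simple assumptions, is to let the software abstain: if its top prediction is not confident enough, the case is referred to a clinician instead of being assigned a label. The sketch uses the maximum softmax probability as the confidence score, a well-known but imperfect baseline, since networks can be overconfident on unfamiliar inputs. The label names and the 0.7 threshold are hypothetical.

```python
import math
from typing import List, Sequence


def softmax(logits: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a model's raw class scores."""
    m = max(logits)
    exps = [math.exp(z - m) for z in logits]
    total = sum(exps)
    return [e / total for e in exps]


def triage(logits: Sequence[float], labels: Sequence[str],
           min_confidence: float = 0.7) -> str:
    """Return the predicted label, or 'refer_for_review' when the model is unsure.

    min_confidence is a hypothetical threshold; in practice it would be tuned
    on validation data to meet the required sensitivity.
    """
    if len(logits) != len(labels):
        raise ValueError("one logit per label is required")
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return "refer_for_review"  # abstain rather than default to 'not dangerous'
    return labels[best]
```

The design choice here is that low confidence produces an explicit referral rather than silently falling into a benign class, so an unfamiliar lesion is at least put in front of a human.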

Reduced neural network complexity

The architecture of AI models used in medical image analysis today tends to be complex, which extends the development process and increases the computing power required to run the software. Companies developing the software must ensure that their computing power is sufficient to support customers' activities on their servers, which requires the installation of expensive graphics processing units (GPUs).

In the future, reducing the number of layers while maintaining or improving algorithm performance will represent a key milestone in the evolution of image recognition AI technology. It would decrease the computing power required, shorten the time needed to generate results thanks to shorter processing pathways, and ultimately reduce server costs for AI companies.
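A rough way to see why fewer layers lowers the compute bill is to count multiply-accumulate operations (MACs). For a standard 2D convolution with C_in input channels, C_out output channels, a k × k kernel and an H_out × W_out output map, the count is H_out × W_out × C_out × C_in × k², ignoring bias terms. The layer shapes below are hypothetical and describe no specific product; compute also depends on channel width and image resolution as well as depth, and a shallower network only helps if it keeps the accuracy radiologists need.

```python
from typing import List, Tuple

# Hypothetical layer description:
# (in_channels, out_channels, kernel_size, out_height, out_width)
ConvSpec = Tuple[int, int, int, int, int]


def conv_macs(c_in: int, c_out: int, k: int, h_out: int, w_out: int) -> int:
    """Multiply-accumulates for one standard (ungrouped) 2D convolution, bias ignored."""
    return h_out * w_out * c_out * c_in * k * k


def total_macs(layers: List[ConvSpec]) -> int:
    """Sum the convolution cost over a stack of layers."""
    return sum(conv_macs(*spec) for spec in layers)


# Hypothetical single-channel 256x256 input, e.g. a greyscale scan slice.
deeper = [
    (1, 32, 3, 256, 256),
    (32, 32, 3, 256, 256),
    (32, 64, 3, 128, 128),
    (64, 64, 3, 128, 128),
]

# The same network with the two repeated 3x3 layers removed.
shallower = [
    (1, 32, 3, 256, 256),
    (32, 64, 3, 128, 128),
]

if __name__ == "__main__":
    print(f"deeper:    {total_macs(deeper):,} MACs")
    print(f"shallower: {total_macs(shallower):,} MACs")
```

Under these assumptions the deeper stack needs about 1.53 billion MACs per image and the shallower one about 0.32 billion, roughly 4.8 times fewer.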

Imaging equipment neutrality

The installation of AI software for medical image analysis can sometimes represent a significant change to hospitals' and radiologists' workflow. Although many medical centres welcome the idea of receiving decision support through AI, the reality of going through the installation process can be daunting enough to deter certain hospitals.

As a result, software providers put a lot of effort into making their software universally compatible so that it fits directly into radiologists' setups and workflows. This will become an increasingly desirable feature of image recognition AI, as customers favour software that is compatible with all major vendors, brands and models of imaging equipment. Broadly speaking, this is already a reality: most FDA-cleared algorithms are vendor neutral, meaning that they can be applied to images from most scanner brands and models.

Access to patient information and recommended treatment plan

Today, AI algorithms typically have access only to medical imaging data. As such, the patient's condition and medical history are unknown to the software during analysis. Because of this limitation, the software is restricted to locating abnormalities, providing quantitative information and, in some cases, assessing the risk of disease.

While these insights can be highly valuable to doctors, particularly when they are produced faster and more accurately than by human doctors, AI can do more. To use the full capabilities of AI and provide additional value in medical settings, software developers must also focus on post-diagnosis support. Although this remains a rare feature of medical image recognition AI as of mid-2020, companies are starting to explore it.

Some skin cancer detection apps, such as those from MetaOptima and SkinVision, provide actionable recommendations after an assessment is made. These include scheduling follow-up or biopsy appointments and setting reminders for the next skin check. Post-diagnosis support is becoming a desirable feature as it complements the doctor's evaluation, almost like a second opinion, and thus gives the doctor more confidence in their assessment. To learn more, please refer to IDTechEx's report 'AI in Medical Diagnostics 2020-2030: Image Recognition, Players, Clinical Applications, Forecasts'.

Ultimately, doctors seek a solution that helps them establish viable treatment strategies. To achieve this, the software needs information from patients' electronic health records, clinical trial results, drug databases and more, which goes beyond simple image recognition. Most companies currently have no confirmed plans to address this. Implementing such systems will remain a work in progress for the next decade and beyond, owing to the technical challenges of making various hospital and external databases overlap and interoperate.

Equipment-integrated AI software

The idea of integrating image recognition AI software directly into imaging equipment (e.g. MRI or CT scanners) is gaining momentum, as it would facilitate the automation of medical image analysis. In addition, it avoids connectivity problems, as no cloud access is required. This is becoming increasingly common: recent examples include the integration of Lunit's INSIGHT CXR software into GE Healthcare's Thoracic Care Suite and the embedding of MaxQ AI's Intracranial Haemorrhage (ICH) technology into Philips' CT systems.

A downside of integrating AI software into imaging equipment is that the hospital or radiologist has no flexibility to choose the provider or software that best suits their needs. The value of this approach depends on the performance level and capabilities of the integrated AI software, and on whether these match the user's requirements. If they do not, hospitals are likely to favour cloud-based software.

From the equipment manufacturer's point of view, the business advantage of integrating image recognition AI into their machines is clear. The enhanced analytical capabilities provided by the AI software would give original equipment manufacturers (OEMs) a competitive edge by making their machines more appealing to hospitals seeking to boost revenue by maximising the number of patients seen each day.

From a software provider's perspective, the situation is less clear. AI radiology companies are currently considering the advantages of entering exclusive partnerships with manufacturers versus making their software available as a cloud-based service. IDTechEx expects a divide to arise among AI radiology companies in the next 5-10 years. Some will choose the safe option of selling their software exclusively to large imaging equipment vendors due to the security that long-term contracts can provide. Others will lean more towards continuing with the current business model of catering directly to radiology practices.

IDTechEx's report 'AI in Medical Diagnostics 2020-2030: Image Recognition, Players, Clinical Applications, Forecasts' provides a detailed analysis of emerging solutions and innovations in the medical image recognition AI space. It cuts through the technological landscape from both a commercial and technical perspective by benchmarking the products of over 60 companies across 12 disease applications according to performance, market readiness, technical maturity, value proposition and other factors. In-depth insights into the company and market landscape are also provided, including ten-year forecasts for each application.