Making 4G networks IoT-ready

Most Internet of Things (IoT) devices will communicate using wireless machine-to-machine communications technologies. Currently 2G and 3G technologies dominate the cellular IoT market, but the future belongs to fourth-generation (4G) technologies such as NB-IoT (narrowband IoT) and eMTC (enhanced Machine Type Communications). Joerg Koepp, Technology Marketing at Rohde & Schwarz, explains.

4G networks will enable mobile operators to address a wider share of the wireless IoT market. Features such as Power Saving Mode (PSM), extended Discontinuous Reception (eDRX) cycles and Coverage Enhancement (CE) can tune the wireless interface to the needs of IoT applications. To meet the specific performance and availability requirements, all communications layers must work together perfectly. This creates a need for end-to-end application testing to optimise performance parameters, for example power consumption and reaction times.

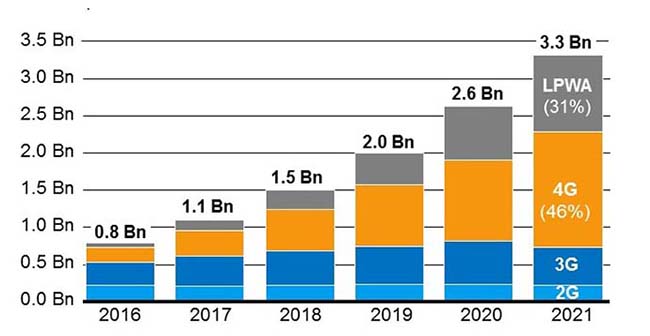

About 60% of today’s cellular IoT devices use 2G or 3G technologies (see Figure 1 - above). Typical applications include fleet management, ATM banking services and personal health monitoring, all of which generate little data traffic.

Because 4G LTE is optimised for mobile broadband, IoT applications have generated negligible demand for LTE over the last few years. Some aspects of LTE, however, make it increasingly attractive. One is its advantages in spectral efficiency, latency and data throughput. Another is global accessibility: according to GSMA, 4G LTE networks covered more than 60% of the global population in 2016. By the end of the decade, developing countries are expected to reach 60% coverage. The long-term availability of LTE is another consideration. 2G has been around for over 25 years, and operators are considering whether to discontinue the service. Therefore, the industry is looking for LTE solutions that are competitive with 2G in terms of cost, power consumption and performance.

3GPP standardisation for IoT

Optimisations for Machine Type Communications (MTC) have been developed within the 3GPP framework. One example is protecting the network when several thousand devices try to connect simultaneously, as could occur when the power grid comes back after a power failure. Overload mechanisms for reducing signalling traffic have been introduced to handle such extreme signalling loads. Many IoT applications (sensor networks are an example) send data infrequently and do not need to-the-second timing.

These devices can be configured to accept longer delays during connection set-up (delay tolerant access). Rel. 10 includes a process that permits the network to reject connection requests initially and delay resubmission (extended wait time). With 3GPP Rel. 11, access can be controlled by setting up classes. The network transmits an extended access barring (EAB) bitmap that identifies which classes are permitted access. These processes ensure reliable and stable operation of IoT applications without endangering the mobile broadband service.
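The class-based check can be illustrated with a minimal sketch. It assumes a 10-bit bitmap covering access classes 0 to 9, with the leftmost bit referring to class 0 and a set bit meaning that the class is barred. The function name and the string representation of the bitmap are hypothetical and chosen only for illustration.

```python
def is_barred_by_eab(access_class: int, eab_bitmap: str) -> bool:
    """Return True if a device in `access_class` must not attempt access.

    Assumptions of this sketch (not taken from the article):
    - `eab_bitmap` is a string of ten '0'/'1' characters,
    - character i refers to access class i (0 to 9),
    - '1' means the class is currently barred.
    """
    if len(eab_bitmap) != 10 or set(eab_bitmap) - {"0", "1"}:
        raise ValueError("bitmap must be ten characters of '0' or '1'")
    if not 0 <= access_class <= 9:
        raise ValueError("access class must be in the range 0 to 9")
    return eab_bitmap[access_class] == "1"


# Illustrative bitmap: only access classes 3 and 7 are barred.
bitmap = "0001000100"
for ac in (0, 3, 7):
    state = "barred" if is_barred_by_eab(ac, bitmap) else "permitted"
    print(f"access class {ac}: {state}")
```

A device that finds its class barred would simply defer its connection attempt, which is how the network spreads the load from a large number of delay tolerant devices.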

Low cost, low power devices

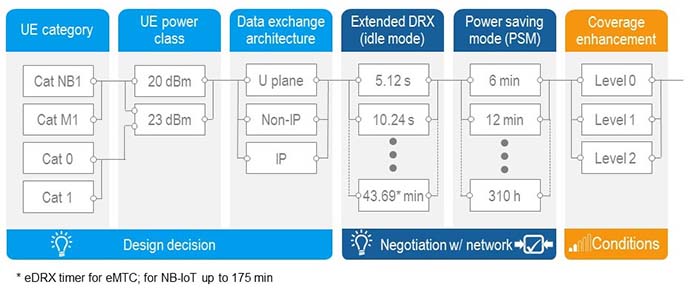

In Rel. 12, the 3GPP committee started working on optimised solutions addressing requirements such as low data traffic, low power consumption and low costs. It quickly became clear that there is no single, simple solution. The requirements for applications such as container tracking, waste bin management, smart meters, agricultural sensors and sports and personal health trackers are too varied. Rel. 12 concentrated on reduced power consumption and cost effective modems. The results were PSM, which is important for battery operated devices, and a new LTE device category 0, which targets 50% less complexity than an LTE category 1 modem.

PSM is a kind of deep sleep mode. The receiver is literally switched off for a while so that the device is not accessible via paging, but the modem is still registered on the network. Consequently, PSM is not suitable for applications that expect a time critical reaction. Applications that use PSM must tolerate this behaviour and the design process must include the specification of optimal timer values for idle mode and power saving mode.

LTE category 0 was a first attempt at permitting significantly less expensive LTE modems. Modem complexity was reduced by lowering the data rate to 1 Mbps. Manufacturers can also omit full duplex mode, in which case the device does not require duplex filters to prevent transmitter/receiver interference.

Rel. 13 introduced LTE category M1 as part of the work on eMTC, which added cost reduction measures, especially lower bandwidths in the uplink and downlink, lower data rates and reduced transmit power.

NB-IoT was developed in parallel with LTE category M1. Its profile included extremely low power and costs as well as improved reception in buildings and support for devices with very little data traffic. LTE category NB1 has a 180 kHz bandwidth and can be deployed in unused LTE resource blocks (in-band), in the free spectrum between neighbouring LTE carriers (guard band) or standalone, for example in unused GSM carriers.

eDRX is another power reduction feature introduced in Rel. 13. In idle mode, the modem periodically goes into receive mode to obtain paging messages and system status information. The DRX timer determines how often this occurs. Currently, the longest interval for the idle DRX timer is 2.56 seconds. That is fairly frequent for a device that expects data only every 15 minutes and has relaxed delay requirements. eDRX allows a much longer time interval of up to 2.9 hours, depending on the application requirements and the network support.
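The effect of the cycle length is easy to quantify. The following sketch simply counts how often a modem would wake up per day to listen for paging, using the 2.56-second DRX interval and the 2.9-hour eDRX interval quoted above. The function name is hypothetical, and the calculation ignores everything except the wake-up count.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def paging_wakeups_per_day(cycle_s: float) -> float:
    """Number of paging occasions per day for a given (e)DRX cycle length."""
    if cycle_s <= 0:
        raise ValueError("cycle length must be positive")
    return SECONDS_PER_DAY / cycle_s


drx = paging_wakeups_per_day(2.56)         # about 33,750 wake-ups per day
edrx = paging_wakeups_per_day(2.9 * 3600)  # about 8 wake-ups per day

print(f"DRX  (2.56 s): {drx:,.0f} wake-ups per day")
print(f"eDRX (2.9 h):  {edrx:.1f} wake-ups per day")
```

The price of the longer cycle is reachability: a server-initiated message may wait for up to one full cycle before the device can be paged.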

PSM and eDRX differ in the time allowed to remain in the sleep mode and in the procedure to switch into receive mode. To be reachable again, a device using PSM must first go into active mode. A device using eDRX can stay in idle mode and go directly to receive mode without additional signalling.

eMTC and NB-IoT also offer coverage enhancement features for use cases such as smart meters. One principle is redundant transmission – sending data repeatedly over time. This, of course, has a negative impact on power consumption.
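The trade-off can be sketched with a simple idealised model. It assumes that every repetition combines perfectly at the receiver, so that N repetitions improve the signal-to-noise ratio by 10·log10(N) dB, while the energy spent on the transmission grows linearly with N. Real gains are lower, and all names and figures below are illustrative assumptions, not values from a specification.

```python
import math


def ideal_repetition_gain_db(repetitions: int) -> float:
    """Upper bound on the SNR gain from combining `repetitions` copies."""
    if repetitions < 1:
        raise ValueError("at least one transmission is required")
    return 10 * math.log10(repetitions)


def tx_energy_joules(supply_power_w: float, duration_s: float,
                     repetitions: int) -> float:
    """Energy drawn while sending one message `repetitions` times."""
    return supply_power_w * duration_s * repetitions


# Illustrative values: 0.5 W drawn while transmitting, 0.1 s per copy.
for n in (1, 8, 64):
    gain = ideal_repetition_gain_db(n)
    energy = tx_energy_joules(0.5, 0.1, n)
    print(f"{n:3d} repetitions: up to {gain:4.1f} dB gain, {energy:.2f} J")
```

Every additional 3 dB of coverage in this model doubles the energy per message, which is why the number of repetitions should be matched to the actual radio conditions of the device.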

Based on more concrete market requirements, 3GPP drives further improvements with feMTC and eNB-IoT. In Rel. 14 we can expect two new LTE categories - Cat NB2 and Cat M2. Important topics addressed are, for example, higher data rates, lower latency, better positioning, multi-cast capabilities to allow software upgrades and improvements for VoLTE over Cat M devices.

End-to-end application testing

Theoretical calculations about battery lifetime are useful, but systems can behave differently in reality, and behaviours can also change over time. The overall communications behaviour of the end-to-end application – including communications triggers (client initiated, server initiated, periodic), delay requirements, network configuration, data throughput and mobility – needs to be considered (see Figure 2 - above).
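A theoretical battery lifetime calculation of this kind can be as simple as the following sketch. It assumes a device with only two states (sleeping and actively reporting), a constant current in each state, and an ideal battery with no self-discharge. The function name, parameters and example figures are all hypothetical.

```python
SECONDS_PER_DAY = 24 * 60 * 60


def battery_life_years(capacity_mah: float,
                       sleep_current_ma: float,
                       active_current_ma: float,
                       active_s_per_report: float,
                       reports_per_day: int) -> float:
    """Estimate battery life from a two-state duty cycle model."""
    active_s = active_s_per_report * reports_per_day
    sleep_s = SECONDS_PER_DAY - active_s
    if sleep_s < 0:
        raise ValueError("active time exceeds 24 hours per day")
    # Charge used per day in mAh (current in mA times time in hours).
    mah_per_day = (active_current_ma * active_s
                   + sleep_current_ma * sleep_s) / 3600
    return capacity_mah / mah_per_day / 365


# Illustrative device: 5000 mAh battery, 5 uA sleep current, 100 mA while
# active, 10 s of activity per report.
for reports in (24, 4, 1):
    years = battery_life_years(5000, 0.005, 100, 10, reports)
    print(f"{reports:2d} reports per day: roughly {years:.1f} years")
```

Such a model shows how strongly the result depends on the time spent in the active state, which is exactly the quantity that network timers, retransmissions and application behaviour change in practice.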

The challenge for developers is to use PSM and eDRX in the most efficient way. This requires analysis of anything that influences power consumption, beginning with both device and server-side applications. Also included are the behaviours of the mobile network and IP network.

RF performance, battery consumption, protocol behaviour and application performance should also be considered. Initial work can be done on paper, but it is very useful to verify the results under controlled, simulated, yet realistic network conditions. This verifies model assumptions and reveals the impact of real world conditions. Scenarios in which the network does not support a feature or uses different timers can be verified.

A unique test solution

Manufacturers of test and measurement equipment are addressing the growing demand for test, verification and optimisation of end-to-end applications, which goes beyond RF and protocol testing. Rohde & Schwarz offers a solution based on the R&S CMW500/290 multi-radio communication test platform, the R&S CMWrun sequencer tool and the R&S RT-ZVC02/04, a multi-channel power probe (above right). It gives users a view of different parameters such as mobile signalling traffic, IP data traffic or power consumption on one platform. The platform simultaneously emulates, parameterises and analyses wireless communications systems and their IP data throughput – something that cannot be done in real networks.

R&S CMWrun allows straightforward configuration of test sequences without requiring programming knowledge for controlling the instrument remotely. It also provides full flexibility when configuring parameters and limits for the test items. One of the key differentiators of this solution is the intuitive way the user can combine and run applications in parallel with common event markers out of signalling or IP activities. The solution is able to show the power consumption based on very accurate power measurements from up to four independent measurement channels.

For example, in end-to-end application tests, synchronised traces show current drains and IP data throughput. During analysis, synchronised event markers indicating signalling events or IP status updates are displayed in both graphs. This ensures a deeper testing level where the user can see the impact of a signalling or IP event on the current drain and IP throughput, making it easier to understand the dependencies and to optimise the application parameters.
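The idea of relating event markers to current drain can be sketched in a few lines of post-processing. The example assumes that a current trace has been exported as timestamped samples and that two event markers delimit the interval of interest. It does not use any R&S CMWrun interface, and the function name and sample data are hypothetical.

```python
def charge_between_markers(times_s, currents_ma, start_s, end_s):
    """Charge in mA·s drawn between two markers (trapezoidal rule).

    Only samples with start_s <= t <= end_s are used, so the markers are
    assumed to coincide with, or lie close to, sample timestamps.
    """
    points = [(t, i) for t, i in zip(times_s, currents_ma)
              if start_s <= t <= end_s]
    total = 0.0
    for (t0, i0), (t1, i1) in zip(points, points[1:]):
        total += 0.5 * (i0 + i1) * (t1 - t0)
    return total


# Illustrative trace: the device wakes up, sends data, then sleeps again.
times = [0.0, 1.0, 2.0, 3.0, 4.0]
currents = [0.0, 10.0, 10.0, 10.0, 0.0]

# Hypothetical markers: connection set-up at t = 1 s, release at t = 3 s.
print(charge_between_markers(times, currents, 1.0, 3.0), "mA·s")
```

Comparing this figure across different timer settings or application behaviours gives a direct measure of what each signalling or IP event costs in battery charge.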

The starting point could be to consider overall communications behaviour, e.g. the number of IP connections, transmitted messages or communications and signalling events. It could also be interesting to see the power consumption in different activity states, in eDRX or PSM status, and in the different power domains of the device. Later it would be useful to tune the related parameters for eDRX or PSM and possibly the application behaviour. Finally, it could be helpful to analyse different scenarios reflecting possible real world situations. End-to-end application testing is therefore becoming more and more important for meeting such challenging application requirements as a ten year battery lifetime.