Cobots are set to revolutionise manufacturing

Are cobots that work alongside humans, rather than replacing them, the answer to today’s manufacturing challenges? Ian Ferguson, VP of Marketing and Strategic Alliances at Lynx Software Technologies, looks at how such an approach can be made safe and secure.

Before the COVID pandemic, there was a lot of discussion about the threat that robots would pose to jobs, particularly in manufacturing environments. During the pandemic, robots were deployed for use cases that helped keep humans separated from each other, for example delivering medications to patients in hospitals. This has led to increased acceptance of automated platforms.

Some tasks, such as inventory counting, are deemed too dangerous, laborious or repetitive for humans to carry out, and this is where robots can deliver immense improvements in efficiency and accuracy. Despite all the claims around the capabilities of artificial intelligence, these systems still lack critical thinking and creativity. I think, therefore, that rather than an either/or situation, we will see an increasing number of use cases where humans work closely with a robot to create products or perform tasks together.

The majority of industrial robots deployed in manufacturing facilities are expensive, static and large, and perform a specific function very effectively. The ROI calculations are such that these systems are only deployed in large-scale facilities. We will see these systems add vision systems, additional sensors and machine learning so they can make some adjustments to how they perform a specific task (for example, correcting poor spot welds).

There is a lot of excitement around the new class of collaborative robots, also known as ‘cobots’, which will be more configurable, more cost effective and, in some cases, mobile. Rather than repeating one task tens or hundreds of millions of times, these systems can be reprogrammed to support small- and mid-sized production runs. Unlike traditional industrial robots, which are isolated from people, cobots work ‘hand-in-hand’ with human workers. The key will be rethinking manufacturing (and indeed logistics) processes to harness the strengths of both the robot and the human.

There are three main elements of technology that need to be addressed to enable this revolution, namely:

System cost reduction

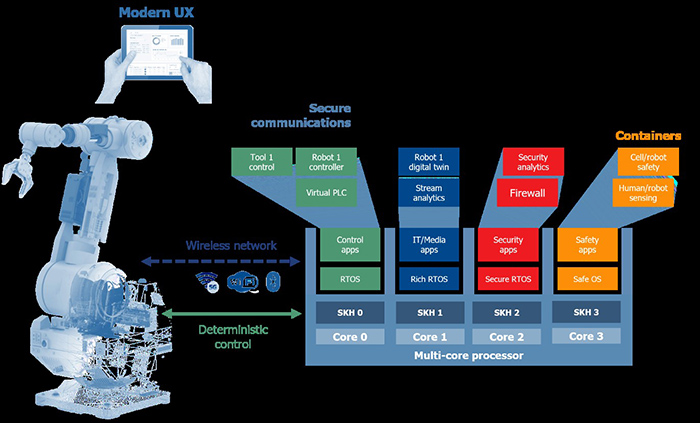

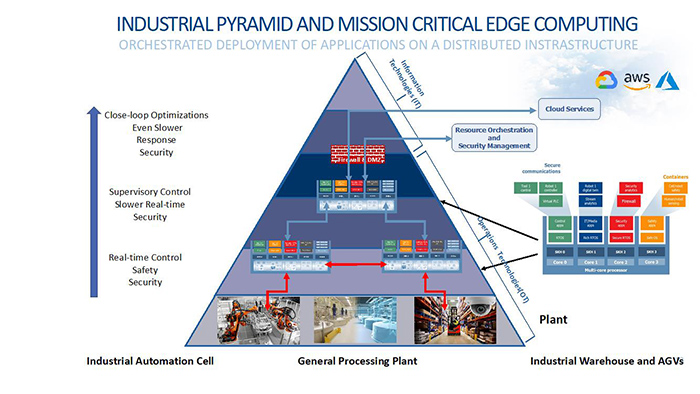

The drive to reduce the cost, power and footprint of electronics is consolidating functionality that used to be implemented on multiple systems onto a single board (and, in an increasing number of cases, a single heterogeneous multicore processor).

Impact: These systems need to run rich operating systems like Linux and Windows while also guaranteeing the real-time behaviour of the it-simply-must-always-respond-this-way elements of the platform. These systems are referred to as mixed-criticality systems.

Real-time systems based on a single-core processor (SCP) are well understood in the industry, which has adopted a real-time system engineering process built on the constant worst-case execution time (WCET) assumption. This assumption states that the measured worst-case execution time of a software task executed alone on a single core remains the same when that task runs together with other tasks.

This fundamental assumption is the foundation for schedulability analysis, which determines whether a scheduling sequence can be found that completes all the tasks within their deadlines. This approach breaks down on multicore processors, since system resources like memory and I/O are shared between applications running concurrently on different cores. The resulting ‘interference’ can cause spikes in worst-case execution times.
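To make the point concrete, here is a minimal sketch of one common form of schedulability analysis: response-time analysis for fixed-priority, pre-emptive scheduling on a single core. Everything in it is an illustrative assumption rather than something taken from a real cobot: the three tasks, their timings in microseconds, rate-monotonic priorities (shorter period means higher priority), deadlines equal to periods, and the modelling of multicore interference as a flat percentage added to every WCET. The function names `response_times` and `inflate` are hypothetical.

```python
from typing import List, Optional, Tuple

# A task is (wcet, period) in microseconds; the deadline is assumed equal to the period.
Task = Tuple[int, int]


def ceil_div(a: int, b: int) -> int:
    """Integer ceiling division, avoiding floating-point rounding."""
    return -(-a // b)


def response_times(tasks: List[Task]) -> List[Optional[int]]:
    """Worst-case response time per task, in rate-monotonic priority order.

    Iterates R = C_i + sum(ceil(R / T_j) * C_j) over all higher-priority
    tasks j until R stops changing. None marks a task that misses its deadline.
    """
    ordered = sorted(tasks, key=lambda task: task[1])  # shortest period first
    results: List[Optional[int]] = []
    for i, (wcet, period) in enumerate(ordered):
        higher = ordered[:i]
        r = wcet
        while True:
            nxt = wcet + sum(ceil_div(r, t) * c for c, t in higher)
            if nxt > period:   # response time exceeds the deadline
                results.append(None)
                break
            if nxt == r:       # fixed point reached
                results.append(r)
                break
            r = nxt
    return results


def inflate(tasks: List[Task], percent: int) -> List[Task]:
    """Assumed interference model: every WCET grows by a flat percentage."""
    return [(ceil_div(c * (100 + percent), 100), t) for c, t in tasks]


# Hypothetical task set: WCETs measured with each task running alone.
BASE: List[Task] = [(100, 400), (200, 600), (300, 1200)]

if __name__ == "__main__":
    print(response_times(BASE))               # [100, 300, 1000]
    print(response_times(inflate(BASE, 30)))  # [130, 390, None]
```

With the WCETs measured in isolation, every task in this made-up set meets its deadline. If contention for shared memory and I/O stretches each WCET by an assumed 30 per cent, the lowest-priority task no longer does, even though nothing in the task code has changed. Real interference is rarely a uniform percentage, so this illustrates only why the constant WCET assumption matters, not how to bound interference on an actual multicore part.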

Safety and security

Both of these aspects are important. In most manufacturing environments, it is likely that the cobot will not be directly connected to the outside world. These systems will, however, be connected inside the factory, and there needs to be protection against breaches, whether accidental or deliberate, from someone or something gaining access to the cobot directly or via a network. I think the primary focus here is one of safety. Software is not perfect. Hardware can fail.

Impact: Since humans are now in closer proximity to the robot, the primary challenge for system architects is ensuring that these systems are safe in all situations. The system architecture needs to isolate applications from each other so that a problem with one, whether caused accidentally or by a malicious attack, will not result in the system misbehaving in a way that endangers life.

Applications should not share areas of memory. Importantly, there should be no reliance on a single operating system to manage the hardware resources. This responsibility should be distributed to improve the resilience of the system to failures. Hypervisor technology is increasingly being used to provide this separation. System manufacturers are also implementing code that cannot be directly accessed (and therefore potentially modified) by third-party applications.

Localised decision making

For latency, network availability and privacy reasons, robots will usually use local computing resources as opposed to accessing the cloud.

Impact: Many are now using the phrase ‘edge computing’ for this. The edge is really the first place back from where the data is created, where decisions are made on multiple streams of information. This might be in the robot itself or it might be in an on-premises server blade or gateway. Increasingly, this will blend data from information technology (IT) and operational technology (OT) networks, which have historically been kept separate. Combining information from the robot and its surroundings can improve system reliability and reduce scrap.