Spearheading the autonomous driving revolution

At the recent electronica exhibition, Electronic Specifier Editor Joe Bush caught up with Chris Jacobs, Vice President, Autonomous Transportation & Safety at Analog Devices (ADI), to find out what technologies the company is focusing on in its drive towards the next level of autonomous driving.

Automotive certainly occupies a central role in ADI’s business. With 25 years’ experience in the sector, and with around $1bn of the $6bn company devoted to it, ADI is well placed within a fast-paced industry. Way back in 1993, ADI introduced the first monolithic MEMS airbag sensor, two years before the US Congress made airbag sensors mandatory on all new automobiles. The company also has over 15 years’ experience in 24GHz and 77/79GHz automotive RADAR.

“What we do is try and invest in front of the curve,” said Jacobs. “Automatic emergency braking, for example, will be a standard fitment on automobiles in 2022, and that is a RADAR-based solution.”

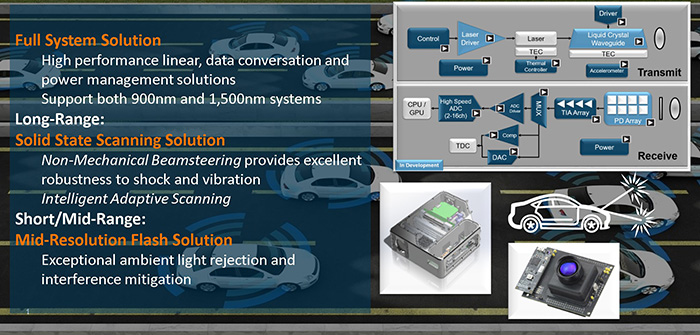

ADI’s investment strategy has been bolstered by two acquisitions in recent years. In 2016 the company purchased Vescent Photonics, a developer of liquid crystal beam steering technology. Jacobs added: “It’s a fully solid-state beam steering technology that is unlike MEMS mirrors, and it’s not an optical phased array. It is based largely on the LCD technology that you see in your monitors today, and this will allow us to hit a reasonable manufacturing price point in the future.”

Then earlier this year ADI acquired industrial RADAR company Symeo, based in Germany, which develops technology that ADI feel will be appropriate for automotive applications.

ADAS today and tomorrow

If you look at the technology on the average car today, it will feature plenty of ultrasonic sensors for parking, and perhaps a backup camera. There are passive safety MEMS devices for crash sensing and a gyroscope for stability control. There may also be front-facing RADAR for adaptive cruise control, if you’re prepared to fork out a few thousand dollars for that particular function.

If we look into the future, however, we see a very different picture. The environment that vehicles are being asked to cope with is going to become a lot more complicated. Jacobs continued: “As a result, we are seeing a huge increase in the number of sensors in the car. I think that RADAR is going to become the utility player in this area over the next decade. In the past a lot of the investment, patents and papers have focused on camera technology, image processing and so on, which has brought about a huge leap forward in terms of capability.

“In the next decade I think you’ll see a lot more innovation in RADAR, addressing issues that cameras can’t. Cameras can obviously read signs and see colours, but at night or in inclement weather they face challenges.”

There are current challenges for LIDAR around cost, power and reliability, but Jacobs predicted that by the mid-2020s the technology will occupy a much stronger position in the market, as it is ideal for long-range scanning applications.

“If you think of it as a beam steering technology, where you have a degree of freedom in X and Y, you can get a very fine resolution image at a long distance or, alternatively, a lower resolution image with a wider field of view at shorter range. And this technology will be able to scale with different use cases.”

Scanning technology has an advantage over flash technology. In flash systems the laser has to illuminate the entire scene at once. Given the limits on output power, each point in the scene receives only a fraction of the light, which leads to shorter-range performance. In scanning systems the light is focused on a very small area, so greater ranges are achieved with similar output power.
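The range penalty of flash illumination can be sketched with a simple scaling argument. This is a minimal model, not ADI's analysis: it assumes the same total pulse energy for both systems, an extended target that fills the beam (so the return falls off as 1/R²) and a fixed detection threshold, which makes maximum range proportional to the square root of the energy each point receives. The 100-spot field is a hypothetical figure chosen for illustration.

```python
import math

def max_range_scale(energy_fraction: float) -> float:
    """Relative maximum range when a point receives `energy_fraction` of the
    pulse energy, assuming the return falls off as 1/R**2 (extended target
    filling the beam) and a fixed detection threshold."""
    if not 0 < energy_fraction <= 1:
        raise ValueError("energy_fraction must be in (0, 1]")
    # Signal ~ E * rho / R**2 = threshold  =>  R_max ~ sqrt(E)
    return math.sqrt(energy_fraction)

# Hypothetical comparison: a flash system spreads one pulse over the same
# field that a scanner covers with 100 separate spots.
spots_in_field = 100
scanning = max_range_scale(1.0)                 # whole pulse on one spot
flash = max_range_scale(1.0 / spots_in_field)   # pulse shared by all spots

print(f"flash range is {flash / scanning:.2f}x the scanning range")
```

Under these assumptions, sharing the pulse across 100 spots cuts the range to a tenth; real systems differ in receiver design and eye-safety limits, so the number is only indicative.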

Looking to the future, Jacobs’ message was that no single sensor can do everything. There are different quality metrics associated with what the different sensor modalities can achieve. However, he did highlight three key technologies that he believes are going to be enablers for autonomous driving.

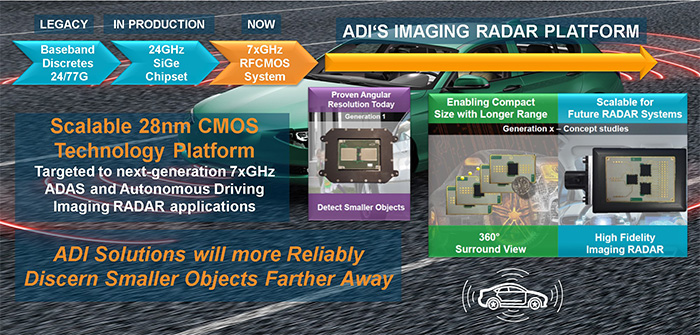

Imaging RADAR

The idea behind this technology is that RADAR images start to become more like camera images, with a lot more clarity. “We set up an example with a tripod and a cylinder,” added Jacobs. “We illuminated the scene with a RADAR that was not an imaging RADAR, and the cylinder and tripod were merged together. This has an angular resolution of anywhere between 10 and 20 degrees. Why is this bad? Well, if you had a child next to a truck, it would not show the difference.”

By implementing imaging RADAR techniques, however, ADI were able to distinguish between these objects. In turn, this means that the system can start assessing smaller objects in the presence of larger ones, and things like the intent of an object can be ascertained.
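Why a 10 to 20 degree resolution merges a child with a truck can be shown with small-angle geometry: one angular resolution cell spans roughly R·θ metres at range R. The scene below (a child 1.5 m from a truck, 30 m ahead) is hypothetical, and treating "resolved" as "separation larger than one cell" is a deliberate simplification.

```python
import math

def cross_range_resolution(range_m: float, angular_res_deg: float) -> float:
    """Approximate width (m) of one angular resolution cell at a given range:
    arc length R * theta, valid for small angles."""
    return range_m * math.radians(angular_res_deg)

# Hypothetical scene: a child standing 1.5 m from a truck, 30 m ahead.
separation_m = 1.5
range_m = 30.0
for res_deg in (20.0, 10.0, 1.0):
    cell = cross_range_resolution(range_m, res_deg)
    resolved = cell < separation_m  # crude criterion: gap wider than one cell
    print(f"{res_deg:>4.0f} deg -> {cell:5.2f} m cell, resolved: {resolved}")
```

At 30 m, a 10 degree cell is over 5 m wide, so both objects fall in the same cell; only around 1 degree does the cell shrink below the gap between them.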

Jacobs continued: “Many of these current systems are built on cascading multiple chips and many antennas. In the long run that’s not going to work. If you want to place it behind the bumper for example, there are different techniques that you have to put in place to keep the hardware load to a reasonable amount but still achieve the required level of resolution.

“You can see in the industry today many people are adopting a brute force approach, i.e. just putting down more channels. That’s not a sustainable position. There has to be a more algorithmic approach, so that the hardware can be kept to a reasonable level, which will then allow a lot of the synthesis to be done on the software side.”
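The cost of the brute force approach can be estimated with two standard MIMO RADAR rules of thumb: n_tx transmitters and n_rx receivers synthesise up to n_tx × n_rx virtual channels, and a uniform linear array with half-wavelength spacing resolves roughly 2/N radians at boresight. The chip configurations below are hypothetical, and the sketch assumes every virtual channel sits in a single azimuth row, which real layouts often do not.

```python
import math

def virtual_channels(n_tx: int, n_rx: int) -> int:
    """A MIMO radar with n_tx transmitters and n_rx receivers can synthesise
    up to n_tx * n_rx virtual receive channels."""
    return n_tx * n_rx

def angular_resolution_deg(n_virtual: int) -> float:
    """Rule-of-thumb boresight resolution of a uniform linear array with
    half-wavelength spacing: about 2 / N radians."""
    return math.degrees(2.0 / n_virtual)

# Hypothetical configurations: one 3Tx/4Rx chip versus four cascaded chips,
# assuming every virtual channel sits in a single horizontal (azimuth) row.
for label, n_tx, n_rx in (("single chip", 3, 4), ("4-chip cascade", 12, 16)):
    n = virtual_channels(n_tx, n_rx)
    print(f"{label}: {n} virtual channels, ~{angular_resolution_deg(n):.1f} deg")

# Virtual channels needed for ~1 degree by adding hardware alone
needed = math.ceil(2.0 / math.radians(1.0))
print(f"~{needed} virtual channels for 1 deg")
```

Resolution improves only linearly with channel count, which is why reaching around 1 degree purely through hardware means cascading several chips, the position Jacobs describes as unsustainable.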

LIDAR

This is an area where ADI are investing quite aggressively, with a particular focus on 1,500nm. “This is a wavelength of light which is by and large unused today,” added Jacobs. Many current systems are built around 900nm, largely because silicon photodiodes and less expensive lasers are available at that near-infrared wavelength.

“However, by pushing towards 1,500nm you achieve a different dynamic. 900nm is very near the visible spectrum of light, so when you see these 900nm systems there is a small red dot. The nature of that light is that it’s focused on your retina, so there’s only a certain amount of output power that you can put on those lasers.”

At 1,500nm the dynamic is different, as the light is absorbed at the front of the eye rather than being focused onto the retina. It’s also outside the visible spectrum, and the permissible pulse energy is around 40,000 times higher for the same eye-safety level. In practice, that translates into roughly four times the range for the same target reflectivity.

Jacobs continued: “So when you hear people saying things like ‘my system does 100 metres for LIDAR’, you always have to ask ‘at what reflectivity?’ It’s easy to see a car at 100 metres because it’s made of metal and it reflects strongly (it’s around 80% reflective). But can you see a tyre, for example, which absorbs light? You have to think about the remaining 20%.”
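The "at what reflectivity?" question can be made concrete with the same simple link model: if the return scales as reflectivity/R² and the detection threshold is fixed, maximum range scales with the square root of reflectivity. The 80% car figure comes from the quote above; the 10% tyre reflectivity is a hypothetical value chosen for illustration.

```python
import math

def range_at_reflectivity(ref_range_m: float, ref_reflectivity: float,
                          target_reflectivity: float) -> float:
    """Scale a quoted LIDAR range to a different target reflectivity,
    assuming return signal ~ reflectivity / R**2 and a fixed detection
    threshold, so R_max ~ sqrt(reflectivity)."""
    return ref_range_m * math.sqrt(target_reflectivity / ref_reflectivity)

# Range quoted against an ~80% reflective car, as in the example above.
car_range = 100.0
# Hypothetical dark target (e.g. a tyre) assumed to be 10% reflective.
tyre_range = range_at_reflectivity(car_range, 0.80, 0.10)
print(f"{tyre_range:.0f} m")
```

Under this model, a system quoted at 100 metres on a car would detect the hypothetical tyre at only around 35 metres.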

At 1,500nm, spectral irradiance from the sun is around 70% lower than at 900nm, so LIDAR at this wavelength copes much better with bright ambient light. “We’ve done a lot of analysis and 1,500nm LIDAR is much better at detecting things like haze, smoke, dust and fine aerosols,” Jacobs added. “There are still challenges with things like reflective particles. It’s a photonics system, so in environments like snow and rain there are still issues to resolve. But 1,500nm addresses some of the more fundamental problems, whether it’s range or robustness in the sun.”
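One way to see why lower solar irradiance matters: when background light dominates, the noise is photon shot noise, which grows with the square root of the background level. The sketch below uses the roughly 70% reduction quoted above and assumes everything else (return signal, optics, filter bandwidth, detector) is held equal, which real 900nm and 1,500nm receivers do not satisfy, so treat the result as a direction rather than a figure.

```python
import math

def background_noise_scale(irradiance_ratio: float) -> float:
    """Relative background shot noise when solar spectral irradiance is scaled
    by `irradiance_ratio`: shot noise ~ sqrt(background photon count)."""
    return math.sqrt(irradiance_ratio)

# ~70% less spectral irradiance at 1,500nm than at 900nm (figure quoted above)
noise = background_noise_scale(0.30)
snr_gain = 1.0 / noise  # assumes same signal, optics and detector
print(f"noise x{noise:.2f}, SNR x{snr_gain:.2f}")
```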

The question, then, is why isn’t everyone using 1,500nm? Jacobs continued: “The lasers are very expensive and the photodetector technology is export-controlled. It’s manufactured on two-inch wafers and based on indium gallium arsenide, so the technology is just not viable in most instances.”

Therefore, as Jacobs explained, the real game changer will be to develop a photodetector technology that can be manufactured in an automotive-qualified process on larger wafers, while achieving low dark current and low capacitance. “I think that once the industry moves towards 1,500nm it’s going to be a pretty significant shift in the market,” he added.

It is also around this technology that Jacobs has noticed a real shift in the market. “We’ve done a lot of work on the proof of concept of these types of systems, but it should be noted that we are a Tier 2 supplier, not a Tier 1. However, the nature of the industry has meant that we’ve been asked to contribute to the market discussion at the module level.

“In other words, ten years ago I would show up to a customer with a bag of chips, and they would take care of everything else. The world has changed. Now I’m being asked specifically, ‘does your RADAR see the bike, next to the guardrail, under the bridge?’ So I need to be able to run those use cases. All of our technology is now embedded in those car-mounted systems, so we understand what the system is asking us to do, and we can then inform our semiconductor design as a part of that.”

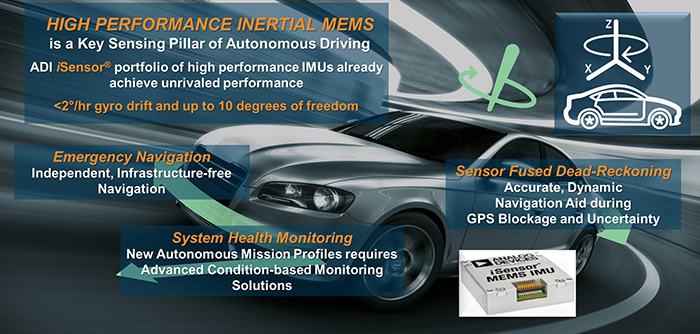

Inertial Measurement Units (IMU)

As Jacobs explained, these are often the unsung heroes of a lot of Level 4 and highly autonomous driving systems. IMUs are inertial products that largely support navigation functions. Today you will have an electronic stability control (ESC) gyro in your car, which handles vehicle dynamics. So when you go round a corner, it measures the car’s rate of rotation, allowing the stability system to manage how braking and power are applied to the individual wheels.

He added: “We ran an experiment around a three-mile loop in the Boston area using the ESC-grade gyro, and asked: if you were only using that for navigation, how would the vehicle operate? In the experiment the car started to drift, because all gyros drift off-centre over time.

“However, with our autonomous-grade IMU, even uncalibrated, it largely stayed on path. This is the same technology that is used in military and aerospace applications, for example in missile guidance systems (or avionics with autopilot applications). We’ve had these products for ten years, and now we’re bringing them into the automotive space.
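The drift in the Boston experiment comes from integrating a gyro's bias: a constant rate error becomes a heading error that grows with time, and the position error grows faster still. The sketch below dead-reckons a car that really drives straight using only a yaw gyro. The bias values, speed and duration are hypothetical, chosen only to contrast two grades of sensor, and are not ADI specifications.

```python
import math

def cross_track_drift(bias_deg_s: float, speed_m_s: float,
                      duration_s: float, dt: float = 0.01) -> float:
    """Dead-reckon a vehicle that really drives straight, using only a yaw
    gyro with a constant bias. Returns the lateral (cross-track) error in
    metres at the end of the run."""
    bias = math.radians(bias_deg_s)
    heading_err = 0.0  # integrated gyro bias, radians
    lateral = 0.0      # estimated sideways offset, metres
    for _ in range(round(duration_s / dt)):
        heading_err += bias * dt                            # integrate yaw rate
        lateral += speed_m_s * math.sin(heading_err) * dt   # integrate position
    return lateral

# Hypothetical bias figures, chosen only to contrast two gyro grades.
for label, bias in (("ESC-grade", 0.05), ("autonomous-grade", 0.001)):
    err = cross_track_drift(bias, speed_m_s=15.0, duration_s=60.0)
    print(f"{label}: {err:.2f} m off track after 60 s")
```

Because the heading error grows linearly, the lateral error grows roughly with the square of time, so a small difference in gyro bias becomes a large difference in how long the car can hold its lane on inertial data alone.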

“What’s interesting is that a lot of people don’t really understand this technology as everyone is focusing on RADAR, LIDAR, cameras etc. But a primary use case where this technology is valuable would be if your car went into a tunnel. The lighting conditions will change dramatically and there will be a period of time in which the Kalman filter in your camera system will become unstable until it adjusts to the new light.

“Likewise, the RADAR system could be dealing with multi-path effects, with signals bouncing off the walls, so again there will be a period of time where your perception machine may become unstable. So what’s keeping you in your lane? It’s your precision map and your IMU.”
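The tunnel scenario can be illustrated with a deliberately simplified one-dimensional Kalman filter on the car's lateral offset in its lane. The IMU drives the prediction step; camera lane detections drive the correction step and are missing while in the tunnel. The class name, noise values and outage window are all hypothetical, and this is a generic textbook filter rather than any production fusion design.

```python
from dataclasses import dataclass
from typing import Optional

@dataclass
class LaneOffsetFilter:
    """1-D Kalman filter on lateral offset within the lane (metres)."""
    x: float = 0.0   # estimated lateral offset
    p: float = 0.1   # estimate variance, m^2
    q: float = 0.01  # process noise added per IMU prediction step, m^2
    r: float = 0.05  # camera measurement noise variance, m^2

    def predict(self, lateral_velocity: float, dt: float) -> None:
        # Propagate with the IMU-derived lateral velocity; uncertainty grows.
        self.x += lateral_velocity * dt
        self.p += self.q

    def update(self, camera_offset: Optional[float]) -> None:
        # Skip the correction when the camera has no valid lane measurement.
        if camera_offset is None:
            return
        k = self.p / (self.p + self.r)  # Kalman gain
        self.x += k * (camera_offset - self.x)
        self.p *= 1.0 - k

# Hypothetical drive: camera lane detections drop out for steps 40-69 (tunnel).
kf = LaneOffsetFilter()
variances = []
for step in range(100):
    kf.predict(lateral_velocity=0.0, dt=0.1)
    in_tunnel = 40 <= step < 70
    kf.update(None if in_tunnel else 0.0)
    variances.append(kf.p)

print(f"before tunnel {variances[39]:.3f}, end of tunnel {variances[69]:.3f}, "
      f"after {variances[99]:.3f} m^2")
```

While the camera is out, the estimate rests entirely on the IMU prediction and its variance climbs step by step; the better the IMU, the smaller the process noise and the slower that growth, which is the role Jacobs assigns to it.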