Machine learning code in an embedded IoT node

Internet of Things (IoT) networks operating in dynamic environments are being expanded beyond object detection to include visual object identification in applications such as security, environmental monitoring, safety, and Industrial IoT (IIoT). Rich Miron, Applications Engineer at Digi-Key Electronics, explains.

As object identification is adaptive and involves using machine learning (ML) models, it is a complex field that can be difficult to learn from scratch and implement efficiently.

The difficulty stems from the fact that an ML model is only as good as its data set, and once the correct data is acquired, the system must be properly trained to act upon it in order to be practical.

This article will show developers how to implement Google’s TensorFlow Lite for Microcontrollers ML engine on a Microchip Technology microcontroller. It will then explain how to use the image classification and object detection learning data sets with TensorFlow Lite to identify objects with a minimum of custom coding.

It will then introduce a TensorFlow Lite ML starter kit from Adafruit Industries that can familiarise developers with the basics of ML.

ML for embedded vision systems

ML, in a broad sense, gives a computer or an embedded system pattern recognition capabilities similar to those of a human. From a human sensory standpoint this means using sensors such as microphones and cameras to mimic the human senses of hearing and sight. While sensors make it easy to capture audio and visual data, once the data is digitised and stored it must be processed so that it can be matched against patterns stored in memory that represent known sounds or objects. The challenge is that the image data a camera captures for a visual object, for example, will not exactly match the data stored in memory for that object. An ML application that needs to visually identify the object must process the data so that it can accurately and efficiently match the pattern captured by the camera to a pattern stored in memory.
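
The matching problem described above can be illustrated with a toy sketch in Python. It is not how TensorFlow Lite for Microcontrollers works internally (a real model uses trained neural network weights); it only shows why a captured pattern is scored for similarity rather than compared for equality. The four-element feature vectors, the labels, and the function names are all invented for illustration.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector matches nothing
    return dot / (norm_a * norm_b)


def best_match(captured, stored_patterns):
    """Return (label, score) of the stored pattern most similar to the capture."""
    scores = {
        label: cosine_similarity(captured, pattern)
        for label, pattern in stored_patterns.items()
    }
    label = max(scores, key=scores.get)
    return label, scores[label]


# Hypothetical stored patterns: invented feature vectors, not real image data.
STORED_PATTERNS = {
    "wrench": [0.9, 0.1, 0.0, 0.2],
    "banana": [0.1, 0.8, 0.6, 0.0],
}

# A capture that resembles the stored banana pattern but does not equal it.
captured = [0.2, 0.7, 0.5, 0.1]

label, score = best_match(captured, STORED_PATTERNS)
print(f"Best match: {label} (similarity {score:.2f})")
```

An exact comparison would reject this capture, because no stored vector equals it. A similarity score still ranks the banana pattern well ahead of the wrench.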

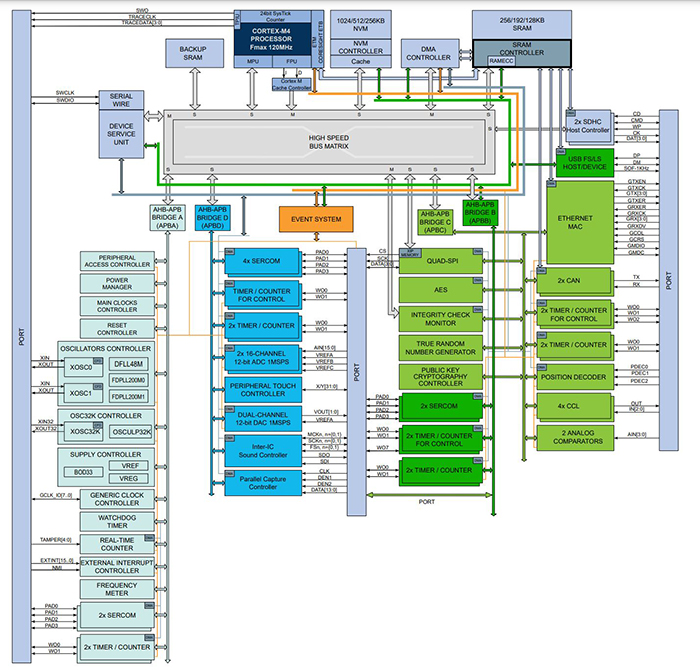

Above: Figure 1. The ATSAMD51J19A is based on an Arm Cortex-M4F core running at 120MHz. It is a full-featured microcontroller with 512 Kbytes of flash and 192 Kbytes of SRAM. (Image source: Microchip Technology)

There are different libraries or engines used to match the data captured by the sensors. TensorFlow is an open-source code library that is used to match patterns. The TensorFlow Lite for Microcontrollers code library is specifically designed to be run on a microcontroller, and as a consequence has reduced memory and CPU requirements to run on more limited hardware. Specifically, it requires a 32-bit microcontroller and uses less than 25 kilobytes (Kbytes) of flash memory.

However, while TensorFlow Lite for Microcontrollers is the ML engine, the system still needs a learning data set of the patterns it is to identify. Regardless of how good the ML engine is, the system is only as good as its learning data set, and for visual objects some of the learning data sets can require multiple gigabytes of data for many large models. More data requires higher CPU performance to quickly find an accurate match, which is why these types of applications normally run on powerful computers or high-end laptops.

For an embedded systems application, it should only be necessary to store those specific models in a learning data set that are necessary for the application. If a system is supposed to recognise tools and hardware, then models representing fruit and toys can be removed. This reduces the size of the learning data set, which in turn lowers the memory needs of the embedded system, thus improving performance while reducing costs.
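
As a rough illustration of that trimming step, the Python sketch below filters a catalogue of models down to the categories an application needs and totals the storage before and after. The category names follow the example above, but the sizes in Kbytes are invented placeholders, not measurements of any real data set, and the function name is hypothetical.

```python
# Hypothetical catalogue: category -> storage in Kbytes (invented numbers).
CATALOGUE_KBYTES = {
    "tools": 140,
    "hardware": 110,
    "fruit": 180,
    "toys": 220,
}


def prune(catalogue, needed):
    """Keep only the categories the application actually has to recognise."""
    return {name: size for name, size in catalogue.items() if name in needed}


kept = prune(CATALOGUE_KBYTES, {"tools", "hardware"})

full_size = sum(CATALOGUE_KBYTES.values())
kept_size = sum(kept.values())

print(f"Full data set:   {full_size} Kbytes")
print(f"Pruned data set: {kept_size} Kbytes")
print(f"Saved:           {full_size - kept_size} Kbytes")
```

With these placeholder figures, dropping the fruit and toy models removes more than half of the storage requirement.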

An ML microcontroller

Microchip Technology is targeting machine learning in microcontrollers with the Arm Cortex-M4F-based ATSAMD51J19A-AFT (Figure 1), which can run TensorFlow Lite for Microcontrollers. It has 512 Kbytes of flash memory and 192 Kbytes of SRAM, and runs at 120 megahertz (MHz). The ATSAMD51J19A-AFT is part of the Microchip Technology ATSAMD51 ML microcontroller family. It is compliant with automotive AEC-Q100 Grade 1 quality standards and operates from -40°C to +125°C, making it suitable for the harshest IoT and IIoT environments. It is a low-voltage microcontroller and operates from 1.71V to 3.63V when running at 120MHz.

The ATSAMD51J19A networking options include CAN 2.0B for industrial networking and 10/100 Ethernet for most wired networks. This allows the microcontroller to function on a variety of IoT networks. A USB 2.0 interface supports both host and device modes of operation and can be used for device debugging or system networking.

A 4 Kbyte combined instruction and data cache improves performance when processing ML code. A floating point unit (FPU) is also useful for improving performance of ML code as well as processing raw sensor data.

Storing learning data sets

The ATSAMD51J19A also has a QSPI interface for external program or data memory storage. This is useful for extra data storage of learning data sets that exceed the capacity of the on-chip flash memory. The QSPI also has eXecute in Place (XiP) support for external high-speed program memory expansion.

The ATSAMD51J19A also has an SD/MMC memory card host controller (SDHC) which is very useful for ML applications as it allows for easy memory swap of ML code and learning data sets. While the TensorFlow Lite for Microcontrollers engine can run in the 512 Kbytes of flash on the ATSAMD51J19A, the learning data sets can be upgraded and improved on a regular basis. The learning data set can be stored in an external QSPI flash or QSPI EEPROM, and depending on the network configuration, can be upgraded remotely over the network. However, for some systems it can be more convenient to physically swap out a memory card with another that has an improved learning data set. In this configuration the developer needs to decide if the system should be designed to hot-swap the memory card, or if the IoT node needs to be shut down.

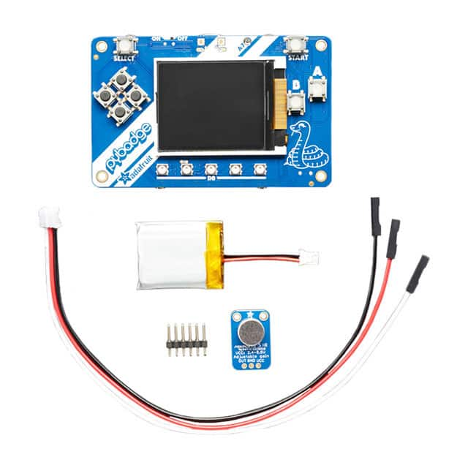

Above: Figure 2. The Adafruit Industries 4317 TensorFlow Lite for Microcontrollers development kit comes with a colour TFT LCD for development and can display the results of ML operations. (Image source: Adafruit Industries)

If the IoT node is extremely space constrained, then instead of using external memory it would be advantageous to put as much of the application as possible into the microcontroller memory. The Microchip Technology ATSAMD51J20A-AFT is similar to, and pin compatible with, the ATSAMD51J19A, except that it has 1 Mbyte of flash and 256 Kbytes of SRAM, providing more on-chip storage for learning data sets.
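
To make the trade-off concrete, the following Python sketch checks whether an application fits in on-chip flash and picks the smaller of the two parts when it does. The flash sizes and the 25 Kbyte engine footprint are the figures quoted in this article, with the engine figure treated as an upper bound. The application and data set sizes passed in are hypothetical, and the function name is made up for this example.

```python
# On-chip flash per part, in Kbytes (1 Mbyte taken as 1024 Kbytes).
FLASH_KBYTES = {
    "ATSAMD51J19A": 512,
    "ATSAMD51J20A": 1024,
}

# Upper bound for the TensorFlow Lite for Microcontrollers engine, per the article.
ENGINE_KBYTES = 25


def smallest_part_that_fits(app_kbytes, data_set_kbytes):
    """Return the part with the least flash that holds everything, or None."""
    required = ENGINE_KBYTES + app_kbytes + data_set_kbytes
    # Try the parts in order of increasing flash size.
    for part, flash in sorted(FLASH_KBYTES.items(), key=lambda item: item[1]):
        if required <= flash:
            return part
    return None  # too large for on-chip flash; external memory is needed


# Hypothetical sizes: 100 Kbytes of application code plus three data sets.
for data_set in (250, 600, 950):
    part = smallest_part_that_fits(app_kbytes=100, data_set_kbytes=data_set)
    print(f"{data_set} Kbyte data set -> {part or 'external memory required'}")
```

A result of `None` corresponds to the case where the learning data set has to move to external QSPI flash or a memory card.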

Developing with TensorFlow Lite

Adafruit Industries supports development on the ATSAMD51J19A with the 4317 TensorFlow Lite for Microcontrollers development kit (Figure 2). The board has 2 Mbytes of QSPI flash which can be used to store learning data sets. The kit comes with a microphone jack for ML audio recognition. Its 1.8 inch colour 160x128 TFT LCD can be used for development and debugging. The display can also be used for voice recognition demos when TensorFlow Lite for Microcontrollers is used with a voice recognition learning data set. As the application recognises different words, they can be displayed on the screen.

The Adafruit Industries kit also has eight pushbuttons, a three-axis accelerometer, a light sensor, a mini-speaker, and a lithium-poly battery. The USB 2.0 port on the ATSAMD51J19A is brought out to a connector for battery charging, debugging, and programming.

The Adafruit kit comes with the latest version of TensorFlow Lite for Microcontrollers. Learning data sets can be loaded using the USB port into the ATSAMD51J19A microcontroller’s 512 Kbytes of flash memory or loaded into the external 2 Mbytes QSPI memory.

For image recognition evaluation, the TensorFlow object detection learning set can be loaded onto the development board. The development board has ports for connecting to the microcontroller’s parallel and serial ports, many of which can be used to connect to an external camera. With the object detection learning set loaded on the microcontroller, the LCD can show the results of the object detection ML processing. If the system recognises a banana, for example, the TFT display might list the objects recognised along with a percent confidence for each. An example results display might show:

Banana: 95%

Wrench: 12%

Eyeglasses: 8%

Comb: 2%

For development of IoT object detection applications, this can speed development and help diagnose any incorrect detection results.
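
A minimal sketch of how such a results list could be produced is shown below, in Python for readability rather than as firmware for the board. It assumes the ML engine hands back one confidence value between 0 and 1 per label, which is an assumption about the output format, and the function name is hypothetical. As in the example display, the percentages are not required to add up to 100.

```python
def format_results(scores, top_k=4):
    """Rank labels by confidence and format each as 'Label: NN%'."""
    ranked = sorted(scores.items(), key=lambda item: item[1], reverse=True)
    return [f"{label}: {round(score * 100)}%" for label, score in ranked[:top_k]]


# Hypothetical confidences, chosen to match the example display in this article.
scores = {
    "Comb": 0.02,
    "Banana": 0.95,
    "Eyeglasses": 0.08,
    "Wrench": 0.12,
}

lines = format_results(scores)
for line in lines:
    print(line)
```

Run against these values, the sketch reproduces the four lines of the example display, highest confidence first. A smaller `top_k` shortens the list for a small screen.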

Conclusion

ML is an expanding field that requires specialised skills to develop microcontroller engines and models from scratch and implement them efficiently at the edge. However, using an existing code library such as TensorFlow Lite for Microcontrollers on low-cost, highly efficient microcontrollers or development boards saves time and money, resulting in a high-performance ML system that can be used to detect objects in an IoT node quickly, reliably, and efficiently.