Embedded machine learning for industrial applications

Over the last few years, there have been countless predictions of how our lives are about to be transformed by artificial intelligence (AI) and machine learning (ML). All this comes at a cost, with the worldwide market forecast to grow to an eye-watering $554 billion by 2024.

By Mark Patrick, Mouser Electronics

Manufacturing and the industrial sector are no exception, and AI/ML is becoming a crucial part of the industrial digital transformation. Computers in factories are far from new: programmable logic controllers (PLCs) are near-ubiquitous, and supervisory control and data acquisition (SCADA) systems are well established. However, the industrial internet of things (IIoT) means we now have sensors on the production line generating ever-increasing amounts of data. AI and ML provide a way to exploit all this information to drive efficiencies and improvements, helping us move to Industry 4.0.

So much for the hype and the jargon. How can engineers actually apply AI/ML to achieve real, measurable benefits? Is the technology sufficiently mature to justify its rollout on the factory floor, or is it still early days?

Let’s start by clarifying one crucial point: ML is not the same as AI. While definitions vary, there's a consensus that AI refers broadly to a range of approaches to getting computers to think. ML, on the other hand, can be defined more narrowly as enabling computers to learn and improve automatically from data, rather than having a human design every aspect of a program or solution. In ML, we construct algorithms that let the system learn by making predictions, measuring how accurate they are and adjusting itself to reduce the error.
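
The predict, measure, adjust loop can be illustrated with a deliberately tiny example: fitting a straight line by gradient descent. This is a sketch only; the data is synthetic and the function names are our own, and real industrial models are far larger, but the same basic cycle underlies many ML training methods.

```python
# Minimal sketch: learn y ≈ w*x + b by gradient descent on mean squared error.
# The data is synthetic and purely illustrative.

def train(xs, ys, lr=0.01, epochs=2000):
    """Repeatedly predict, measure the error and adjust w and b to reduce it."""
    w, b = 0.0, 0.0
    n = len(xs)
    for _ in range(epochs):
        # 1. Make predictions with the current parameters.
        preds = [w * x + b for x in xs]
        # 2. Measure how wrong they are: gradients of the mean squared error.
        grad_w = sum(2 * (p - y) * x for p, y, x in zip(preds, ys, xs)) / n
        grad_b = sum(2 * (p - y) for p, y in zip(preds, ys)) / n
        # 3. Nudge the parameters in the direction that reduces the error.
        w -= lr * grad_w
        b -= lr * grad_b
    return w, b


def mse(xs, ys, w, b):
    """Mean squared error of the line w*x + b on the given data."""
    return sum((w * x + b - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


if __name__ == "__main__":
    xs = [0.0, 1.0, 2.0, 3.0, 4.0]
    ys = [1.0, 3.0, 5.0, 7.0, 9.0]  # synthetic data generated from y = 2x + 1
    w, b = train(xs, ys)
    print(f"learned w={w:.3f}, b={b:.3f}, error={mse(xs, ys, w, b):.6f}")
```

Each pass through the loop makes predictions, checks them against the known answers and moves the parameters slightly; after enough passes the learned line closely matches the one that generated the data.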

In industry, ML is finding uses in a whole range of areas, from predictive maintenance to optimising process efficiency, to straightforward but vital tasks such as deciding when replacement parts and consumables need ordering. For example, a machine tool may have multiple temperature and vibration sensors. An ML system can learn to recognise when the data from these sensors indicates that a part is worn or out of adjustment and that a failure is likely soon. The data can come from sensors retrofitted to existing equipment or from new machine tools that have suitable sensors built in.
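
As a deliberately simple stand-in for a trained model, the sketch below learns the normal range of each sensor from readings taken while a machine tool is known to be healthy, then flags readings that fall far outside that range. The class name, sensor names, units and the three-standard-deviation threshold are all illustrative assumptions; a production system would typically use a richer model trained on real operating and failure data.

```python
from statistics import mean, stdev


class SensorBaseline:
    """Hypothetical helper: learns each sensor's normal range from healthy data
    and flags new readings that sit far outside it (a simple z-score test)."""

    def __init__(self, threshold=3.0):
        self.threshold = threshold  # standard deviations that count as abnormal
        self.stats = {}             # sensor name -> (mean, standard deviation)

    def fit(self, healthy):
        """healthy: dict of sensor name -> readings taken while the tool was known to be OK."""
        for name, values in healthy.items():
            # Guard against a zero spread so score() never divides by zero.
            self.stats[name] = (mean(values), max(stdev(values), 1e-9))
        return self

    def score(self, reading):
        """Largest number of standard deviations any sensor is from its healthy mean."""
        return max(abs(reading[name] - mu) / sigma
                   for name, (mu, sigma) in self.stats.items())

    def is_abnormal(self, reading):
        return self.score(reading) > self.threshold


if __name__ == "__main__":
    # Illustrative readings: temperature in °C, vibration velocity in mm/s.
    healthy = {
        "temp_c": [40.1, 40.3, 39.9, 40.0, 40.2],
        "vib_mm_s": [2.0, 2.1, 1.9, 2.0, 2.0],
    }
    model = SensorBaseline().fit(healthy)
    print(model.is_abnormal({"temp_c": 40.2, "vib_mm_s": 2.05}))  # False: within the normal range
    print(model.is_abnormal({"temp_c": 40.3, "vib_mm_s": 2.6}))   # True: vibration well above normal
```

Here the second reading is flagged because its vibration level is many standard deviations above the healthy baseline, even though its temperature looks normal.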

Where is ML used today?

Let's look at some of these applications in more detail and see where ML is currently used in industrial automation.

Typical use cases today include:

- Machine vision – for example, for inspection and quality control, where an ML system can be trained to recognise problems. This could be as simple as a missing object on a conveyor belt.

- Decision-making – using data to pick the best action automatically and quickly in real time, improving efficiency and reducing the scope for human error.

- Predictive maintenance – using sensor data to spot upcoming problems, minimising downtime and the associated costs.

- Helping to increase safety – identifying any events that may cause risks and acting accordingly, from shutting down a machine to avoiding collisions between robots, vehicles or people.

In practice, not every system is suitable for ML. There may be limits on what data can be gathered and how it can be processed, or the cost of adding sensors, processing capability, power and networking may be too high. Latency is another issue, particularly where delays are introduced by sending data to and from remote computing resources or the cloud.
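
To see why latency matters, it can help to add up a worst-case budget for one sense, infer and act cycle. The sketch below is back-of-envelope arithmetic; the 20 ms deadline and all the timings are hypothetical figures chosen for illustration, not measurements of any real network or processor.

```python
def worst_case_cycle_ms(inference_ms, round_trip_ms=0.0, jitter_ms=0.0):
    """Worst-case time for one sense -> infer -> act cycle, in milliseconds."""
    return inference_ms + round_trip_ms + jitter_ms


if __name__ == "__main__":
    DEADLINE_MS = 20.0  # hypothetical reaction deadline, e.g. for stopping a machine
    options = {
        # All timings are illustrative assumptions, not measurements.
        "local (embedded)": worst_case_cycle_ms(inference_ms=5.0),
        "remote (cloud)": worst_case_cycle_ms(inference_ms=1.0, round_trip_ms=40.0, jitter_ms=25.0),
    }
    for name, total in options.items():
        verdict = "meets" if total <= DEADLINE_MS else "misses"
        print(f"{name}: {total:.0f} ms worst case, {verdict} the {DEADLINE_MS:.0f} ms deadline")
```

Even though the remote server is assumed to run the model faster, the network round trip and its variability dominate the total, which is why time-critical decisions are often kept local.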

Challenges in integrating ML into automation

Automation vendor Beckhoff has identified five essential requirements that must all be met to integrate ML into automation successfully. These provide a useful starting point:

- Open interfaces to ensure interoperability.

- ML solutions that are simple enough to use and integrate with existing software, without expert knowledge.

- ML solutions that are reliable and accurate enough to deliver valuable results.

- Robust training methods that can handle noisy or imprecise data.

- Transparency, so the ML systems are well understood.

AI and ML projects may also be challenging to implement in practice, particularly for organisations that are not experienced in this area. According to Gartner, ‘AI projects often fail due to issues with maintainability, scalability and governance’, and there is a big step between a proof of concept and rolling out a system in production. It can be easy for projects to spiral out of control, with unrealistic expectations not being matched by scalable performance, and a lack of visibility hiding the true problems from a company's decision-makers.

It's also essential to think about the practicalities of implementing an ML system and how much computing performance it needs. This is not always immediately obvious, and for any ML system there is a difference between the needs of the initial training process and those of the day-to-day use of the trained model after deployment.

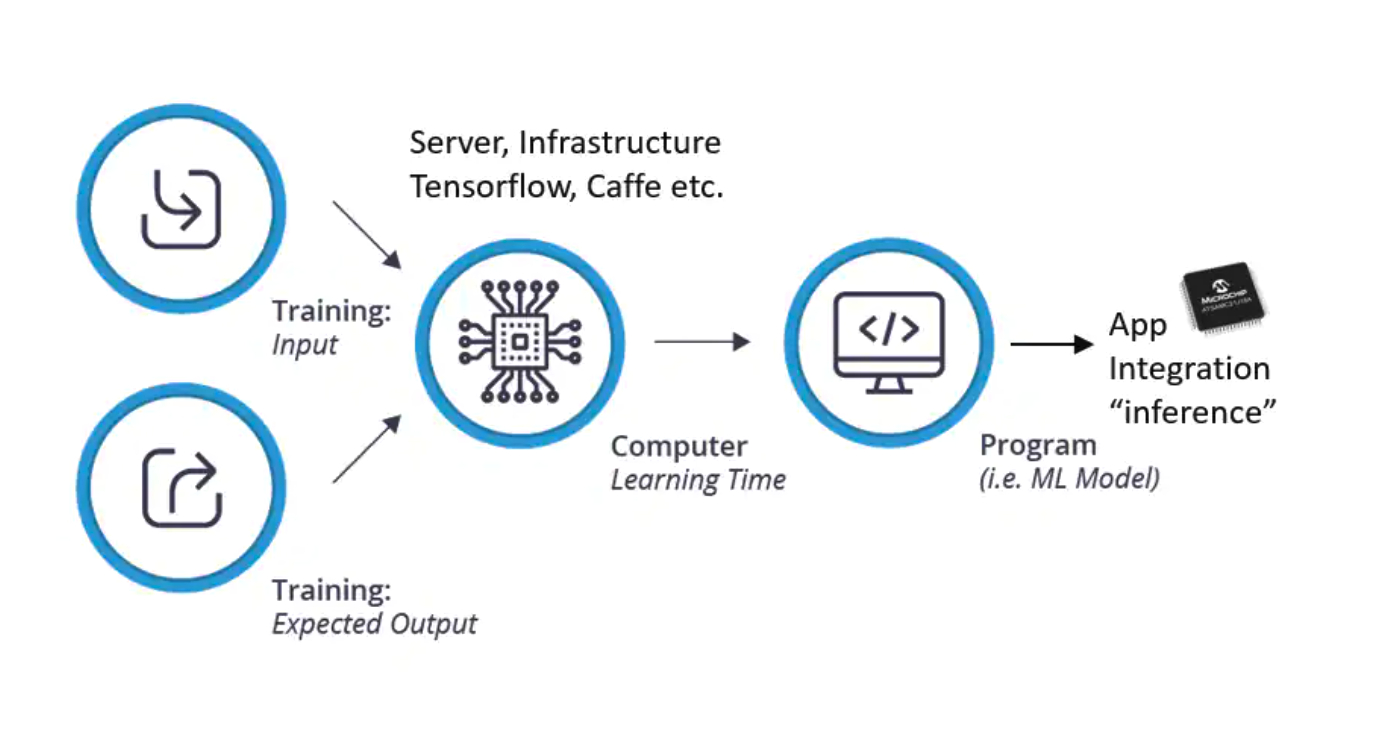

The pre-production training phase may require massive datasets and intense computation, which needs powerful PCs or servers. But once a system is rolled out in the factory, applying the trained model to new data to make predictions (a process called 'inference') needs much less performance. This makes it well suited to embedded processors.
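
The split between training and inference can be illustrated with a deliberately small model. In this sketch, a logistic-regression fault classifier (our choice for illustration, not a method the article specifies) uses parameters assumed to have been learned offline on a PC or server; the weights, bias and feature meanings are hypothetical. Each inference on the device is then just a few multiplications and additions plus one exponential, whatever the size of the original training dataset, whereas training repeats predictions and parameter updates over the whole dataset many times.

```python
import math

# Hypothetical parameters, as if produced by an earlier training run on a PC or server
# and then copied to the embedded device. Features: temperature rise and vibration rise.
WEIGHTS = (0.8, 1.5)
BIAS = -4.0


def infer(features):
    """One inference: a fixed weighted sum plus a logistic (sigmoid) function.
    The parameters are only read, never updated, so no learning happens here."""
    z = BIAS + sum(w * f for w, f in zip(WEIGHTS, features))
    return 1.0 / (1.0 + math.exp(-z))  # estimated probability of a fault


if __name__ == "__main__":
    for sample in [(1.0, 1.0), (3.0, 2.0)]:
        print(sample, f"fault probability {infer(sample):.2f}")
```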

Running the ML models locally, either in an embedded system or in a PC at the ‘edge’, has some clear advantages over sending data off to a remote cloud or central server for processing. It’s often faster, with minimal latency, and it reduces data bandwidth requirements, as well as helping ensure data security and privacy.
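
The bandwidth saving from running inference locally is easy to estimate. The figures below (50 sensors, 3-axis accelerometers sampled at 1 kHz with 16-bit samples, one 4-byte result per sensor per second) are hypothetical assumptions chosen only to show the arithmetic.

```python
def bytes_per_second(rate_hz, channels, bytes_per_value):
    """Data rate of a stream sending `channels` values of `bytes_per_value` bytes, `rate_hz` times a second."""
    return rate_hz * channels * bytes_per_value


if __name__ == "__main__":
    SENSORS = 50  # hypothetical number of vibration sensors on a line
    # Raw data: 3-axis accelerometer sampled at 1 kHz with 16-bit (2-byte) samples.
    raw = SENSORS * bytes_per_second(rate_hz=1000, channels=3, bytes_per_value=2)
    # Local inference: each sensor node sends one 4-byte result per second instead.
    results = SENSORS * bytes_per_second(rate_hz=1, channels=1, bytes_per_value=4)
    print(f"raw: {raw * 8 / 1e6:.1f} Mbit/s, results only: {results * 8 / 1e3:.1f} kbit/s, "
          f"reduction: {raw / results:.0f}x")
```

Under these assumptions, streaming raw data needs 2.4 Mbit/s, while sending only the local inference results needs 1.6 kbit/s, a 1,500-fold reduction.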

Gartner's concept of the ‘empowered edge’ is a useful way to describe how computing resources and IoT sensors can be organised. In simple terms, ‘edge’ means that data is processed close to where it is generated. ‘Embedded’, in this context, can extend right down to the endpoint level, with sensors and actuators potentially having ML capabilities of their own.

Figure 1: Initial training runs on a computer, while embedded processors handle inferences in the field. (Source: Microchip)

ML: the why, what, where and how

ML is not necessarily the answer for everyone, but there will be clear benefits in many applications. Why should you use ML? It offers many companies improvements in efficiency, scalability, and productivity while keeping costs down. There are some concerns in areas such as ethics and liability, but for industrial operations that aren’t dealing directly with customers, these are likely to be well-controlled. In fact, IDC’s view that ‘Companies will adopt AI — not just because they can, but because they must’, is probably not an overstatement in the competitive industrial world.

To look at how to implement ML and what components you need, it's perhaps best to first consider the question of 'where': should you aim to run everything locally in an embedded system, or remotely in the cloud? Local is often best, but weigh the trade-offs carefully, including cost, latency, power consumption, security, and the physical space needed for computing resources.

For an embedded ML system, there are many suppliers now providing suitable processors for industrial applications. Let’s look at a few examples:

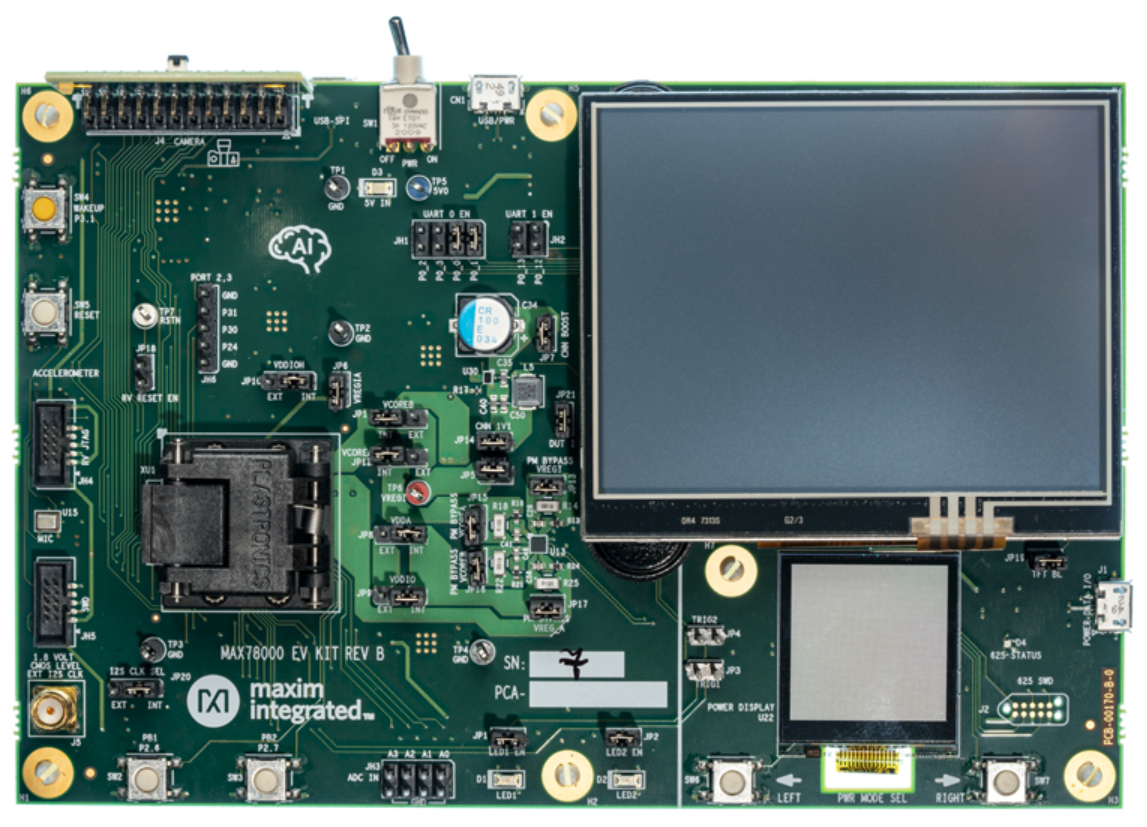

Maxim’s MAX78000 is a System on Chip (SoC) designed for AI and ML applications. It combines an Arm Cortex-M4 core with a dedicated hardware accelerator for executing neural-network inferences, providing high computational performance while keeping power consumption low. Maxim offers an evaluation kit and application platform to help engineers get up to speed quickly and get the most out of the MAX78000.

Another example is Microchip’s ML ecosystem, which includes the EV18H79A SAMD21 and EV45Y33A SAMD21 evaluation kits. These include sensors from TDK and Bosch, and are based around Microchip’s SAMD21G18 Arm Cortex-M0+ based 32-bit microcontroller (MCU).

One more option is NXP’s i.MX RT1060 Crossover MCU, which is based on an Arm Cortex-M7 core. Fully supported by NXP’s software and tools, it provides high CPU performance and a wide range of interfaces.

Figure 2: The Maxim MAX78000 Evaluation Kit includes the MAX78000 SoC, as well as a colour touch screen, microphone, and gyroscope/accelerometer. (Source: Maxim Integrated)

Conclusions

It's clear that ML can be an enabling technology in industrial applications and can improve manufacturing and other processes by increasing efficiency, scalability and productivity – as well as keeping costs low.

Implementing an ML solution can be complicated and involves weighing multiple trade-offs. One critical decision is whether the computing resources should sit at the edge or be embedded in the system itself, or be located remotely in the cloud or on a separate server.

A growing number of high-performance embedded processors can be used to implement ML in an industrial application, supported by an ecosystem of software and development tools from major vendors such as Maxim, Microchip and NXP. This means that embedding a suitable processor in the endpoints of a production system, and therefore close to your sources of data, can be a practical way of getting the most from an ML implementation.