Real-time video streaming for surveillance applications

Real-time surveillance and remote monitoring are gaining importance in a wide range of industries such as oil and gas, power grid, industrial automation and smart buildings. Demand for high-quality real-time video streaming in surveillance applications is higher than ever, and this calls for compute-intensive image processing designs and advanced encoding and decoding techniques.

By Pramod Ramachandra, Technical Architect - Software Design, Mistral Solutions Pvt Ltd.

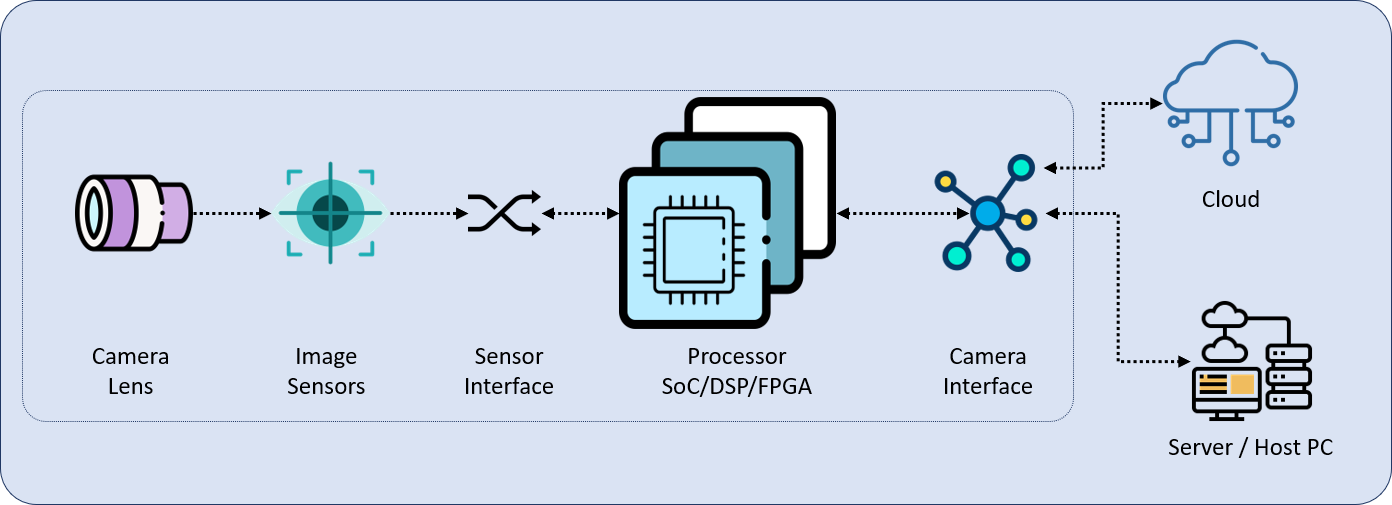

Advanced camera architectures include an onboard microprocessor or FPGA running visual analytics and image processing algorithms for pixel pre-processing and decision-making within the camera. Complemented by AI algorithms, these advanced cameras can also communicate in real time with the central command centre and stream only significant footage to aid user-level decision making.

From the early days, when surveillance was limited to a few analog cameras connected to PC monitors over coaxial cables, the model has evolved and grown in scope and importance. The advent of digital technologies has helped overcome several disadvantages of analog systems, such as complex cabling, low image quality, port limitations, and weak privacy and security.

Rapid developments in sensor technologies and embedded electronics, particularly the arrival of compute-intensive SoCs, high-speed DSPs, high-speed wireless and wired interfaces, video streaming protocols and cloud technologies, have enabled cameras to go beyond traditional surveillance and support advanced AI-based vision applications such as safe-zone monitoring, no-go zone monitoring and object detection.

Design and implementation of a high-speed, real-time video streaming camera requires expertise in a wide array of embedded technologies. This article is an attempt to dive a little deeper into the design of a high-end camera for surveillance applications. We explore the basics of camera architecture and video streaming along with several streaming protocols, selection of sensors and processing engines, system software requirements and cloud integration among others.

Design considerations

Key system components

Image sensors

The image sensor is the eye of a camera system; it contains millions of discrete photodetector sites called pixels. Virtually all visible-light image sensors, whether used in still, movie, industrial or security cameras, use either Charge-Coupled Device (CCD) or Complementary Metal Oxide Semiconductor (CMOS) technology. The fundamental role of the sensor is to convert the light focused on it by the lens into electrical signals, which are then digitised (on-chip in most CMOS sensors, or by a separate analog front end) and passed to the camera's processor.

Surveillance cameras demand high light sensitivity to produce good low-light images. Advanced image sensors deliver high-quality, low-noise images along with higher dynamic range, a wider field of view and faster shutter speeds.

The image sensor strongly influences the camera's performance as well as its size, weight, power and cost. Choosing the right sensor is therefore key to designing a high-quality surveillance camera. Sensor selection is driven by factors such as frame rate, resolution, pixel size, power consumption, quantum efficiency and field of view (FoV).
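Two of the selection factors above, pixel size and FoV, follow directly from sensor geometry. The Python sketch below assumes a simple rectilinear lens model (no distortion) and ignores any inactive border on the die; the 8 mm sensor width, 4 mm focal length and 3840-pixel resolution are hypothetical example values, not recommendations.

```python
import math

def horizontal_fov_deg(sensor_width_mm: float, focal_length_mm: float) -> float:
    """Horizontal field of view of a rectilinear lens: 2 * atan(w / 2f)."""
    return math.degrees(2 * math.atan(sensor_width_mm / (2 * focal_length_mm)))

def pixel_pitch_um(sensor_width_mm: float, horizontal_pixels: int) -> float:
    """Approximate pixel pitch in micrometres (active area / pixel count)."""
    return sensor_width_mm * 1000 / horizontal_pixels

# Hypothetical example: 8 mm wide active area behind a 4 mm lens, 3840 pixels wide
print(f"HFoV: {horizontal_fov_deg(8.0, 4.0):.1f} deg")      # HFoV: 90.0 deg
print(f"Pixel pitch: {pixel_pitch_um(8.0, 3840):.2f} um")   # Pixel pitch: 2.08 um
```

For a fixed sensor size, more pixels means a smaller pitch, and smaller pixels generally collect less light each, which is one reason resolution and low-light sensitivity have to be traded off.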

Sensor interface

The choice of sensor interface is another critical factor that affects camera performance. MIPI CSI (CSI-1/CSI-2/CSI-3) is one of the most widely used sensor interfaces today, with CSI-2 by far the most common. Because MIPI CSI offers lean integration, several leading chip manufacturers, including NXP, NVIDIA, Qualcomm and Intel, have adopted it for industrial designs. CMOS sensors with MIPI CSI interfaces transfer data quickly from the sensor to the host SoC, enabling high throughput and better system performance. Where the sensor is physically separated from the platform that processes its signals, developers can use high-speed serial links such as GMSL and FPD-Link. These interfaces strongly affect overall camera performance, which is key to real-time video streaming applications.
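A first-order check when sizing a MIPI CSI-2 link is to compare the sensor's raw bit rate with the per-lane capacity. The sketch below assumes a 1.5 Gbit/s lane rate and a 20% allowance for blanking and protocol overhead; both are hypothetical placeholders, since the real figures depend on the D-PHY or C-PHY version supported by the specific sensor and SoC.

```python
import math

def raw_bitrate_bps(width: int, height: int, fps: float, bits_per_pixel: int) -> float:
    """Active-pixel bit rate of an uncompressed sensor stream (e.g. RAW10 = 10 bits)."""
    return width * height * fps * bits_per_pixel

def csi2_lanes_needed(bitrate_bps: float, lane_rate_bps: float = 1.5e9,
                      overhead: float = 1.2) -> int:
    """Number of lanes needed once blanking/protocol overhead is added.

    lane_rate_bps and overhead are ASSUMED example values, not spec limits.
    """
    return math.ceil(bitrate_bps * overhead / lane_rate_bps)

uhd = raw_bitrate_bps(3840, 2160, 30, 10)   # 4K at 30 fps, RAW10
print(f"{uhd / 1e9:.2f} Gbit/s -> {csi2_lanes_needed(uhd)} lanes")  # 2.49 Gbit/s -> 2 lanes
```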

Camera interfaces

Identifying the right camera interface for high-speed video streaming is equally critical. Options include USB 3.2, CoaXPress 2.0, Thunderbolt 3, DCAM, Camera Link HS (via a frame grabber) and Gigabit Ethernet, among others.
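A rough way to shortlist interfaces is to compare the required stream rate with each interface's nominal signalling rate. The sketch below uses nominal single-link rates for a few of the interfaces named above and an assumed 80% usable-throughput factor (a hypothetical figure; real efficiency depends on encoding, protocol and host controller). Camera Link HS and DCAM are left out because their throughput depends heavily on configuration.

```python
# Nominal signalling rates in Gbit/s for a single link/port.
NOMINAL_GBPS = {
    "Gigabit Ethernet": 1.0,
    "USB 3.2 Gen 2": 10.0,
    "CoaXPress 2.0 (CXP-12, one link)": 12.5,
    "Thunderbolt 3": 40.0,
}

def candidate_interfaces(required_gbps: float, efficiency: float = 0.8) -> list[str]:
    """Interfaces whose ASSUMED usable throughput covers the required rate, slowest first."""
    fits = [(rate, name) for name, rate in NOMINAL_GBPS.items()
            if rate * efficiency >= required_gbps]
    return [name for _, name in sorted(fits)]

# e.g. an uncompressed 4K30 RAW10 stream of roughly 2.49 Gbit/s
print(candidate_interfaces(2.49))
```

Picking the slowest interface that still fits tends to keep cost and cabling simple, while leaving the faster options for higher resolutions or frame rates.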

Signal processing engines

A variety of SoMs and SBCs based on leading GPUs, SoCs and FPGAs are available on the market and suit high-end camera designs. Identifying the right processor from this large pool can be difficult. The processing engine must support the camera system's ever-evolving video encoding, decoding and application processing requirements.

In addition, the processor should support key camera controls such as autofocus, auto exposure and white balance, along with noise reduction, pixel correction and colour interpolation.

Hardware-accelerated video encoding and streaming, for example on GPU-based platforms, has greatly benefited imaging applications, especially surveillance and machine vision, by delivering breakthrough performance. GPUs offer far more parallel computing power than CPUs, which is indispensable for high-performance, real-time systems.

NVIDIA Jetson, Qualcomm Snapdragon, and FPGAs from Xilinx, Intel, Microchip and Lattice Semiconductor are some of the leading platforms for high-end cameras used in vision analytics and industrial applications. Processing platforms that support hardware-accelerated media encoding and decoding are an ideal choice for camera developers.

Cameras for high-end surveillance applications must offer high resolution, high frame rates, industry-leading image compression and streaming capabilities, and low power consumption. Advanced low-power embedded processors with built-in video analytics give these cameras application-specific intelligence.

Such SoCs enable the system to record, stream and store only the necessary video sequences. These processing engines are gaining popularity among developers because they support real-time communication and give users quick situational awareness.

FPGAs, advanced DSPs and GPUs enable edge computing and, aided by advanced video analytics and AI algorithms, provide features such as intelligent motion detection, object detection and counting, and camera tamper detection.
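As a concrete example of edge-side motion detection, the sketch below uses simple frame differencing: it flags motion when enough pixels change by more than a threshold between consecutive grayscale frames. This is one of the simplest techniques, swapped in for illustration; deployed systems more often use background subtraction (OpenCV offers `cv2.createBackgroundSubtractorMOG2`, for instance). Both thresholds are hypothetical tuning values.

```python
Frame = list[list[int]]  # grayscale frame: rows of 8-bit pixel values

def motion_detected(prev: Frame, curr: Frame, pixel_threshold: int = 25,
                    min_changed_fraction: float = 0.01) -> bool:
    """Flag motion when the fraction of pixels that changed by more than
    pixel_threshold reaches min_changed_fraction (both ASSUMED values)."""
    total = changed = 0
    for row_prev, row_curr in zip(prev, curr):
        for p, c in zip(row_prev, row_curr):
            total += 1
            if abs(c - p) > pixel_threshold:
                changed += 1
    return total > 0 and changed / total >= min_changed_fraction

still = [[10] * 8 for _ in range(8)]
moved = [row[:] for row in still]
moved[3][3] = 200                          # one pixel = 1/64 of the frame changed
print(motion_detected(still, still))       # False
print(motion_detected(still, moved))       # True
```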

Cameras with edge computing are ideal for time-sensitive applications because they make intelligent decisions at the edge. Such systems are highly recommended for remote applications with limited or no connectivity to the cloud or a central command centre.

Some of the leading SoC makers and processors suited to real-time video streaming cameras:

Intel

Intel Movidius: Enables product developers to deploy deep neural network and computer vision applications in intelligent cameras and augmented reality devices.

NVIDIA

NVIDIA Jetson SoMs: Provide performance and power efficiency to build intelligent machine vision enabled systems.

NXP

i.MX 8 Series Applications Processors: Scalable multicore platform for advanced graphics, imaging, voice, video, machine vision and safety-critical applications.

Qualcomm

Qualcomm APQ/QCS application processors: Highly integrated platforms for compact designs that need powerful multi-core processing and next-generation computer vision; ideal for building high-end cameras, robotics and similar products.

Xilinx

Zynq UltraScale+: Provides real-time control with soft and hard engines for graphics, video, waveform and packet processing.

Arm

Arm offers a series of RISC processor cores that are implemented on several SoCs and SBCs.

- Cortex-A: 32-bit and 64-bit application processor cores suited to smart camera designs.

- Cortex-M: 32-bit processor cores optimised for low-cost, energy-efficient microcontrollers.

- Cortex-R: Processor cores optimised for hard real-time and safety-critical applications.

- Cortex-X: Arm's highest-performance CPU cores, aimed at demanding workloads such as AI-driven and connected cameras.

- Ethos-N: Neural processing units (NPUs) designed for complex machine learning workloads.

- Ethos-U: Micro NPUs designed for embedded AI applications.

- Mali Camera: High-performance Image Signal Processor (ISP) cores for AI-driven, connected cameras.

System software

Operating system

Despite the emergence of several alternative operating systems, Linux dominates the embedded world. It has been the preferred OS among embedded device developers for years because of its stability and its strengths in networking and communication. Developers building HD streaming cameras can use Linux and draw on the many Linux vendors that offer custom solutions based on different kernel releases.

As Linux is an open-source operating system, developers can easily and quickly make changes and redistribute it. One can meet the unique requirements of the project using Linux, while easily addressing critical factors including power consumption, data streaming, and any other challenges imposed by the hardware configuration or other software elements.

A few advantages of using Linux:

- As a multitasking, multi-threaded operating system, Linux is well suited to complex applications

- Linux makes it easy for developers to release software upgrades and manage compatibility with third-party systems

- As Linux is open source, implementing or porting the software is relatively inexpensive; most of the cost lies in development effort

- Linux allows easy customisation and integration of I/Os to meet specific requirements

- Linux enables faster time to market: developers can integrate readily available open-source software components with minimal customisation

Sensor drivers

Sensor driver development and integration is another crucial part of camera design. Most sensor modules ship with drivers; however, developers can also write a custom camera sensor driver to meet the specific needs of the end product.

Sensor drivers should address several key components such as clock frequencies, frame size, frame interval, signal transmission, stream controls, sensor power management and sensor control framework among others. In addition, developers have to address sensor interface design, protocol development and integration as well.
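Clock frequency, frame size and frame interval are tightly linked in a sensor driver: for most CMOS sensors, the frame rate equals the pixel clock divided by the total line length times the total frame length, where both totals include blanking. The sketch below computes that rate and the resulting frame interval as an exact numerator/denominator pair, the same shape V4L2 uses for `timeperframe` (a `struct v4l2_fract`). The register values are hypothetical, and the names for the two totals vary between sensor vendors.

```python
from fractions import Fraction

def frame_rate(pixel_clock_hz: int, line_length_px: int, frame_length_lines: int) -> Fraction:
    """Frame rate = pixel clock / (total line length x total frame length).

    Both totals include horizontal/vertical blanking, not just active pixels.
    """
    return Fraction(pixel_clock_hz, line_length_px * frame_length_lines)

# Hypothetical register values: 74.25 MHz pixel clock, 2200 x 1125 total timing
fps = frame_rate(74_250_000, 2200, 1125)
time_per_frame = 1 / fps   # frame interval as an exact fraction of a second
print(fps, time_per_frame.numerator, time_per_frame.denominator)  # 30 1 30
```

Keeping the interval as a fraction avoids rounding drift; for example, raising the frame length (vertical blanking) is a common way to lower the frame rate without changing the pixel clock.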

OpenCV

OpenCV, the open-source computer vision library, has become one of the most popular image processing platforms among developers of imaging solutions in recent years. Developers can use OpenCV to implement advanced image processing algorithms in their video streaming solutions; the library offers more than 2000 algorithms related to image processing. OpenCV is written in C++ and also provides Python and Java bindings, so developers can easily integrate it into their designs.

OpenCV lets developers focus on video capture, image processing and analysis, including features such as face detection and object detection. It stores, reads and writes images as n-dimensional arrays and provides image processing operations such as image conversion, blurring, filtering, thresholding, rotation, scaling and histogram equalisation.
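To show what one of these operations does internally, the sketch below implements global histogram equalisation in plain Python: it maps each grey level through the normalised cumulative histogram so the output levels spread across the full 0 to 255 range. OpenCV exposes this for 8-bit single-channel images as `cv2.equalizeHist`; this standalone version is for illustration only, and its rounding may differ slightly from OpenCV's.

```python
def equalize_hist(pixels: list[int], levels: int = 256) -> list[int]:
    """Global histogram equalisation of 8-bit grayscale values via the CDF."""
    if not pixels:
        return []
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1
    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)
    cdf_min = next(c for c in cdf if c > 0)   # CDF at the darkest occupied level
    span = len(pixels) - cdf_min
    if span == 0:                              # single-valued image: nothing to stretch
        return list(pixels)
    # Lookup table that makes the output CDF approximately linear over 0..levels-1
    lut = [max(0, int((c - cdf_min) / span * (levels - 1) + 0.5)) for c in cdf]
    return [lut[p] for p in pixels]

print(equalize_hist([50, 50, 100, 200]))  # [0, 0, 128, 255]
```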

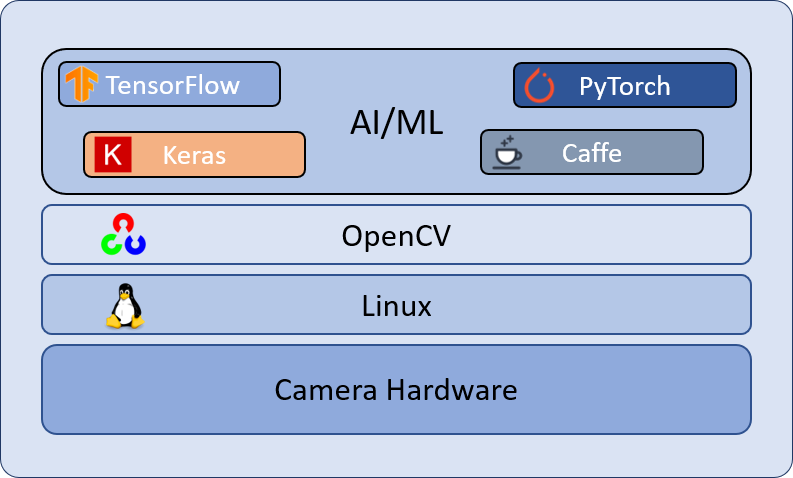

In addition to OpenCV, TensorFlow, Keras, PyTorch and Caffe are popular software frameworks that can be used in AI-powered streaming designs. These open-source frameworks help developers integrate machine learning, deep learning and artificial neural networks into their designs. Many leading SoC manufacturers now include OpenCV and some of these frameworks in their SDKs.

Algorithms

Algorithms can be implemented at two layers in a high performance, real-time video streaming camera design. While the first layer specifically caters to image processing, the second layer of algorithms adds intelligence to the visuals captured.

High-definition cameras capture images in great detail. However, the captured data may need further enhancement for effective analysis and accurate decision making. Visual algorithms for image correction and enhancement have become a baseline requirement for modern surveillance cameras. Commonly implemented algorithms include autofocus, auto exposure, histogram analysis, colour balancing and focus bracketing.
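Contrast-detection autofocus is a good example of such an algorithm: the lens is stepped through positions, a sharpness score is computed for each frame, and the position with the highest score wins. The sketch below uses gradient energy (sum of squared neighbouring-pixel differences) as the focus measure and a full sweep rather than the faster hill-climbing search real cameras typically use; the frames and lens positions are hypothetical test data.

```python
Frame = list[list[int]]

def focus_measure(img: Frame) -> int:
    """Gradient energy: sum of squared horizontal and vertical pixel differences.
    Better-focused images have stronger edges, hence larger scores."""
    h, w = len(img), len(img[0])
    score = 0
    for y in range(h - 1):
        for x in range(w - 1):
            dx = img[y][x + 1] - img[y][x]
            dy = img[y + 1][x] - img[y][x]
            score += dx * dx + dy * dy
    return score

def best_focus_position(frames_by_position: dict[int, Frame]) -> int:
    """Return the lens position whose frame has the highest focus measure."""
    return max(frames_by_position, key=lambda pos: focus_measure(frames_by_position[pos]))

sharp = [[0, 255] * 4 if y % 2 == 0 else [255, 0] * 4 for y in range(8)]  # checkerboard
blurry = [[128] * 8 for _ in range(8)]                                   # flat grey
print(best_focus_position({10: blurry, 20: sharp}))  # 20
```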

Some of the key image processing and enhancement techniques that can be implemented include:

- Bayer-to-RGB

- White balance

- Defective pixel correction

- Colour filter array interpolation

- Colour/Gamma correction

- Colour space conversion

- Image noise reduction

- Edge enhancement

- Video scaling

- Video windowing – Object tracking

- Image stabilisation

- Video compression

- Data encryption
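White balance, from the list above, can be sketched with the classic gray-world assumption: the average colour of a scene is taken to be neutral grey, so the red and blue channels are scaled until their means match the green mean. This is one simple technique chosen for illustration, not necessarily what a given ISP implements; it works on RGB pixels after demosaicing and clips results to 8 bits.

```python
RGB = tuple[int, int, int]

def gray_world_balance(pixels: list[RGB]) -> list[RGB]:
    """Scale R and B so their means match the G mean (gray-world assumption)."""
    if not pixels:
        return []
    n = len(pixels)
    mean_r = sum(p[0] for p in pixels) / n
    mean_g = sum(p[1] for p in pixels) / n
    mean_b = sum(p[2] for p in pixels) / n
    gain_r = mean_g / mean_r if mean_r else 1.0   # avoid dividing by an empty channel
    gain_b = mean_g / mean_b if mean_b else 1.0

    def clip(v: float) -> int:
        return max(0, min(255, round(v)))

    return [(clip(r * gain_r), g, clip(b * gain_b)) for r, g, b in pixels]

print(gray_world_balance([(100, 200, 50), (100, 200, 50)]))
# [(200, 200, 200), (200, 200, 200)]
```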

The second layer of algorithms runs on advanced surveillance cameras that use complex, AI-powered visual analytics to provide real-time situational awareness. These systems combine video capture, processing, video analytics and real-time communication for effective situational awareness and decision making.

These cameras are increasingly used for automated fire and smoke detection, people counting, safe-zone monitoring, facial recognition, human pose estimation and scene recognition.

AI and ML: providing intelligence at the edge

One of the emerging trends in surveillance and industrial cameras is the smart camera: a system with enough computational power and integrated AI algorithms to make intelligent decisions at the edge. Artificial intelligence, coupled with machine learning and deep neural networks, is greatly enhancing video streaming designs by giving the system superior intelligence.

These technologies can streamline the video encoding and transmission workflow by managing video content and reducing dependency on a human operator. AI algorithms detect, identify and classify specific cues in the video and tell the processor which data to record, encode and transmit to the user or cloud. This reduces the transmission load, ensures that only significant data is gathered and stored, and eases the burden on storage infrastructure.
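The "record and transmit only what matters" idea can be sketched as an event gate placed in front of the encoder: frames are held in a short ring buffer and forwarded only around detections, so the stored clip includes a little context before and after each event. The `score` input stands for any per-frame detector confidence; the threshold and buffer lengths are hypothetical tuning values.

```python
from collections import deque

class EventRecorder:
    """Forward frames only around detections, with a short pre-event buffer.

    threshold, pre_frames and post_frames are ASSUMED example values.
    """

    def __init__(self, threshold: float = 0.5, pre_frames: int = 2, post_frames: int = 1):
        self.threshold = threshold
        self.post_frames = post_frames
        self.buffer = deque(maxlen=pre_frames)  # oldest frames drop out automatically
        self.remaining_post = 0

    def push(self, frame, score: float) -> list:
        """Return the frames to encode/transmit after seeing this frame."""
        if score >= self.threshold:
            out = list(self.buffer) + [frame]   # include pre-event context
            self.buffer.clear()
            self.remaining_post = self.post_frames
            return out
        if self.remaining_post > 0:             # keep sending briefly after the event
            self.remaining_post -= 1
            return [frame]
        self.buffer.append(frame)               # idle: only remember recent frames
        return []

rec = EventRecorder()
sent = []
for i, score in enumerate([0.1, 0.1, 0.1, 0.9, 0.1, 0.1]):
    sent += rec.push(f"frame{i}", score)
print(sent)  # ['frame1', 'frame2', 'frame3', 'frame4']
```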

Key performance and feature considerations

HD / 4K Video Streaming

High-definition (HD) and 4K video streaming is becoming more popular and more mature. However, in industrial and surveillance applications, real-time HD video streaming poses major challenges because of the enormous amount of data the system must handle. In most cases this data needs to be transmitted to a remote location with minimal latency, which is one of the major challenges in HD/4K video streaming.

This can be addressed to a great extent by selecting an appropriate processor, preferably an FPGA. FPGAs offer several benefits over conventional processors, and developers can integrate complex video analytics algorithms directly in the device. A large number of logic cells, embedded DSP blocks and flexible connectivity options make the FPGA a powerhouse for fast image processing and real-time HD video streaming.

Video compression

Raw video is digitised, compressed and packetised by the processor for faster transmission to the remote monitoring system. Cameras use various video compression technologies and algorithms, and the host PC decodes the stream back to its original resolution and format (with some loss of detail when a lossy codec is used).

Developers can use standards such as MJPEG, MPEG-4, H.263, H.264 and H.265 for video compression, as well as vendor-specific enhancements such as H.265+.
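A quick calculation shows why compression is unavoidable. Uncompressed 8-bit YUV 4:2:0 video averages 12 bits per pixel (full-resolution luma plus quarter-resolution Cb and Cr), so a 4K30 stream runs at nearly 3 Gbit/s. The 16 Mbit/s encoded bitrate below is an assumed figure chosen only to illustrate the ratio; actual bitrates depend on codec, scene content and quality settings.

```python
def uncompressed_bps(width: int, height: int, fps: float, bits_per_pixel: float) -> float:
    """Bit rate of an uncompressed video stream."""
    return width * height * fps * bits_per_pixel

raw = uncompressed_bps(3840, 2160, 30, 12)   # 4K30, 8-bit YUV 4:2:0
encoded = 16e6   # ASSUMED encoded bitrate, for illustration only
print(f"raw {raw / 1e9:.2f} Gbit/s, compression ratio ~{raw / encoded:.0f}:1")
# raw 2.99 Gbit/s, compression ratio ~187:1
```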

Video streaming protocols

Several video streaming protocols can be implemented in cameras to stream video in real time over a network. Commonly used protocols include the Real-Time Streaming Protocol (RTSP), a control protocol between the streaming server (the camera) and the client (the host PC) that manages efficient delivery of streamed multimedia; the Real-time Transport Protocol (RTP), which carries the media stream over the network; and HTTP, which defines rules for transferring multimedia files, including images, sound and video, over IP networks. RTSP, used together with RTP and the RTP Control Protocol (RTCP), supports low-latency streaming, making it an ideal choice for high-speed streaming camera designs.
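To make the RTP layer concrete, the sketch below decodes the 12-byte fixed RTP header defined in RFC 3550 (section 5.1): version, padding, extension and CSRC-count bits, then the marker bit, payload type, 16-bit sequence number, 32-bit timestamp and SSRC, all in network byte order. The example packet is hand-built; payload type 96 is simply a value from the dynamic range commonly used for H.264/H.265 video. Sequence numbers let the receiver detect loss and reordering, while timestamps (typically on a 90 kHz clock for video) drive playout timing.

```python
import struct
from dataclasses import dataclass

@dataclass
class RtpHeader:
    version: int
    padding: bool
    extension: bool
    csrc_count: int
    marker: bool
    payload_type: int
    sequence: int
    timestamp: int
    ssrc: int

def parse_rtp_header(packet: bytes) -> RtpHeader:
    """Decode the 12-byte fixed RTP header (RFC 3550, section 5.1)."""
    if len(packet) < 12:
        raise ValueError("packet shorter than the fixed RTP header")
    b0, b1, seq, ts, ssrc = struct.unpack("!BBHII", packet[:12])  # network byte order
    return RtpHeader(
        version=b0 >> 6,
        padding=bool(b0 & 0x20),
        extension=bool(b0 & 0x10),
        csrc_count=b0 & 0x0F,
        marker=bool(b1 & 0x80),
        payload_type=b1 & 0x7F,
        sequence=seq,
        timestamp=ts,
        ssrc=ssrc,
    )

# Hand-built example: version 2, marker set, dynamic payload type 96
pkt = struct.pack("!BBHII", 0x80, 0x80 | 96, 1234, 90000, 0xDEADBEEF)
hdr = parse_rtp_header(pkt)
print(hdr.version, hdr.marker, hdr.payload_type, hdr.sequence, hdr.timestamp)
# 2 True 96 1234 90000
```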

Small form-factor, low-power design

Today, embedded product developers are competing to shrink their products while greatly increasing their efficiency. The advent of low-power, small form-factor multi-core SoCs, graphics accelerators and memory technologies is helping designers build compact, power-efficient cameras.

Industrial cameras, and to a great extent surveillance cameras, demand low-power designs because of how they are deployed. Cameras designed for demanding environments must dissipate little energy and maximise battery life.

Developers can also consider Power over Ethernet (PoE) for wired camera designs. Surveillance systems rely heavily on either NVRs or cloud storage to save video footage for future reference and analysis. Because PoE carries both power and data over a single Ethernet cable, the camera stays powered whenever it is connected to the network. Developers can choose PoE or PoE+ for their design.

PoE (IEEE 802.3af) supplies up to 15.4 W per port (about 12.95 W available at the camera), while PoE+ (IEEE 802.3at) supplies up to 30 W (about 25.5 W at the camera). The developer can choose the standard based on the camera configuration and application. PoE cameras also make installation easier and less messy for end users, as PoE eliminates the need for a separate power cable.
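Choosing between PoE and PoE+ comes down to a power-budget check against what the camera can actually receive after cable losses. The sketch below compares the camera's peak draw (which should include loads such as IR illuminators or heaters, if fitted) plus a safety margin against the powered-device budgets of each standard. The 10% margin is an assumed design value, not a requirement of either standard.

```python
from typing import Optional

# Power available at the powered device (camera) after worst-case cable loss, in watts.
POE_PD_BUDGET_W = {
    "PoE (IEEE 802.3af)": 12.95,
    "PoE+ (IEEE 802.3at)": 25.5,
}

def select_poe(camera_peak_w: float, margin: float = 0.10) -> Optional[str]:
    """Return the lowest PoE standard that covers peak draw plus an ASSUMED margin."""
    needed = camera_peak_w * (1 + margin)
    for name, budget in sorted(POE_PD_BUDGET_W.items(), key=lambda kv: kv[1]):
        if needed <= budget:
            return name
    return None  # exceeds PoE+: needs a higher-power PoE variant or a local supply

print(select_poe(8.0))    # PoE (IEEE 802.3af)
print(select_poe(12.5))   # PoE+ (IEEE 802.3at), since 13.75 W > 12.95 W
```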

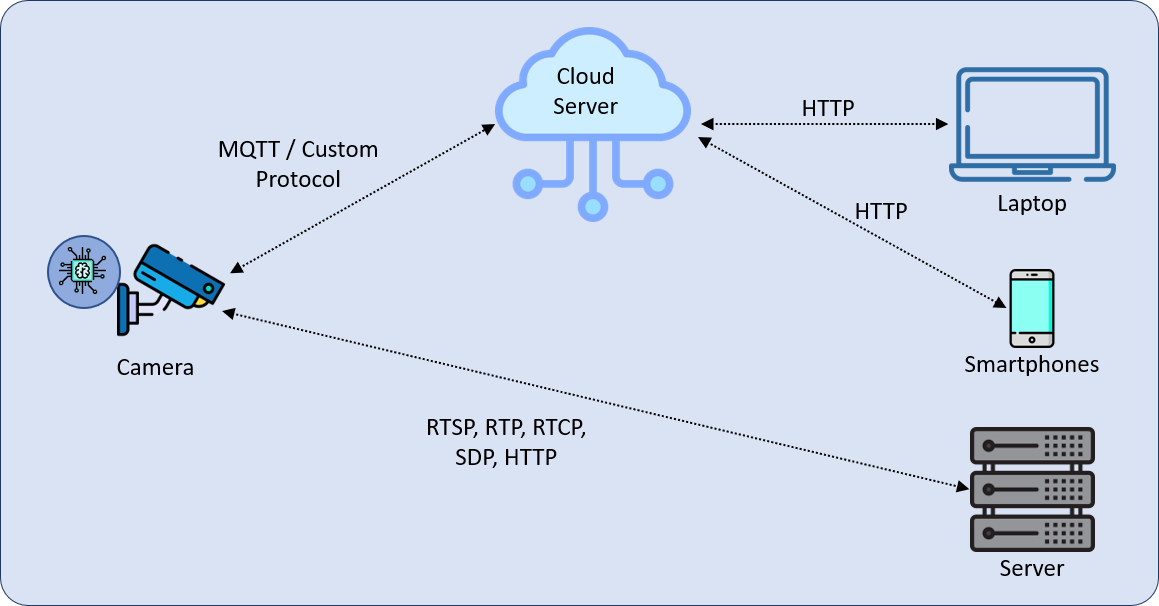

Cloud integration

The cloud provides the flexibility and versatility to deploy systems, store data across multiple locations and integrate advanced video analytics, which enhances overall system performance. Cloud-based systems leverage cloud computing technologies, AI algorithms and computer vision to provide cutting-edge surveillance solutions.

AI algorithms extract greater intelligence from the captured data, enabling users to gather useful insights. However, unlike edge computing, cloud computing adds network latency, which can delay decision-making by one or two seconds. Nevertheless, the advantages are many.

One of the critical challenges of video surveillance has been the need for substantial infrastructure, especially hardware for data storage and computing. The cloud offers an easy and affordable solution, enabling users to store and access data anytime, anywhere without worrying about storage limitations. Cloud solutions also provide redundancy and greater reliability. AWS, Microsoft Azure, Google Cloud and IBM Cloud are among the leading cloud service providers a developer can consider.

Video streaming designs require multiple disciplines

Real-time high-definition video streaming is a need of the hour. From industrial robots to sophisticated drones, and from security and surveillance to medical applications, high-quality imaging plays a crucial role in the embedded world. Advanced cameras can capture and transfer large amounts of data over high-speed industrial interfaces and protocols. This article set out to explain the fundamental components and key design considerations in developing a high-performance, real-time video streaming camera for surveillance applications. By using leading SoCs and the latest sensors and integrating various image processing and streaming platforms, one can develop a state-of-the-art camera for real-time video streaming. Mechanical design, including the sensor housing, and compliance with environmental and temperature standards are also critical to a successful camera design.

As a developer one should have expertise in selection and integration of camera sensors, HD video streaming protocols, implementation of system software and sensor drivers, development and integration of camera interfaces, image signal processing, sensor tuning, image/video compression algorithms, image enhancement techniques, etc. to realise a high-end real-time video streaming design.