The many faces of autonomy

Autonomous systems conjure up a future filled with self-driving cars, humanoid robots, and buzzing drones bearing packages from Amazon. The underlying technology continues to mature at a rapid pace, and in doing so is pulling designers in hundreds of new directions. In fact, the sheer number and variety of design options raises an interesting question: what does 'autonomy' actually mean to us?

By Jim Tung, MathWorks Fellow

Practically speaking, autonomy is the power of self-governance - the ability to act independently of direct human control, and in unrehearsed conditions.

This is the major distinction between automated and autonomous systems.

An automated robot, working in a controlled environment, can place the body panel of a car in exactly the same place every time.

An autonomous robot also performs tasks it has been 'trained' to do, but can do so independently and in places it has never before ventured.

Even so, there is no one-size-fits all approach to designing - or defining - autonomous systems. In some cases, the goal is to remove human engagement; in others it’s to augment our physical and intellectual abilities.

In all instances, however, the utility of autonomous systems is bound by how much data is collected, and what value can be extracted from that data.

The following use cases provide a glimpse into the diverse nature of autonomous systems, and how the desired outcomes influence their design.

The building blocks of an autonomous system

What does it take to develop and deploy autonomous technology? Let’s start with a common example - the self-driving car.

The first requirement is to give the vehicle the ability to sense the world around it. This includes the use of cameras so the car can see where it is going and GPS so it knows where it is.

The car needs RADAR to measure distance and the relative motion of other objects, and LiDAR to build an accurate 3D model of the surrounding terrain. That’s a tremendous amount of data that must be collected, analysed and fused into a single coherent information stream that converts sensed data into perceived reality.

Taken by itself, this network of sensors may seem more like an example of automation than autonomy.

This is where machine learning and deep learning algorithms come into play, interpreting that data to determine phenomena such as lane location and the relative speed and position of other vehicles, read traffic signals, and perhaps mostly importantly, locate pedestrians.

Now that the car knows where it is and what is around it, it needs to decide what to do. If it senses an obstruction ahead, should it brake or swerve? Again, learning algorithms can be trained to react to new situations.

This is why companies like Google’s Waymo are driving millions of miles with their self-driving vehicles. They are teaching the car self-direction by training their algorithms on as many situations as possible.

How much autonomy is right for you?

Because a truly autonomous system must act without human intervention, another question arises: who or what - decides when it’s safe to change lanes or plans your best route home from work? In short, a computer does.

Autonomous technology fundamentally shifts responsibility from humans and lets us take advantage of computers and their ability to deliver business and societal benefits.

One example of this relates to a subject many might never expect would embrace autonomous technology – the field of art history.

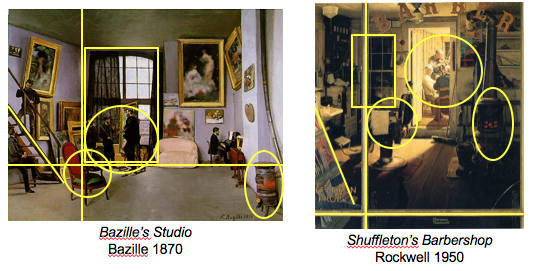

Take a look at these two paintings. Do you see the influences of one on the other? Well, a number of experienced art historians could not. Let’s look closer: both paintings include a chair, a group of three men, and a stove in a similar overall orientation.

Both paintings include a similar structural element of a rectangular window, and the composition is similar.

It turns out that a computer, not an art historian, picked up on this possible influence of French impressionist painter, Jean Frédéric Bazille, on Norman Rockwell.

The challenge of studying influence among artists and works of art is that there are practically infinite permutations of art and artists to explore.

As a result, art historians rely heavily on factors, like when and where the artists lived, and with whom they studied. If a computer could be trained to patiently compare paintings for all potential lines of influence, art historians could identify new areas of investigation they might not have previously considered.

Determining how much data is enough

There are other benefits that come from creatively applying computer perception, and interestingly, they don’t always require massive amounts of data.

In some cases, it’s more important to sort the data first to determine what will deliver the best outcome.

Take the case of Baker Hughes, one of the world's largest oil field service providers. At the heart of the company’s oil and gas extraction rigs lies a series of pumping stations.

These stations rely on mission-critical valves. The valves only cost $200 each but can trigger hundreds of thousands of dollars in repair costs if they fail.

Because of this, Baker Hughes needed a means to make better decisions on when to service its pumps in order to reduce maintenance costs and improve asset management and equipment up-time.

Rather than continuing to store multiple, idle back-up pumps at the well site, was there a way that rig operators could monitor pump health and predict failures?

As it turned out, Baker Hughes had been collecting reams of data from multiple rigs related to temperature, pressure, vibration, timing and other values. So much data, in fact, that it was difficult to extract information that would lead to an actionable outcome.

To winnow these data sets, Baker Hughes eliminated extraneous values related to things like large movements of the rig, pump and fluid. That helped them better detect the smaller, more meaningful vibrations coming from the valves and valve seats.

By drastically reducing the size of the data sets they needed to work with, the company discovered that only three sensors were required to develop perception algorithms that could distinguish a healthy pump from one that needed to be serviced.

Similar to the art history use case, autonomous technology in this instance did not take direct action, it provided the pump operator with insight into the machinery’s condition. This allowed him to make more informed maintenance decisions and determine how many back-up pumps were required at the well site - actions that drove $10m in reduced service costs.

As these examples show, autonomous systems will take as many forms as the human imagination can summon.

In each case, a series of decisions will determine form and function: how much autonomy does the system require; what is being sensed; how is that sensed data converted into perceived reality; what data - and how much - is most relevant to your outcome?

In short, what inputs will help your autonomous system make good decisions?