Living on the edge with AI

As Richard Kingston, VP of Market Intelligence at CEVA, explains, we have entered the fourth Industrial Revolution. And, like the three that went before it (mechanisation; mass production and electricity; computers and automation), Industry 4.0 is set to change the way we live.

What were once figments of our imagination, such as men landing on the moon, have since come to fruition. Today, as we envisage a world of autonomous driving and dialogue with robots, it’s not a huge leap of faith to picture what the world of tomorrow may look like.

Integral to how Industry 4.0 will shape our future is the transition of knowledge and information. Historically, knowledge was stored on paper in universities and libraries, and was processed and utilised by humans. Nowadays, knowledge is stored in modern data centres, where it is put to good use by devices. “It’s a huge shift in the idea of digitisation and it’s changing the world,” said Kingston.

That cloud-based knowledge, or data, is now spread across a multitude of industries and applications, from financial services and healthcare to retail and the home. The question, however, is where that knowledge will be processed - in the cloud or at the edge via Artificial Intelligence (AI)? The reality is that it will be both. Kingston added: “In the future edge devices will be processing data and making decisions themselves and, on top of that, they will be learning as they go and will feed back into the knowledge base in the cloud, which will be used as it rolls out the next network for a particular end market. I saw some data recently that stated that, by 2022, around 50% of all AI devices will have on-device learning - if that’s right it’s a pretty big number.”

Processing AI at the edge, rather than transferring data to the cloud, helps to solve several issues:

- Latency: vital in safety critical use cases

- Privacy: devices shouldn’t be sending private data to the cloud

- Security: transferring data to the cloud is susceptible to data hacks

- Network coverage: edge devices can keep working when there is no link to the cloud

- Cost

Kingston continued: “If you’re relying on connectivity to the cloud to perform a lot of AI processing there will be a number of issues. Latency is a huge problem in the automotive industry, for example. You also don’t want private data sitting in the cloud etc.”
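To make the trade-off concrete, the issues listed above can be expressed as a simple placement rule. The following is only an illustrative sketch, not CEVA's method: the `InferenceRequest` type, the `choose_target` function and all of the numbers are hypothetical, and cost is left out because the article gives no figures for it.

```python
from dataclasses import dataclass


@dataclass
class InferenceRequest:
    """Hypothetical description of one AI workload (illustrative fields only)."""
    latency_budget_ms: float      # maximum acceptable response time
    contains_private_data: bool   # e.g. faces or voice recordings
    cloud_reachable: bool         # is there currently a link to the cloud?


def choose_target(req: InferenceRequest,
                  cloud_round_trip_ms: float,
                  cloud_compute_ms: float) -> str:
    """Return "edge" or "cloud" for one request."""
    # Network coverage: with no link, the edge is the only option.
    if not req.cloud_reachable:
        return "edge"
    # Privacy and security: private data stays on the device.
    if req.contains_private_data:
        return "edge"
    # Latency: the full cloud path must fit inside the budget.
    if cloud_round_trip_ms + cloud_compute_ms > req.latency_budget_ms:
        return "edge"
    return "cloud"


# Made-up numbers: a safety-critical automotive task with a 20 ms budget
# cannot wait for an assumed 60 ms network round trip.
braking = InferenceRequest(latency_budget_ms=20,
                           contains_private_data=False,
                           cloud_reachable=True)
print(choose_target(braking, cloud_round_trip_ms=60, cloud_compute_ms=5))
```

In this sketch any single issue is enough to keep the work on the device, which mirrors the point that cloud connectivity alone cannot serve latency-critical or privacy-sensitive use cases.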

Edge AI will also have numerous use cases, for example:

Smart surveillance

- Face detection

- Voice biometrics

- Sound detection

- Motion sensing

- Connectivity

Automotive

- Vision sensors

- Communications

- Radar and LIDAR

- GPS

- Connectivity - Bluetooth, WiFi, cellular

- Data fusion and analysis

AI at the edge is being added via smartphones as neural network engines and accelerators start to go mainstream, which is a huge leap forward for industry adoption of edge processing. Apple and Huawei have added a dedicated neural network engine that is being used for face identification. This is all done locally on the device and has the advantages of being secure, private and immediate.

Qualcomm and NVIDIA have also announced neural engines for smartphones and other mobile devices, and CEVA predicts that in a few years every camera-enabled device will include vision processing and neural net processing for AI. One in three smartphones will be AI-capable by 2020.

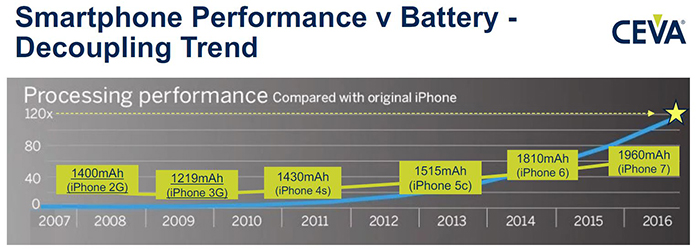

In addition, over a similar time frame, performance and power efficiency are expected to increase by up to ten times. This is going to be crucial, as processing performance in smartphones has increased at a vastly higher rate than battery capacity. Kingston continued: “There’s a massive disparity between what’s happening with batteries and processing power, and this is only going to get worse. So when you have to add a neural network engine and run a lot of AI in real time on these types of devices, you’re going to be draining the battery faster than ever before, and if battery evolution continues at the same pace, then you’re going to have a device that’s not going to last very long. So, it’s something that needs to be addressed from both the processing aspect and the battery technology.” (see below).

The CEVA approach

There are four main elements involved in AI at the edge - communications, sensor processing, data fusion and analysis, and acting / implementing. “We approach the first three of these elements at CEVA and try and deal with them.

“Looking at the bigger picture, we address the AI market with three challenges in mind that face AI at the edge - power consumption, affordability and increasing performance requirements.” CEVA does this by enabling technologies from the devices to the infrastructure such as sound, connectivity and wireless infrastructure.

However, a key focus currently is vision systems - computer vision DSPs, neural network accelerators scaling from mobile to automotive, and an NN framework.

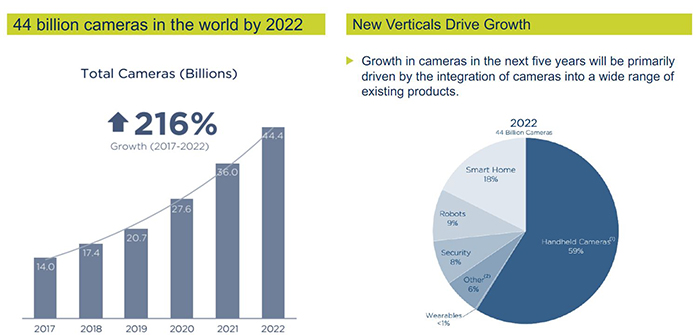

The number of cameras in the world is expected to grow by 216% between 2016 and 2022, by which time it is estimated that there will be some 44 billion camera-enabled devices. This growth will be driven by new verticals as cameras begin to be integrated into a growing number of different devices (see below).
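As a quick sanity check on these figures, the 2016 starting point implied by the forecast can be worked out directly. This is a back-of-envelope sketch: it assumes that "grow by 216%" means an increase of 216% on top of the 2016 figure, and the function name `implied_baseline` is purely illustrative.

```python
def implied_baseline(final_count: float, growth_percent: float) -> float:
    """Starting value implied by a final value and a percentage increase."""
    # An increase of g% multiplies the starting value by (1 + g/100).
    return final_count / (1 + growth_percent / 100)


final_2022 = 44e9   # camera-enabled devices forecast for 2022
growth = 216        # percentage growth between 2016 and 2022

baseline_2016 = implied_baseline(final_2022, growth)
print(f"{baseline_2016 / 1e9:.1f} billion")  # prints "13.9 billion"
```

Under that reading, the forecast implies roughly 14 billion cameras in 2016. If the figure were instead read as growing to 216% of the 2016 total, the implied baseline would be about 20.4 billion.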

CEVA’s CEVA-XM Vision DSP offers a holistic solution to computer vision and the workloads of AI. It combines imaging and vision software libraries, applications, the CEVA deep neural network and hardware accelerators to provide a scalable vision and deep learning solution. The CEVA-XM Computer Vision and NN Ecosystem is an open platform and has already achieved design wins across several end markets, including drones, surveillance, automotive, smart home and robotics.

CEVA has also recently announced a partnership with LG for its smart 3D cameras, which will employ multi-core CEVA-XM4 vision DSPs. The partnership also enables LG to deploy in-house algorithms on merchant silicon, which helps to lower costs. CEVA has also teamed up with startup Brodmann 17, which provides deep learning software on embedded devices. Brodmann 17 has been able to achieve accuracy using the CEVA-XM at a rate of 100 frames per second. CEVA claims this is 170% faster than NVIDIA’s Jetson TX2.

Kingston concluded: “We are going to see a lot more AI-powered devices coming onto the market - in the form of smartphones, drones, ADAS and surveillance. Also, at CEVA we are working on next-generation computer vision and neural networking, particularly neural network technology, as there are still huge improvements to be made in this technology and in how you build the most efficient systems to train networks in data centres.

“We want to invest in the AI ecosystem that we’re already building around our portfolio and hopefully we will also be able to buy some additional technology to add to what we already offer, so that we can help our customers to get to market quicker. We’ll see what happens over the next few years, but the days of CEVA being just a DSP-core communications company are a long way behind us now when you see the breadth of technologies that we offer in different areas.”