3D surround sensing

Josef Stockinger, Sr. Technical Marketing Manager, ADAS, STMicroelectronics, dispels some myths around the current expectations of autonomous driving.

Accident statistics show that 76% of accidents are the fault of drivers, and in 94% of accidents, some human error is involved. Therefore, customers’ expectation is that autonomous cars will make driving safer. Elon Musk claims that Tesla’s “Autopilot” makes driving much safer. On 1st July BMW, Intel and Mobileye announced their cooperation to target production of a fully autonomous car for 2021. So why does BMW target for 2021 what Tesla already offers in 2016?

There seems to be a basic misunderstanding of what currently available state of the art sensors and autonomous driving systems are built for, and what average customers expect from marketing phrases like Tesla’s “Autopilot”. This article discusses the current status of these sensors and the improvements that are coming.

Levels of autonomous driving

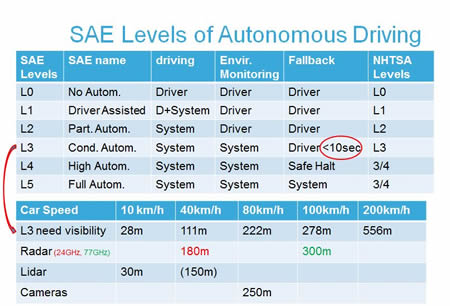

So, what do SAE and NHTSA define as levels of autonomous driving? The SAE and NHTSA definitions are similar (see chart). The levels are differentiated by who does the driving, who monitors the environment and who provides the fallback for the automated system.

Tesla’s “Autopilot” is a level 2 system, rendering the marketing phrase “Autopilot” highly debatable. Level 3, 4 and 5 systems must be fail-operational. This requires the system’s sensors to be fail-operational as well, or redundant sensing to be available. The sensors need to avoid both false positive and false negative object detections. The sensors of level 1 and level 2 are not fail-operational and accept false negatives.

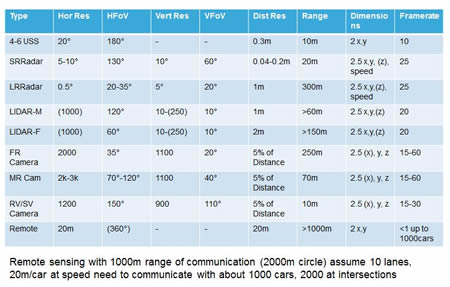

So what are the currently available sensors for level 1 and level 2 cars, and what needs to improve on these for level 3 and higher? For the discussion of sensor axes, the usual body-frame axis convention for land vehicles is used, with the x coordinate in the driving direction, the y coordinate in the lateral direction and the z coordinate straight upwards. Correct information on z is vital for the autonomous driving car.

Sensor types

Short Range Radar Sensors (SRR): These sensors are newly developed, are set to replace ultrasonic sensors (USS) and will be used in autonomous driving systems. The system is expected to use four SRRs, one at each corner of the car, and optionally others. These SRRs mainly use the 79GHz radio frequency (RF) band (4GHz bandwidth, 4cm x resolution), but current worldwide regulation is still limited to 77GHz (1GHz bandwidth, 20cm x resolution). Two to three transmitters are combined with four receivers in each sensor in one microwave monolithic IC (MMIC).
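The link between bandwidth and x (range) resolution can be illustrated with the textbook relation c / (2 × bandwidth). The following is a minimal sketch, assuming that ideal relation; the function name is made up for this illustration. The ideal figures come out at roughly 3.7cm for 4GHz and 15cm for 1GHz, so the 4cm and 20cm quoted above should be read as practical values that include some margin (that reading is an assumption, not something the figures themselves state).

```python
# Ideal radar range resolution from sweep bandwidth: c / (2 * B).
# This is the textbook lower bound; real sensors are somewhat coarser.
C = 299_792_458.0  # speed of light in m/s


def range_resolution_m(bandwidth_hz: float) -> float:
    """Return the ideal range resolution in metres for a given bandwidth."""
    return C / (2.0 * bandwidth_hz)


for label, bandwidth in (("79GHz band, 4GHz bandwidth", 4e9),
                         ("77GHz band, 1GHz bandwidth", 1e9)):
    print(f"{label}: {range_resolution_m(bandwidth) * 100:.1f} cm")
```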

There is debate about whether to integrate the baseband processing into the sensor, or to use low voltage differential signaling (LVDS) interfaces like those used for camera systems and process the intermediate frequency data in a central ECU. If the baseband processing is centralised, more processing capability, and hence flexibility, is available in the ECU, and the power dissipation inside the radar sensor is reduced.

The development targets of SRR sensors are fail-safe and fail-operational modes, and it seems possible to reach those targets. In a mid-range mode, the SRR sensor may reach up to 80m distance at reduced resolution and increased transmitter power level.

A migration from the current 24GHz MRR to 79GHz MRR is expected. The new 79GHz SRR addresses the vertical dimension (the z axis) with limited resolution. The direct speed measurement is another advantage of any radar-based sensor versus all other sensing technologies.

Long Range Radar Sensors (LRR): Level 2 LRR designs accept false negatives to avoid false positives and are limited to 2D recognition with limited to no vertical resolution.

New LRR designs use MMICs similar to those in SRR designs. Several of these MMICs can be combined in an LRR to increase horizontal resolution and introduce vertical resolution. To differentiate objects on lanes with a width of 3.5m at >200m distance, a 0.2°-0.5° horizontal resolution is needed.

With current level 2 LRR sensors, passing a toll gate or entering a parking lot with height control may trigger a short, noticeable braking action that is intended not to stop the car (which would risk acting on a false positive) but to warn the driver. Most current LRRs can’t measure along the z axis, so they can’t differentiate between a height control metal bar with sufficient overhead clearance (e.g. 2.5m) to allow the car to pass under, and a closed bar (e.g. one metre).

Depending on the speed, a reduced VFoV and a vertical resolution of <1.5° (five metres of height resolution at 200m) may be preferred. New MMICs offer self-check functions to support the functional safety requirements.
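The angular figures quoted for the LRR can be checked with simple trigonometry. The sketch below is illustrative only, and the function names are invented for it. It converts an angular resolution into the size of one resolution cell at a given distance, and the other way round. With these formulas, 1.5° at 200m comes out at a little over five metres, and a 3.5m lane at 200m subtends about 1°, which is why a 0.2°-0.5° resolution is needed to place several cells across one lane.

```python
import math


def cell_size_m(resolution_deg: float, distance_m: float) -> float:
    """Extent in metres covered by one angular resolution cell at a distance."""
    return 2.0 * distance_m * math.tan(math.radians(resolution_deg) / 2.0)


def subtended_angle_deg(extent_m: float, distance_m: float) -> float:
    """Angle in degrees under which an object of a given extent is seen."""
    return math.degrees(2.0 * math.atan(extent_m / (2.0 * distance_m)))


print(f"1.5 deg at 200 m covers {cell_size_m(1.5, 200.0):.2f} m")
print(f"3.5 m lane at 200 m subtends {subtended_angle_deg(3.5, 200.0):.2f} deg")
for res in (0.2, 0.5):
    print(f"{res} deg at 200 m covers {cell_size_m(res, 200.0):.2f} m")
```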

Rear View Cameras (RVC) and Surround View Cameras (SVC): RVC and SVC with 2D ground projection and static/dynamic guiding line overlays have been around for quite some time. What is new is the capability to show a virtual 3D impression video.

Further evolutions of RVC and SVC will use video analytics to allow low speed maneuvering during autonomous level 2 parking. High dynamic range imagers of >130dB are needed to operate in bright sunlight. The latest imagers can support up to 150dB dynamic range, with 24-bit image signal processor (ISP) processing reaching 144dB. Older RVC/SVC support only some 85-105dB.
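The 144dB figure follows directly from the 24-bit word length, since each bit of a linear representation adds about 6dB of dynamic range. A minimal sketch, assuming a purely linear encoding (the function names are illustrative):

```python
import math


def dynamic_range_db(bits: int) -> float:
    """Dynamic range in dB representable by a linear word of the given width."""
    return 20.0 * math.log10(2 ** bits)


def bits_needed(dynamic_range_in_db: float) -> int:
    """Smallest linear word width that covers the given dynamic range."""
    return math.ceil(dynamic_range_in_db / (20.0 * math.log10(2)))


print(f"24 bits cover {dynamic_range_db(24):.1f} dB")
print(f"130 dB needs at least {bits_needed(130.0)} bits")
```

On this basis, 24 bits cover about 144.5dB, and a 130dB scene needs at least 22 bits if it is stored linearly.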

During darkness, the image sensor requires a high sensitivity. The current reference is a minimum die illumination of 1mlx at SNR1 with 30fps. The high sensitivity combined with the wide dynamic range is reached by combining large pixels (>10µm² versus <1µm² used for standard image sensors) with the latest imager technology that offers maximum quantum efficiency. Extending the spectral range into the near infrared can further improve the sensitivity in darkness. With SfM (structure from motion) algorithms applied, a single moving camera can generate a high precision, full resolution 3D image. Special pixel designs of the latest imagers allow flicker-free video of LED and laser light sources pulsed at frequencies from the frame rate (30Hz) up to the kHz range.

The cameras for virtual mirrors that are about to replace the traditional wing mirrors also belong to this class of vision oriented cameras. The optics of the virtual mirror cameras are slightly different from the ones of the RVC and SVC because they have to meet the same legal requirements as the mirrors.

Mid-Range Cameras (MRC) and Far-Range Cameras (FRC): The MRC and FRC are connected to a machine vision system that detects, classifies and measures distances to objects like other cars, bikes, pedestrians, road marks, side rails, bridge and overpass clearance, shoulders or driving lanes. Traffic sign recognition and traffic light detection also result from processing the videos from these cameras. The MRC’s purpose is cross traffic alert, pedestrian protection, emergency braking, lane keeping and traffic light detection, whereas the FRC supports traffic sign recognition, video adaptive cruise control and long distance driving path finding. The machine vision system can use the RAW colour filter data as it comes out of the imager. Today, RCCC colour filter arrays are used, but in the future MRC and FRC will use more elaborate colour filter arrays including IR sensitive pixels.

The main difference between MRC and FRC is the optics used: about 35° HFoV for the FRC and 70°-120° for the MRC. The MRC optics depend on the targeted visibility for cross traffic detection and pedestrian protection. For an HFoV of >100°, imagers in the 3MPix range will be required. As explained by Mobileye’s CTO Amnon Shashua in his keynote at the 2016 IEEE conference on computer vision and pattern recognition (CVPR2016), solutions with a 2020 start of production may use >7MPix imagers at 36fps to allow combining FRC and MRC with one optic. Compared to the LRR, which is limited in y resolution (5m at 200m), the FRC can reach a resolution of a few cm (7cm at 250m) in both y and z.

LIDAR Sensors

LIDAR sensors may be grouped into three subgroups:

- The static or fixed beam LIDAR like the Continental ‘Emergency Brake Assist’ or the LeddarVu from Leddartech.

- The high resolution scanning LIDAR sensors that may scan in one direction only (y direction if forward looking) or in two directions (y and z) using one or two direction movable mirrors or rotating mirrors.

- The high resolution flash LIDAR sensors, that illuminate the complete scene with short strong laser pulses.

The static LIDAR is already on the road. The resolution of the static LIDAR is very limited - from 3-32 beams in different arrangements targeting some tens of metres of range. Usually the sensor is placed behind the windscreen and often combined with a machine vision camera.

A brand new technology so far not present in regular cars is the high resolution scanning LIDAR, such as the Velodyne LIDAR on the top of Google self-driving cars. In the future, cars are expected to have one LIDAR in the front grille and one in the back, and/or LIDARs at each of the four corners of the car.

The first step will be the rotating mirror LIDAR. Further evolution of the scanning LIDAR can use MEMS mirrors, optical phased arrays (OPA) or silicon photonics chips. LIDAR works well during the night, but on a sunny day the ambient illumination can be >100k lux, which limits its performance. The horizontal resolution of the scanning LIDAR is usually defined by the scanning laser light, even if a 2D receiver is used, because the size of the currently available receiver pixels prevents building a complete 2D receiver matrix.

The cost of a flash LIDAR sensor will depend on the cost of the 2D receiver and therefore on the pixel size as it alone defines the resolution. Pixel sensitivity and ambient light tolerance are conflicting targets.

For the higher resolution LIDAR there are two different applications. On the one hand, there is the far forward looking LIDAR (LIDAR-F) comparable to the LRR and the FRC, and on the other, there is the mid-range wide HFoV LIDAR (LIDAR-M) to be used like the MRC.

One of the biggest, and least debatable, hurdles limiting the applicable laser power for all LIDAR sensors is the eye safety requirement.

Remote sensing using mobile networks or C2X: For fast autonomous driving at levels 3, 4 and 5, the range that on-board sensors can cover is insufficient, and therefore information from cars in front or from the road infrastructure is needed. Mobileye’s CTO Amnon Shashua in his keynote at CVPR2016 explained a technique of real-time mapping, which will be vital for autonomous driving.

Cars will collect information about the road travelled at the time of use. The range that can be covered with adequate latency, the number of details transmitted and the number of cars that can generate and receive data depend strongly on the available communication bandwidth. The amount of data needed per kilometre of road should be in the range of a few kilobytes, so that 1,000 cars could be managed in a vicinity with reasonable latency from the collection and distribution servers.
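To give a feeling for why a few kilobytes per kilometre is a manageable budget, the following back-of-the-envelope sketch estimates the resulting data rate. The 5,000 bytes per kilometre and the 130km/h speed are assumptions chosen purely for illustration, and the function names are hypothetical; only the figure of 1,000 cars comes from the text.

```python
# Rough data-rate estimate for crowd-sourced road information.
BYTES_PER_KM = 5_000       # assumed value for "a few kilobytes" per km
SPEED_KMH = 130.0          # assumed motorway speed
CARS_IN_VICINITY = 1_000   # number of cars mentioned in the text


def per_car_bps(bytes_per_km: float, speed_kmh: float) -> float:
    """Average data rate of a single car in bit/s."""
    km_per_second = speed_kmh / 3600.0
    return bytes_per_km * km_per_second * 8.0


def aggregate_mbps(bytes_per_km: float, speed_kmh: float, cars: int) -> float:
    """Combined data rate of all cars in Mbit/s."""
    return per_car_bps(bytes_per_km, speed_kmh) * cars / 1e6


print(f"per car: {per_car_bps(BYTES_PER_KM, SPEED_KMH):.0f} bit/s")
print(f"all cars: {aggregate_mbps(BYTES_PER_KM, SPEED_KMH, CARS_IN_VICINITY):.2f} Mbit/s")
```

Under these assumptions each car produces on the order of 1.4kbit/s, and 1,000 cars together stay below 2Mbit/s. Protocol overhead and latency are ignored here.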

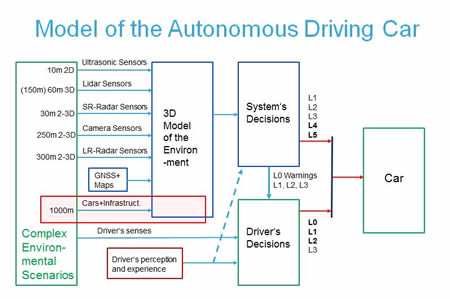

The aim of all these sensors is to generate a 3D representation of the environment that the autonomous vehicle’s decision system can use to understand the road situation. Because of the limited capability of each of the sensors, all sensor information needs to be correlated and weighted with confidence levels to build the final 3D environment. LIDAR works best during the night, when there is no ‘noise’ from the sun, but is limited in strong ambient light. Fortunately, in strong light, cameras work best, so a combination of radar, LIDAR and cameras provides enough information and redundancy to deliver reliable 3D detection in all situations.
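The idea of weighting sensor information with confidence levels can be sketched with a simple confidence-weighted average. This is a deliberately simplified illustration, not the method of any production fusion system, which would typically track objects over time with probabilistic filters. The class, the function and all numbers below are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Detection:
    sensor: str
    distance_m: float   # measured distance to the same object
    confidence: float   # 0.0 (ignore) to 1.0 (fully trusted), assumed scale


def fuse_distance(detections: list[Detection]) -> float:
    """Combine distance estimates, weighting each one by its confidence."""
    total_weight = sum(d.confidence for d in detections)
    if total_weight == 0.0:
        raise ValueError("no usable detections")
    weighted_sum = sum(d.distance_m * d.confidence for d in detections)
    return weighted_sum / total_weight


# Hypothetical bright-daylight case: the LIDAR reading is trusted less.
daylight = [
    Detection("radar", 50.0, 0.8),
    Detection("lidar", 49.0, 0.2),
    Detection("camera", 51.0, 1.0),
]
print(f"fused distance: {fuse_distance(daylight):.1f} m")
```

At night the weights would shift towards the LIDAR and away from the camera, while the fusion step itself stays the same.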

The sensor technology to enable autonomous driving exists and is well known, but clearly, some work remains ahead of the industry to achieve the safety requirements of the autonomous driving car. The target set by BMW, Intel and Mobileye to get a safe autonomous car on the road in 2021 seems realistic. STMicroelectronics is working with leading Tier1s on radar sensors, image sensors and LIDAR sensors to meet these requirements.